The code in this article targets Java 11+.

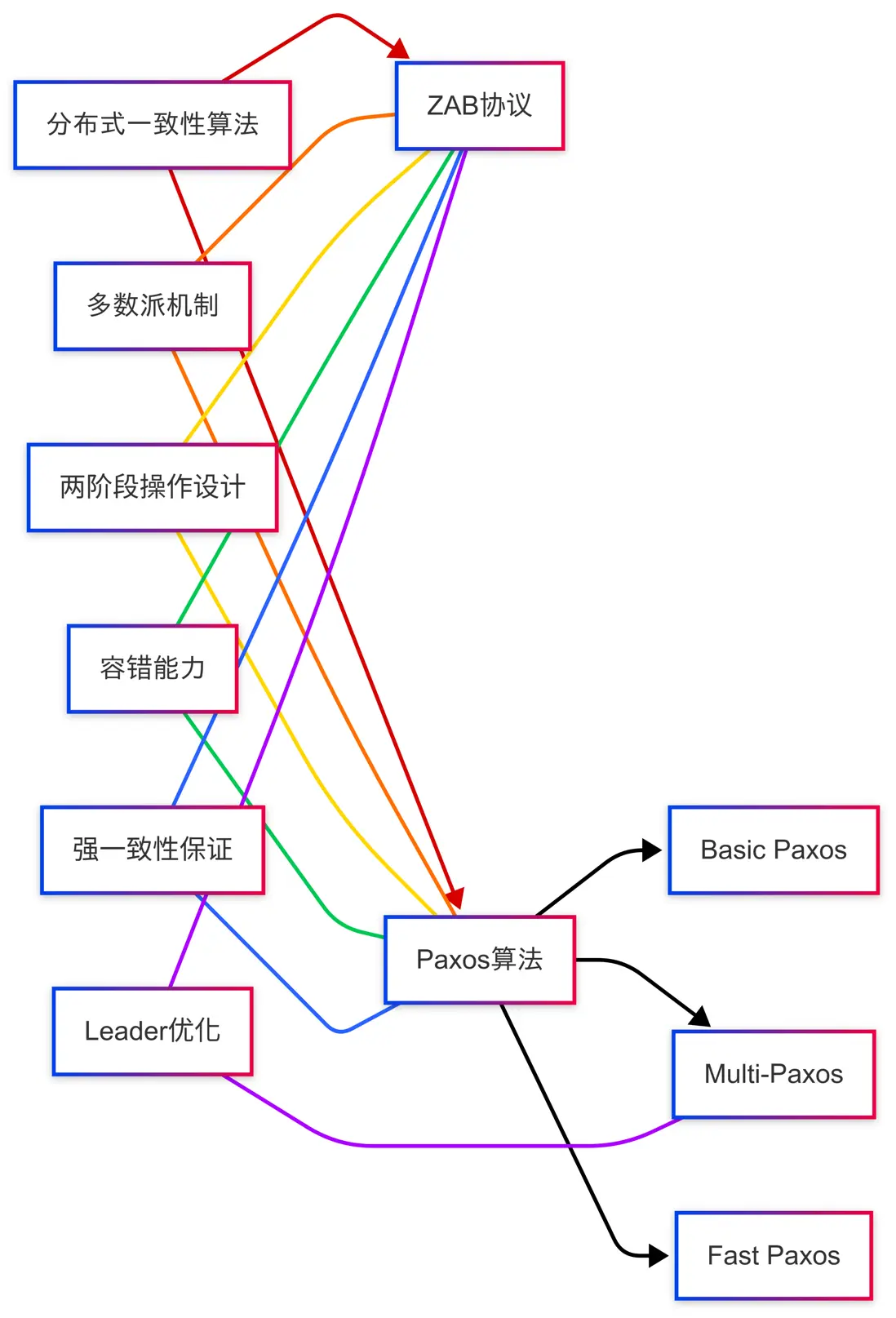

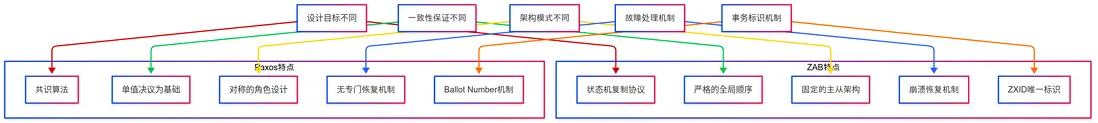

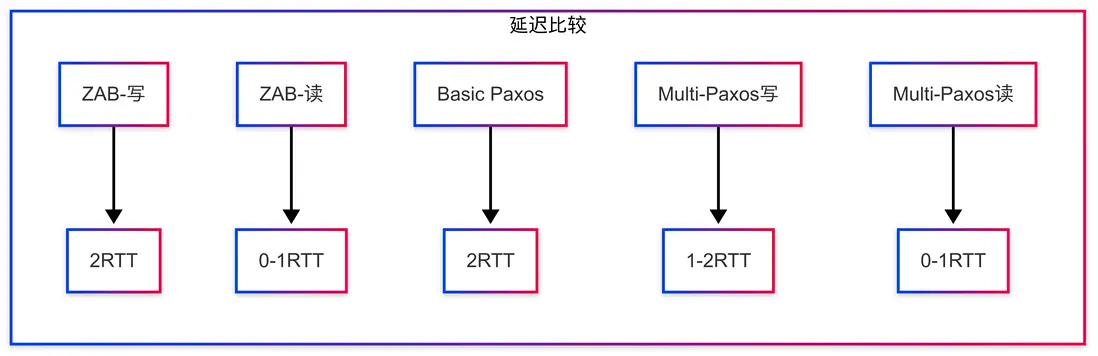

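Consistency is one of the core challenges in building reliable distributed systems. ZooKeeper's ZAB protocol and the Paxos algorithm are two mainstream solutions, and each has its own characteristics in theoretical foundation and engineering implementation. This article analyzes their implementation mechanisms, performance characteristics, and best practices.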

1. Basic Concepts

ZAB Protocol

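ZAB (ZooKeeper Atomic Broadcast) is a distributed consistency protocol designed specifically for ZooKeeper. Its core goal is to guarantee that data updates in a distributed system are atomic and are applied in a consistent order.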

Paxos Algorithm

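Paxos is a general-purpose distributed consensus algorithm proposed by Leslie Lamport. It is the theoretical foundation of many distributed systems and addresses the problem of reaching consensus over an unreliable network.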
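To make the idea of consensus a little more concrete, here is a minimal sketch of the acceptor role in single-decree Paxos. The class and field names (`PaxosAcceptor`, `Promise`) are hypothetical and chosen only for illustration; persistence, networking, and the proposer and learner roles are left out.

```java
// Hypothetical single-decree Paxos acceptor (illustration only, state kept in memory).
final class PaxosAcceptor {

    /** Reply to a prepare request. */
    static final class Promise {
        final boolean granted;       // true if the acceptor promised this ballot
        final long acceptedBallot;   // -1 if nothing has been accepted yet
        final String acceptedValue;  // null if nothing has been accepted yet

        Promise(boolean granted, long acceptedBallot, String acceptedValue) {
            this.granted = granted;
            this.acceptedBallot = acceptedBallot;
            this.acceptedValue = acceptedValue;
        }
    }

    private long promisedBallot = -1;
    private long acceptedBallot = -1;
    private String acceptedValue = null;

    // Phase 1: promise to ignore ballots lower than the given one and
    // report any value that was already accepted.
    synchronized Promise prepare(long ballot) {
        if (ballot > promisedBallot) {
            promisedBallot = ballot;
            return new Promise(true, acceptedBallot, acceptedValue);
        }
        return new Promise(false, acceptedBallot, acceptedValue);
    }

    // Phase 2: accept the value unless a higher ballot has been promised.
    synchronized boolean accept(long ballot, String value) {
        if (ballot >= promisedBallot) {
            promisedBallot = ballot;
            acceptedBallot = ballot;
            acceptedValue = value;
            return true;
        }
        return false;
    }
}
```

A proposer that receives granted promises from a majority of acceptors must propose the value attached to the highest `acceptedBallot` it saw, if any. That rule is what keeps a chosen value stable across competing proposers.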

2. ZAB Protocol Implementation

The ZAB protocol operates in two modes:

- Recovery mode: triggered when the system starts or the Leader crashes

- Broadcast mode: handles write requests during normal operation
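The switch between the two modes can be pictured as a tiny state machine. The sketch below is an assumption-laden illustration: `ZabModeTracker` and its method names are hypothetical and are not part of ZooKeeper or of the classes shown later in this article.

```java
// Hypothetical tracker for the two ZAB modes (illustration only).
final class ZabModeTracker {

    enum Mode { RECOVERY, BROADCAST }

    // A server always starts in recovery mode.
    private Mode mode = Mode.RECOVERY;

    synchronized Mode mode() {
        return mode;
    }

    // Called when the Leader is lost; the ensemble must recover again.
    synchronized void onLeaderLost() {
        mode = Mode.RECOVERY;
    }

    // Called once a quorum of Followers has synced with the new Leader.
    synchronized void onQuorumSynced() {
        mode = Mode.BROADCAST;
    }

    // Writes are only processed in broadcast mode.
    synchronized boolean canAcceptWrite() {
        return mode == Mode.BROADCAST;
    }
}
```

Keeping the mode behind one small class makes it explicit that write requests are rejected, or queued by the caller, while recovery is in progress.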

Core interface definitions

public interface ZabProcessor {

// Recovery-mode API

boolean startRecovery() throws RecoveryException;

// Broadcast-mode API

CompletableFuture<Boolean> processWrite(Request request);

CompletableFuture<Result> processRead(String key, ConsistencyLevel level);

// State queries

boolean isLeader();

long getCurrentEpoch();

}

public interface NetworkClient {

// Basic network connectivity

void connect(String serverId, String address, int port) throws IOException;

void disconnect(String serverId);

// ZAB protocol messages

ACK sendProposal(String serverId, ProposalPacket proposal) throws IOException;

void sendCommit(String serverId, CommitPacket commit) throws IOException;

LastZxidResponse sendEpochRequest(String serverId, EpochPacket epochPkt) throws IOException;

boolean sendTruncate(String serverId, TruncatePacket truncPkt) throws IOException;

boolean sendTransactions(String serverId, List<Transaction> txns) throws IOException;

boolean sendNewLeader(String serverId, NewLeaderPacket newLeaderPkt) throws IOException;

void sendHeartbeat(String serverId, long zxid) throws IOException;

void sendSnapshot(String serverId, byte[] snapshot, long zxid) throws IOException;

}

public interface StateMachine {

void apply(long zxid, byte[] command) throws Exception;

long getLastAppliedZxid();

byte[] takeSnapshot() throws Exception;

void restoreSnapshot(byte[] snapshot, long zxid) throws Exception;

}

ZAB recovery-mode implementation
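During recovery the new Leader has to find the highest zxid that a quorum of servers has reached. The sketch below shows one way to compute it, under the assumption that every server's log is prefix-consistent (a server whose last zxid is z also holds every earlier transaction). The class and method names are hypothetical, and this is not necessarily how ZooKeeper itself makes the decision.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical helper (illustration only).
final class QuorumZxid {

    // Returns the largest zxid z such that at least `quorum` servers report
    // a last zxid >= z, or -1 if fewer than `quorum` servers responded.
    static long highestZxidOnQuorum(List<Long> lastZxids, int quorum) {
        if (quorum <= 0 || lastZxids.size() < quorum) {
            return -1L;
        }
        List<Long> sorted = new ArrayList<>(lastZxids);
        sorted.sort(Collections.reverseOrder());
        // After sorting in descending order, the element at index quorum - 1
        // is reached by exactly the first `quorum` servers in the list.
        return sorted.get(quorum - 1);
    }
}
```

For example, with reported zxids 5, 5, 3, 7, 2 and a quorum of 3, the result is 5: three servers have reached zxid 5, but only one has reached 7.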

public class ZABRecovery {

private final AtomicLong zxid = new AtomicLong(0);

private final AtomicInteger epoch = new AtomicInteger(0);

private volatile ServerState state = ServerState.LOOKING;

private final Logger logger = LoggerFactory.getLogger(ZABRecovery.class);

private final ConcurrentMap<String, ServerData> serverDataMap;

private final int quorumSize;

private final NetworkClient networkClient;

private final StateMachine stateMachine;

private final String serverId;

// Constructor

public ZABRecovery(String serverId, int quorumSize, NetworkClient networkClient,

StateMachine stateMachine) {

this.serverId = serverId;

this.quorumSize = quorumSize;

this.networkClient = networkClient;

this.stateMachine = stateMachine;

this.serverDataMap = new ConcurrentHashMap<>();

}

// Leader recovery procedure

public boolean startRecovery() throws RecoveryException {

MDC.put("component", "zab-recovery");

MDC.put("serverId", serverId);

try {

// 1. Advance the election epoch

int newEpoch = epoch.incrementAndGet();

logger.info("Starting recovery with epoch: {}", newEpoch);

// 2. Discovery phase: collect the state of every Follower

Map<Long, Set<String>> commitMap = discoverFollowerStates();

// 3. Determine the truncation point and the commit point

long truncateZxid = determineMaxCommittedZxid(commitMap);

logger.info("Determined truncate zxid: {}", Long.toHexString(truncateZxid));

// 4. Resolve possible conflicts (for example after a split-brain)

resolveConflictsAfterPartition(truncateZxid, commitMap);

// 5. Synchronization phase: replay historical transactions to the Followers

syncFollowers(truncateZxid);

// 6. Switch to broadcast mode

state = ServerState.LEADING;

logger.info("Recovery completed, switching to broadcast mode");

return true;

} catch (IOException e) {

logger.error("Recovery failed due to I/O error", e);

state = ServerState.LOOKING;

throw new RecoveryException("I/O error during recovery", e);

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

logger.warn("Recovery interrupted", e);

state = ServerState.LOOKING;

throw new RecoveryException("Recovery process interrupted", e);

} catch (Exception e) {

logger.error("Unexpected error during recovery", e);

state = ServerState.LOOKING;

throw new RecoveryException("Unexpected error during recovery", e);

} finally {

MDC.remove("component");

MDC.remove("serverId");

}

}

// Discovery phase: collect the latest transaction info from every Follower

private Map<Long, Set<String>> discoverFollowerStates() throws IOException, InterruptedException {

Map<Long, Set<String>> acceptedZxids = new ConcurrentHashMap<>();

CountDownLatch latch = new CountDownLatch(serverDataMap.size());

List<CompletableFuture<?>> futures = new ArrayList<>();

// Send a CEPOCH message to every Follower

for (var entry : serverDataMap.entrySet()) {

final String targetServerId = entry.getKey();

final ServerData serverData = entry.getValue();

CompletableFuture<Void> future = CompletableFuture.runAsync(() -> {

MDC.put("targetServerId", targetServerId);

try {

// Send the new epoch

EpochPacket epochPkt = new EpochPacket(epoch.get());

LastZxidResponse response = networkClient.sendEpochRequest(

targetServerId, epochPkt);

// Record this server's latest zxid

synchronized (acceptedZxids) {

acceptedZxids.computeIfAbsent(response.getLastZxid(), k -> new HashSet<>())

.add(targetServerId);

}

logger.info("Server {} last zxid: {}", targetServerId,

Long.toHexString(response.getLastZxid()));

} catch (IOException e) {

logger.error("Failed to discover state from server: {}", targetServerId, e);

} finally {

MDC.remove("targetServerId");

latch.countDown();

}

});

futures.add(future);

}

// Wait until every server has responded, or until the timeout expires

if (!latch.await(10, TimeUnit.SECONDS)) {

logger.warn("Discovery phase timed out, proceeding with available responses");

}

// Cancel tasks that have not finished

for (CompletableFuture<?> future : futures) {

if (!future.isDone()) {

future.cancel(true);

}

}

return acceptedZxids;

}

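// Determine the highest zxid that a quorum of servers has reached

private long determineMaxCommittedZxid(Map<Long, Set<String>> commitMap) {

// Assumes prefix-consistent logs: a server whose last zxid is z also holds

// every earlier transaction, so servers are counted cumulatively from the

// highest reported zxid downwards rather than by exact match.

int quorum = getQuorum();

int serversSeen = 0;

List<Long> zxids = new ArrayList<>(commitMap.keySet());

zxids.sort(Collections.reverseOrder());

for (long candidate : zxids) {

serversSeen += commitMap.get(candidate).size();

if (serversSeen >= quorum) {

return candidate;

}

}

return 0;

}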

// Resolve data conflicts that may remain after a network partition

private void resolveConflictsAfterPartition(long truncateZxid,

Map<Long, Set<String>> commitMap) {

logger.info("Checking for potential conflicts after network partition");

// 1. Identify potentially conflicting transactions: higher zxids not held by a majority

List<ConflictingTransaction> conflicts = new ArrayList<>();

for (var entry : commitMap.entrySet()) {

long txnZxid = entry.getKey();

Set<String> servers = entry.getValue();

// The zxid is beyond the chosen truncation point but was not confirmed by a majority

if (txnZxid > truncateZxid && servers.size() < getQuorum()) {

// Extract the epoch of each zxid

int txnEpoch = ZxidUtils.getEpochFromZxid(txnZxid);

int truncateEpoch = ZxidUtils.getEpochFromZxid(truncateZxid);

conflicts.add(new ConflictingTransaction(txnZxid, truncateZxid,

txnEpoch, truncateEpoch,

servers));

}

}

// 2. Handle the conflicts

if (!conflicts.isEmpty()) {

logger.warn("Found {} potential conflicting transactions after partition",

conflicts.size());

for (ConflictingTransaction conflict : conflicts) {

if (conflict.isFromHigherEpoch()) {

logger.warn("Conflict: transaction with zxid {} from higher epoch {} " +

"found but not in majority. Will be discarded.",

Long.toHexString(conflict.getConflictZxid()),

conflict.getConflictEpoch());

} else {

logger.warn("Conflict: transaction with zxid {} from same epoch {} " +

"found but not in majority. Will be discarded.",

Long.toHexString(conflict.getConflictZxid()),

conflict.getConflictEpoch());

}

// Tell these servers to truncate the conflicting transactions

notifyServersToTruncate(conflict.getServers(), truncateZxid);

}

} else {

logger.info("No conflicting transactions found");

}

}

// Tell servers to truncate transactions beyond the safe point

private void notifyServersToTruncate(Set<String> servers, long truncateZxid) {

for (String serverId : servers) {

CompletableFuture.runAsync(() -> {

try {

TruncatePacket truncPkt = new TruncatePacket(truncateZxid);

boolean success = networkClient.sendTruncate(serverId, truncPkt);

if (success) {

logger.info("Successfully notified server {} to truncate to zxid {}",

serverId, Long.toHexString(truncateZxid));

} else {

logger.warn("Failed to notify server {} to truncate", serverId);

}

} catch (IOException e) {

logger.error("Error notifying server {} to truncate", serverId, e);

}

});

}

}

// Synchronization phase: replay historical transactions to the Followers

private void syncFollowers(long truncateZxid) throws IOException, InterruptedException {

// Load all transactions starting from truncateZxid

List<Transaction> txns = loadTransactionsFromLog(truncateZxid);

logger.info("Syncing {} transactions to followers", txns.size());

// Sync all Followers in parallel

CountDownLatch syncLatch = new CountDownLatch(serverDataMap.size());

AtomicInteger successCount = new AtomicInteger(0);

List<CompletableFuture<?>> futures = new ArrayList<>();

for (var entry : serverDataMap.entrySet()) {

final String targetServerId = entry.getKey();

final ServerData serverData = entry.getValue();

CompletableFuture<Void> future = CompletableFuture.runAsync(() -> {

MDC.put("targetServerId", targetServerId);

try {

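// Check whether the Follower is so far behind that it needs a snapshot

long followerZxid = serverData.getLastZxid();

if (truncateZxid - followerZxid > SNAPSHOT_THRESHOLD) {

syncFollowerWithSnapshot(targetServerId, followerZxid);

// A Follower caught up via snapshot also counts towards the quorum

successCount.incrementAndGet();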

} else {

// 1. Send TRUNC so the Follower truncates its log

TruncatePacket truncPkt = new TruncatePacket(truncateZxid);

if (networkClient.sendTruncate(targetServerId, truncPkt)) {

// 2. Send DIFF with the missing transactions

if (networkClient.sendTransactions(targetServerId, txns)) {

// 3. Send NEWLEADER to confirm that sync is complete

NewLeaderPacket newLeaderPkt = new NewLeaderPacket(epoch.get());

if (networkClient.sendNewLeader(targetServerId, newLeaderPkt)) {

// Sync succeeded

successCount.incrementAndGet();

logger.info("Successfully synced server: {}", targetServerId);

}

}

}

}

} catch (IOException e) {

logger.error("Failed to sync server {} with {} transactions, last zxid: {}",

targetServerId, txns.size(), Long.toHexString(truncateZxid), e);

} finally {

MDC.remove("targetServerId");

syncLatch.countDown();

}

});

futures.add(future);

}

// Wait for sync to finish, or time out

if (!syncLatch.await(30, TimeUnit.SECONDS)) {

logger.warn("Sync phase timed out");

}

// Cancel tasks that have not finished

for (CompletableFuture<?> future : futures) {

if (!future.isDone()) {

future.cancel(true);

}

}

// Check whether enough servers synced successfully (same majority rule as getQuorum())

if (successCount.get() < getQuorum()) {

throw new QuorumNotFoundException("Failed to sync with quorum of followers",

successCount.get(), getQuorum());

}

}

// Use a snapshot to sync a Follower that is too far behind

private void syncFollowerWithSnapshot(String followerId, long followerZxid) throws IOException {

try {

logger.info("Follower {} is too far behind (zxid: {}), syncing with snapshot",

followerId, Long.toHexString(followerZxid));

// 1. Take a snapshot of the current state

byte[] snapshot = stateMachine.takeSnapshot();

// 2. Send the snapshot to the Follower

networkClient.sendSnapshot(followerId, snapshot, zxid.get());

logger.info("Successfully sent snapshot to follower: {}", followerId);

} catch (Exception e) {

logger.error("Failed to sync follower {} with snapshot", followerId, e);

throw new IOException("Snapshot sync failed", e);

}

}

// Load transactions from the transaction log

private List<Transaction> loadTransactionsFromLog(long fromZxid) throws IOException {

List<Transaction> result = new ArrayList<>();

// Placeholder: a real implementation would read transaction records from persistent storage

logger.info("Loading transactions starting from zxid: {}", Long.toHexString(fromZxid));

return result;

}

private int getQuorum() {

return quorumSize / 2 + 1;

}

// Constants

private static final long SNAPSHOT_THRESHOLD = 100_000; // use a snapshot when the gap exceeds 100,000 transactions

// Data structure describing a conflicting transaction

static class ConflictingTransaction {

private final long conflictZxid;

private final long truncateZxid;

private final int conflictEpoch;

private final int truncateEpoch;

private final Set<String> servers;

public ConflictingTransaction(long conflictZxid, long truncateZxid,

int conflictEpoch, int truncateEpoch,

Set<String> servers) {

this.conflictZxid = conflictZxid;

this.truncateZxid = truncateZxid;

this.conflictEpoch = conflictEpoch;

this.truncateEpoch = truncateEpoch;

this.servers = new HashSet<>(servers);

}

public boolean isFromHigherEpoch() {

return conflictEpoch > truncateEpoch;

}

public long getConflictZxid() {

return conflictZxid;

}

public int getConflictEpoch() {

return conflictEpoch;

}

public Set<String> getServers() {

return Collections.unmodifiableSet(servers);

}

}

// Other inner class definitions...

enum ServerState {

LOOKING, // searching for a Leader

FOLLOWING, // acting as Follower

LEADING // acting as Leader

}

}

ZAB broadcast-mode implementation
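Broadcast mode stamps every write with a zxid whose high 32 bits hold the epoch and whose low 32 bits hold a per-epoch counter. The recovery code also calls `ZxidUtils.getEpochFromZxid`, which this article does not define. A minimal sketch of such helpers, under the hypothetical class name `ZxidLayout`, might look like this:

```java
// Hypothetical helpers for the zxid layout described in this article:
// high 32 bits = epoch, low 32 bits = counter.
final class ZxidLayout {

    private static final long COUNTER_MASK = 0xFFFFFFFFL;

    // Packs an epoch and a counter into a single 64-bit zxid.
    static long makeZxid(int epoch, long counter) {
        if (counter < 0 || counter > COUNTER_MASK) {
            throw new IllegalArgumentException("counter out of 32-bit range: " + counter);
        }
        return ((long) epoch << 32) | counter;
    }

    // Extracts the epoch (high 32 bits).
    static int epochOf(long zxid) {
        return (int) (zxid >>> 32);
    }

    // Extracts the counter (low 32 bits) as an unsigned value.
    static long counterOf(long zxid) {
        return zxid & COUNTER_MASK;
    }
}
```

Because the epoch occupies the high bits, comparing two zxids as plain `long` values orders them first by epoch and then by counter, provided the epoch stays non-negative.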

public class ZABBroadcast implements AutoCloseable {

private final AtomicLong zxid;

private final AtomicInteger epoch;

private final ConcurrentMap<String, ServerData> followers;

private final Logger logger = LoggerFactory.getLogger(ZABBroadcast.class);

private final CircuitBreaker circuitBreaker;

private final NetworkClient networkClient;

private final StateMachine stateMachine;

private final String serverId;

private final ReadWriteLock rwLock = new ReentrantReadWriteLock();

private final ScheduledExecutorService scheduler;

private final MetricsCollector metrics;

private final RateLimiter heartbeatLogLimiter = RateLimiter.create(0.1); // at most one log line every 10 seconds

public ZABBroadcast(String serverId, AtomicLong zxid, AtomicInteger epoch,

NetworkClient networkClient, StateMachine stateMachine) {

this.serverId = serverId;

this.zxid = zxid;

this.epoch = epoch;

this.networkClient = networkClient;

this.stateMachine = stateMachine;

this.followers = new ConcurrentHashMap<>();

this.circuitBreaker = new CircuitBreaker(5, 10000); // opens after 5 failures, resets after 10 seconds

this.scheduler = Executors.newScheduledThreadPool(2, r -> {

Thread t = new Thread(r, "zab-scheduler-" + serverId);

t.setDaemon(true);

return t;

});

this.metrics = new MetricsCollector("zab_broadcast");

// Start the heartbeat task

scheduler.scheduleWithFixedDelay(this::sendHeartbeats,

500, 500, TimeUnit.MILLISECONDS);

}

// Register a Follower

public void addFollower(ServerData follower) {

followers.put(follower.getId(), follower);

}

// Leader handles a write request

public CompletableFuture<Boolean> processWrite(Request request) {

Stopwatch stopwatch = Stopwatch.createStarted();

MDC.put("component", "zab-broadcast");

MDC.put("serverId", serverId);

MDC.put("requestId", request.getId());

try {

return GlobalExceptionHandler.withExceptionHandling(

circuitBreaker.execute(() -> {

try {

// 1. Generate a zxid for the request (high 32 bits: epoch, low 32 bits: counter)

long newZxid = createNewZxid();

MDC.put("zxid", Long.toHexString(newZxid));

logger.info("Processing write request: {} with zxid: {}",

request.getId(), Long.toHexString(newZxid));

// 2. Send the proposal to every Follower

List<Future<ACK>> futures = sendToFollowers(request, newZxid);

// 3. Wait for ACKs from a majority of Followers

if (waitForMajority(futures)) {

// 4. Tell every Follower to commit the transaction

commit(newZxid);

logger.info("Request {} committed successfully", request.getId());

// 5. Record metrics

metrics.recordSuccessfulWrite(stopwatch.elapsed(TimeUnit.MILLISECONDS));

return CompletableFuture.completedFuture(true);

} else {

logger.warn("Failed to get majority ACKs for request {}", request.getId());

metrics.recordFailedWrite();

return CompletableFuture.completedFuture(false);

}

} catch (IOException e) {

logger.error("Failed to process write request: {}", request.getId(), e);

metrics.recordFailedWrite();

return CompletableFuture.failedFuture(

new ProcessingException("Failed to process write request", e));

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

logger.warn("Interrupted while processing write request: {}", request.getId(), e);

metrics.recordFailedWrite();

return CompletableFuture.failedFuture(

new ProcessingException("Interrupted during write processing", e));

}

})

);

} catch (CircuitBreakerOpenException e) {

logger.error("Circuit breaker is open, rejecting request: {}", request.getId());

metrics.recordRejectedWrite();

return CompletableFuture.failedFuture(

new ProcessingException("Circuit breaker open, system overloaded", e));

} finally {

MDC.remove("component");

MDC.remove("serverId");

MDC.remove("requestId");

MDC.remove("zxid");

}

}

// Handle a batch of write requests to improve throughput

public CompletableFuture<Map<String, Boolean>> processBatchWrite(List<Request> requests) {

if (requests.isEmpty()) {

return CompletableFuture.completedFuture(Collections.emptyMap());

}

Stopwatch stopwatch = Stopwatch.createStarted();

MDC.put("component", "zab-broadcast");

MDC.put("serverId", serverId);

MDC.put("batchSize", String.valueOf(requests.size()));

try {

return GlobalExceptionHandler.withExceptionHandling(

circuitBreaker.execute(() -> {

Map<String, Boolean> results = new HashMap<>();

try {

// Build the batch packet

BatchRequest batch = new BatchRequest();

for (Request req : requests) {

batch.addRequest(req);

results.put(req.getId(), false); // default to failure

}

// Generate a single zxid for the batch

long batchZxid = createNewZxid();

MDC.put("zxid", Long.toHexString(batchZxid));

logger.info("Processing batch of {} requests with zxid: {}",

requests.size(), Long.toHexString(batchZxid));

// Send the batch to every Follower

List<Future<ACK>> futures = sendBatchToFollowers(batch, batchZxid);

// Wait for majority acknowledgement

if (waitForMajority(futures)) {

// Commit the batch

commitBatch(batchZxid);

logger.info("Batch with {} requests committed successfully", requests.size());

// Mark every request as successful

for (Request req : requests) {

results.put(req.getId(), true);

}

metrics.recordSuccessfulBatchWrite(

requests.size(), stopwatch.elapsed(TimeUnit.MILLISECONDS));

} else {

logger.warn("Failed to get majority ACKs for batch");

metrics.recordFailedBatchWrite(requests.size());

}

} catch (Exception e) {

logger.error("Error processing batch write of {} requests", requests.size(), e);

metrics.recordFailedBatchWrite(requests.size());

}

return CompletableFuture.completedFuture(results);

})

);

} catch (CircuitBreakerOpenException e) {

logger.error("Circuit breaker is open, rejecting batch of {} requests", requests.size());

metrics.recordRejectedBatchWrite(requests.size());

return CompletableFuture.failedFuture(

new ProcessingException("Circuit breaker open, system overloaded", e));

} finally {

MDC.remove("component");

MDC.remove("serverId");

MDC.remove("batchSize");

MDC.remove("zxid");

}

}

// Read with the requested consistency guarantee

public CompletableFuture<Result> readWithConsistency(String key, ConsistencyLevel level) {

Stopwatch stopwatch = Stopwatch.createStarted();

MDC.put("component", "zab-broadcast");

MDC.put("serverId", serverId);

MDC.put("key", key);

MDC.put("consistency", level.name());

try {

ReadStrategy strategy = readStrategies.getOrDefault(

level, readStrategies.get(ConsistencyLevel.EVENTUAL));

CompletableFuture<Result> result = strategy.execute(key, () -> readLocal(key));

result.thenAccept(r ->

metrics.recordRead(level, stopwatch.elapsed(TimeUnit.MILLISECONDS)));

return result;

} catch (Exception e) {

logger.error("Error performing {} read for key: {}", level, key, e);

metrics.recordFailedRead(level);

return CompletableFuture.failedFuture(

new ProcessingException("Read operation failed", e));

} finally {

MDC.remove("component");

MDC.remove("serverId");

MDC.remove("key");

MDC.remove("consistency");

}

}

// Read from local state

private Result readLocal(String key) {

rwLock.readLock().lock();

try {

// Placeholder: a real implementation would read from the local database

return new Result(key, "value", true);

} finally {

rwLock.readLock().unlock();

}

}

// Generate a new zxid, handling counter overflow

private long createNewZxid() {

rwLock.writeLock().lock();

try {

long currentCounter = zxid.get() & 0xFFFFFFFFL;

// Detect and handle overflow

if (currentCounter >= 0xFFFFFFFFL) {

// The counter is about to overflow, so advance the epoch

int newEpoch = epoch.incrementAndGet();

logger.warn("ZXID counter overflow, incrementing epoch to {}", newEpoch);

long newZxid = ((long)newEpoch << 32); // reset the counter

zxid.set(newZxid);

return newZxid;

}

return zxid.incrementAndGet();

} finally {

rwLock.writeLock().unlock();

}

}

// Send a single-request proposal to every Follower

private List<Future<ACK>> sendToFollowers(Request request, long newZxid)

throws IOException {

// Delegates to the shared helper so the sending logic lives in one place

return sendProposalToFollowers(new ProposalPacket(newZxid, request), newZxid);

}

// Wait for a majority of responses

private boolean waitForMajority(List<Future<ACK>> futures)

throws InterruptedException {

int ackCount = 0;

int majority = (followers.size() / 2) + 1;

for (Future<ACK> future : futures) {

try {

ACK ack = future.get(5, TimeUnit.SECONDS);

if (ack != null && ack.isSuccess()) {

ackCount++;

if (ackCount >= majority) {

// Majority reached, return early

return true;

}

}

} catch (ExecutionException e) {

logger.warn("Error getting ACK", e.getCause());

} catch (TimeoutException e) {

logger.warn("Timeout waiting for ACK");

}

}

return ackCount >= majority;

}

// Tell every Follower to commit the transaction

private void commit(long zxid) throws IOException {

CommitPacket commit = new CommitPacket(zxid);

for (var entry : followers.entrySet()) {

final String targetServerId = entry.getKey();

CompletableFuture.runAsync(() -> {

MDC.put("targetServerId", targetServerId);

try {

networkClient.sendCommit(targetServerId, commit);

logger.debug("Sent commit to {} for zxid {}",

targetServerId, Long.toHexString(zxid));

} catch (IOException e) {

logger.error("Failed to send commit to follower {}, zxid: {}",

targetServerId, Long.toHexString(zxid), e);

} finally {

MDC.remove("targetServerId");

}

});

}

}

// Send a batch request

private List<Future<ACK>> sendBatchToFollowers(BatchRequest batch, long batchZxid)

throws IOException {

ProposalPacket proposal = new ProposalPacket(batchZxid, batch);

return sendProposalToFollowers(proposal, batchZxid);

}

// Commit a batch request

private void commitBatch(long batchZxid) throws IOException {

commit(batchZxid);

}

// Send heartbeats to every Follower

private void sendHeartbeats() {

long currentZxid = zxid.get();

for (var entry : followers.entrySet()) {

final String targetServerId = entry.getKey();

CompletableFuture.runAsync(() -> {

try {

networkClient.sendHeartbeat(targetServerId, currentZxid);

} catch (IOException e) {

// Heartbeat failed; rate-limit the log to avoid flooding

if (heartbeatLogLimiter.tryAcquire()) {

logger.debug("Failed to send heartbeat to {}", targetServerId, e);

}

}

});

}

}

// Send a proposal to every Follower (shared helper)

private List<Future<ACK>> sendProposalToFollowers(ProposalPacket proposal, long zxid)

throws IOException {

List<Future<ACK>> futures = new ArrayList<>();

ExecutorService executor = Executors.newFixedThreadPool(Math.max(1, followers.size()), // a pool size of 0 would throw IllegalArgumentException

r -> {

Thread t = new Thread(r, "proposal-sender-" + serverId);

t.setDaemon(true);

return t;

});

try {

for (var entry : followers.entrySet()) {

final String targetServerId = entry.getKey();

futures.add(executor.submit(() -> {

MDC.put("targetServerId", targetServerId);

try {

ACK ack = networkClient.sendProposal(targetServerId, proposal);

logger.debug("Received ACK from {} for zxid {}",

targetServerId, Long.toHexString(zxid));

return ack;

} catch (IOException e) {

logger.error("Failed to send proposal to follower {}, zxid: {}",

targetServerId, Long.toHexString(zxid), e);

return null;

} finally {

MDC.remove("targetServerId");

}

}));

}

} finally {

// shutdown() stops new submissions but lets in-flight sends finish;

// waitForMajority() enforces the per-ACK timeout, so no forced shutdownNow() here

executor.shutdown();

}

return futures;

}

// Read strategy interface and implementations

private interface ReadStrategy {

CompletableFuture<Result> execute(String key, Supplier<Result> readFromLocal);

}

private final Map<ConsistencyLevel, ReadStrategy> readStrategies = new EnumMap<>(ConsistencyLevel.class);

{

// Register the read strategies

readStrategies.put(ConsistencyLevel.LINEARIZABLE, new LinearizableReadStrategy());

readStrategies.put(ConsistencyLevel.SEQUENTIAL, new SequentialReadStrategy());

readStrategies.put(ConsistencyLevel.READ_YOUR_WRITES, new ReadYourWritesStrategy());

readStrategies.put(ConsistencyLevel.BOUNDED_STALENESS, new BoundedStalenessStrategy());

readStrategies.put(ConsistencyLevel.EVENTUAL, new EventualReadStrategy());

}

// Linearizable read strategy

private class LinearizableReadStrategy implements ReadStrategy {

private final AtomicLong leaseExpirationTime = new AtomicLong(0);

private final long leaderLeaseMs = 5000; // 5-second lease

@Override

public CompletableFuture<Result> execute(String key, Supplier<Result> readFromLocal) {

// The Leader must confirm it is still the Leader (lease mechanism)

if (System.currentTimeMillis() < leaseExpirationTime.get()) {

// Lease still valid, safe to read

return CompletableFuture.completedFuture(readFromLocal.get());

} else {

// Lease expired, re-acquire majority confirmation

return renewLease().thenApply(renewed -> {

if (renewed) {

return readFromLocal.get();

} else {

throw new ConsistencyException("Cannot guarantee linearizable read");

}

});

}

}

private CompletableFuture<Boolean> renewLease() {

// Placeholder: a real implementation must obtain majority confirmation before renewing the lease

leaseExpirationTime.set(System.currentTimeMillis() + leaderLeaseMs);

logger.info("Renewed leader lease until {}", leaseExpirationTime.get());

return CompletableFuture.completedFuture(true);

}

}

// Sequentially consistent read strategy

private class SequentialReadStrategy implements ReadStrategy {

@Override

public CompletableFuture<Result> execute(String key, Supplier<Result> readFromLocal) {

// Make sure all committed transactions have been applied

return ensureAppliedUpToDate()

.thenApply(v -> readFromLocal.get());

}

private CompletableFuture<Void> ensureAppliedUpToDate() {

// Placeholder: a real implementation would wait until all committed transactions are applied

logger.debug("Ensuring all committed transactions are applied");

return CompletableFuture.completedFuture(null);

}

}

// Read-your-writes strategy

private class ReadYourWritesStrategy implements ReadStrategy {

private final ConcurrentMap<String, Long> writeTimestamps = new ConcurrentHashMap<>();

@Override

public CompletableFuture<Result> execute(String key, Supplier<Result> readFromLocal) {

// Check whether a write to this key was recorded

Long writeTime = writeTimestamps.get(key);

if (writeTime != null) {

// Wait long enough for the write to have completed

long elapsed = System.currentTimeMillis() - writeTime;

if (elapsed < 100) { // assumes 100 ms is enough for a write to complete

try {

Thread.sleep(100 - elapsed);

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

}

}

}

return CompletableFuture.completedFuture(readFromLocal.get());

}

// Record a write

public void recordWrite(String key) {

writeTimestamps.put(key, System.currentTimeMillis());

}

}

// Bounded-staleness strategy

private class BoundedStalenessStrategy implements ReadStrategy {

private final ConcurrentMap<String, CacheEntry> cache = new ConcurrentHashMap<>();

private final long maxStalenessMs = 1000; // maximum staleness: 1 second

@Override

public CompletableFuture<Result> execute(String key, Supplier<Result> readFromLocal) {

// Check the cache

CacheEntry entry = cache.get(key);

if (entry != null) {

long age = System.currentTimeMillis() - entry.getTimestamp();

if (age <= maxStalenessMs) {

// Cache entry still fresh, return it directly

return CompletableFuture.completedFuture(entry.getResult());

}

}

// Entry missing or stale: read locally and refresh the cache

Result result = readFromLocal.get();

cache.put(key, new CacheEntry(result, System.currentTimeMillis()));

return CompletableFuture.completedFuture(result);

}

// Periodically evict expired cache entries

public void cleanup() {

long now = System.currentTimeMillis();

cache.entrySet().removeIf(entry ->

now - entry.getValue().getTimestamp() > maxStalenessMs);

}

}

// Eventual-consistency strategy

private class EventualReadStrategy implements ReadStrategy {

@Override

public CompletableFuture<Result> execute(String key, Supplier<Result> readFromLocal) {

// Read locally; the latest write is not guaranteed to be visible

return CompletableFuture.completedFuture(readFromLocal.get());

}

}

// Cache entry

private static class CacheEntry {

private final Result result;

private final long timestamp;

public CacheEntry(Result result, long timestamp) {

this.result = result;

this.timestamp = timestamp;

}

public Result getResult() {

return result;

}

public long getTimestamp() {

return timestamp;

}

}

@Override

public void close() {

try {

List<Runnable> pendingTasks = scheduler.shutdownNow();

if (!pendingTasks.isEmpty()) {

logger.warn("Scheduler shutdown with {} pending tasks", pendingTasks.size());

}

if (!scheduler.awaitTermination(5, TimeUnit.SECONDS)) {

logger.warn("Scheduler did not terminate in time");

}

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

logger.warn("Interrupted while waiting for scheduler termination");

}

}

// Circuit breaker implementation (safer version)

static class CircuitBreaker {

private final AtomicReference<State> state = new AtomicReference<>(State.CLOSED);

private final AtomicLong failureCount = new AtomicLong(0);

private final AtomicLong lastFailureTime = new AtomicLong(0);

private final int threshold;

private final long resetTimeoutMs;

private final StampedLock stateLock = new StampedLock();

private final Logger logger = LoggerFactory.getLogger(CircuitBreaker.class);

public enum State { CLOSED, OPEN, HALF_OPEN }

public CircuitBreaker(int threshold, long resetTimeoutMs) {

this.threshold = threshold;

this.resetTimeoutMs = resetTimeoutMs;

}

public <T> CompletableFuture<T> execute(Supplier<CompletableFuture<T>> action)

throws CircuitBreakerOpenException {

State currentState = getCurrentState();

if (currentState == State.OPEN) {

// Check whether to try the half-open state

if (System.currentTimeMillis() - lastFailureTime.get() > resetTimeoutMs) {

boolean transitioned = tryTransitionState(State.OPEN, State.HALF_OPEN);

if (!transitioned) {

throw new CircuitBreakerOpenException("Circuit breaker is open");

}

currentState = State.HALF_OPEN;

} else {

throw new CircuitBreakerOpenException("Circuit breaker is open");

}

}

final State executionState = currentState;

try {

CompletableFuture<T> future = action.get();

return future.handle((result, ex) -> {

if (ex != null) {

recordFailure();

throw new CompletionException(ex);

} else {

// Succeeded: reset the failure count

if (executionState == State.HALF_OPEN) {

tryTransitionState(State.HALF_OPEN, State.CLOSED);

}

failureCount.set(0);

return result;

}

});

} catch (Exception e) {

recordFailure();

throw e;

}

}

private void recordFailure() {

long stamp = stateLock.writeLock();

try {

long failures = failureCount.incrementAndGet();

lastFailureTime.set(System.currentTimeMillis());

if (failures >= threshold && state.get() == State.CLOSED) {

logger.warn("Circuit breaker opening after {} failures", failures);

state.set(State.OPEN);

}

} finally {

stateLock.unlockWrite(stamp);

}

}

private boolean tryTransitionState(State fromState, State toState) {

long stamp = stateLock.writeLock();

try {

if (state.get() == fromState) {

state.set(toState);

logger.info("Circuit breaker state changed from {} to {}", fromState, toState);

return true;

}

return false;

} finally {

stateLock.unlockWrite(stamp);

}

}

// Read the current state with an optimistic read

public State getCurrentState() {

long stamp = stateLock.tryOptimisticRead();

State result = state.get();

if (!stateLock.validate(stamp)) {

stamp = stateLock.readLock();

try {

result = state.get();

} finally {

stateLock.unlockRead(stamp);

}

}

return result;

}

}

// Global exception handler

static class GlobalExceptionHandler {

private static final Logger logger = LoggerFactory.getLogger(GlobalExceptionHandler.class);

public static <T> CompletableFuture<T> withExceptionHandling(CompletableFuture<T> future) {

return future.exceptionally(e -> {

Throwable cause = (e instanceof CompletionException && e.getCause() != null) ? e.getCause() : e; // guard against a null cause

if (cause instanceof ConsistencyException) {

logger.error("Consistency error: {}", cause.getMessage());

} else if (cause instanceof IOException) {

logger.error("I/O error: {}", cause.getMessage());

} else if (cause instanceof InterruptedException) {

Thread.currentThread().interrupt();

logger.warn("Operation interrupted");

} else {

logger.error("Unexpected error: {}", cause.getClass().getName(), cause);

}

throw new CompletionException(cause);

});

}

}

// Metrics collector

private static class MetricsCollector {

private final Counter writeRequests;

private final Counter writeSuccess;

private final Counter writeFailed;

private final Counter writeRejected;

private final Counter batchWrites;

private final Counter batchWriteRequests;

private final Counter readRequests;

private final Map<ConsistencyLevel, Counter> readsByLevel = new EnumMap<>(ConsistencyLevel.class);

private final Histogram writeLatency;

private final Histogram batchWriteLatency;

private final Map<ConsistencyLevel, Histogram> readLatency = new EnumMap<>(ConsistencyLevel.class);

public MetricsCollector(String prefix) {

this.writeRequests = Counter.build()

.name(prefix + "_write_requests_total")

.help("Total number of write requests").register();

this.writeSuccess = Counter.build()

.name(prefix + "_write_success_total")

.help("Total number of successful writes").register();

this.writeFailed = Counter.build()

.name(prefix + "_write_failed_total")

.help("Total number of failed writes").register();

this.writeRejected = Counter.build()

.name(prefix + "_write_rejected_total")

.help("Total number of rejected writes").register();

this.batchWrites = Counter.build()

.name(prefix + "_batch_writes_total")

.help("Total number of batch writes").register();

this.batchWriteRequests = Counter.build()

.name(prefix + "_batch_write_requests_total")

.help("Total number of requests in batch writes").register();

this.readRequests = Counter.build()

.name(prefix + "_read_requests_total")

.help("Total number of read requests").register();

this.writeLatency = Histogram.build()

.name(prefix + "_write_latency_ms")

.help("Write latency in milliseconds").register();

this.batchWriteLatency = Histogram.build()

.name(prefix + "_batch_write_latency_ms")

.help("Batch write latency in milliseconds").register();

// Initialize the counter and histogram for each consistency level

for (ConsistencyLevel level : ConsistencyLevel.values()) {

readsByLevel.put(level, Counter.build()

.name(prefix + "_reads_" + level.name().toLowerCase() + "_total")

.help("Total " + level + " reads").register());

readLatency.put(level, Histogram.build()

.name(prefix + "_read_" + level.name().toLowerCase() + "_latency_ms")

.help(level + " read latency in milliseconds").register());

}

}

public void recordSuccessfulWrite(long latencyMs) {

writeRequests.inc();

writeSuccess.inc();

writeLatency.observe(latencyMs);

}

public void recordFailedWrite() {

writeRequests.inc();

writeFailed.inc();

}

public void recordRejectedWrite() {

writeRequests.inc();

writeRejected.inc();

}

public void recordSuccessfulBatchWrite(int batchSize, long latencyMs) {

batchWrites.inc();

batchWriteRequests.inc(batchSize);

writeRequests.inc(batchSize);

writeSuccess.inc(batchSize);

batchWriteLatency.observe(latencyMs);

}

public void recordFailedBatchWrite(int batchSize) {

batchWrites.inc();

batchWriteRequests.inc(batchSize);

writeRequests.inc(batchSize);

writeFailed.inc(batchSize);

}

public void recordRejectedBatchWrite(int batchSize) {

batchWrites.inc();

batchWriteRequests.inc(batchSize);

writeRequests.inc(batchSize);

writeRejected.inc(batchSize);

}

public void recordRead(ConsistencyLevel level, long latencyMs) {

readRequests.inc();

readsByLevel.get(level).inc();

readLatency.get(level).observe(latencyMs);

}

public void recordFailedRead(ConsistencyLevel level) {

readRequests.inc();

// A failed-read counter could be added here

}

}

// Exception classes

public static class CircuitBreakerOpenException extends Exception {

public CircuitBreakerOpenException(String message) {

super(message);

}

}

public static class ConsistencyException extends RuntimeException {

public ConsistencyException(String message) {

super(message);

}

}

public static class ProcessingException extends RuntimeException {

public ProcessingException(String message, Throwable cause) {

super(message, cause);

}

}

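// Other inner classes and constants...

enum ConsistencyLevel {

LINEARIZABLE, // linearizable (strongest)

SEQUENTIAL, // sequential consistency

READ_YOUR_WRITES, // read-your-writes

BOUNDED_STALENESS, // bounded staleness

EVENTUAL // eventual consistency (weakest)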

}

}

Fast Leader Election algorithm
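The election listing that follows ranks candidates by zxid and then by server id, and declares a leader once a quorum of servers backs the same candidate. As a rough standalone sketch of that rule: the names `ElectionBallot` and `ElectionTally` are hypothetical and not part of the article's code, and, as an assumption that differs from the article's `Vote` type, each ballot here records the voter separately from the candidate so that the tally counts one ballot per voter.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical ballot: who cast it and which candidate it backs. */
final class ElectionBallot {
    final String voterId;      // server that cast this ballot
    final String candidateId;  // server it proposes as leader
    final long candidateZxid;  // last zxid logged by the candidate

    ElectionBallot(String voterId, String candidateId, long candidateZxid) {
        this.voterId = voterId;
        this.candidateId = candidateId;
        this.candidateZxid = candidateZxid;
    }
}

/** Hypothetical tally that keeps only the latest ballot from each voter. */
final class ElectionTally {
    private final Map<String, ElectionBallot> latestByVoter = new HashMap<>();
    private final int quorumSize;

    ElectionTally(int quorumSize) {
        this.quorumSize = quorumSize;
    }

    /** Positive if a backs the better candidate, negative if b does, 0 if they tie. */
    static int compareCandidates(ElectionBallot a, ElectionBallot b) {
        int byZxid = Long.compare(a.candidateZxid, b.candidateZxid);
        return byZxid != 0 ? byZxid : a.candidateId.compareTo(b.candidateId);
    }

    /** Records a ballot, replacing any earlier ballot from the same voter. */
    void record(ElectionBallot ballot) {
        latestByVoter.put(ballot.voterId, ballot);
    }

    /** Returns the candidate backed by at least quorumSize voters, or null if none. */
    String winner() {
        Map<String, Integer> counts = new HashMap<>();
        for (ElectionBallot b : latestByVoter.values()) {
            int n = counts.merge(b.candidateId, 1, Integer::sum);
            if (n >= quorumSize) {
                return b.candidateId;
            }
        }
        return null;
    }
}
```

Keying the map by voter means a server that changes its mind replaces its earlier ballot instead of being counted twice.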

public class FastLeaderElection {

private final AtomicLong logicalClock = new AtomicLong(0);

private final ConcurrentMap<String, Vote> receivedVotes = new ConcurrentHashMap<>();

private final String serverId;

private final NetworkManager networkManager;

private final int quorumSize;

private final AtomicInteger electionAttempts = new AtomicInteger(0);

private final Logger logger = LoggerFactory.getLogger(FastLeaderElection.class);

private final ZxidUtils zxidUtils;

public FastLeaderElection(String serverId, int quorumSize,

NetworkManager networkManager, ZxidUtils zxidUtils) {

this.serverId = serverId;

this.quorumSize = quorumSize;

this.networkManager = networkManager;

this.zxidUtils = zxidUtils;

}

public String lookForLeader() throws InterruptedException {

MDC.put("component", "fast-leader-election");

MDC.put("serverId", serverId);

try {

// Increment the logical clock

long newLogicalClock = logicalClock.incrementAndGet();

logger.info("Starting leader election with logical clock: {}", newLogicalClock);

// Initialize the vote: vote for ourselves

Vote vote = new Vote(serverId, zxidUtils.getLastZxid(), newLogicalClock);

receivedVotes.clear();

receivedVotes.put(serverId, vote);

// Broadcast the vote to all other servers

networkManager.broadcastVote(vote);

// Election timeout

long startTime = System.currentTimeMillis();

long maxTimeout = 60000; // 60-second upper bound

// Election loop

Map<String, Integer> voteCounter = new HashMap<>();

String currentLeader = null;

while (System.currentTimeMillis() - startTime < maxTimeout) {

// Receive a vote

Vote receivedVote = networkManager.receiveVote(200); // 200 ms timeout

if (receivedVote != null) {

MDC.put("candidateId", receivedVote.getServerId());

logger.debug("Received vote from {}: zxid={}, logicalClock={}",

receivedVote.getServerId(),

Long.toHexString(receivedVote.getZxid()),

receivedVote.getLogicalClock());

// Check the logical clock

if (receivedVote.getLogicalClock() > newLogicalClock) {

// A higher logical clock was seen: adopt it and restart the election

logicalClock.set(receivedVote.getLogicalClock());

logger.info("Found higher logical clock: {}, restarting election",

receivedVote.getLogicalClock());

MDC.remove("candidateId");

electionAttempts.set(0); // reset the attempt counter

return lookForLeader(); // restart the election

} else if (receivedVote.getLogicalClock() < newLogicalClock) {

// Ignore votes from an older logical clock

logger.debug("Ignoring vote with older logical clock: {}",

receivedVote.getLogicalClock());

MDC.remove("candidateId");

continue;

}

// Compare the votes

int comparison = compareVotes(vote, receivedVote);

if (comparison < 0) {

// The received vote is better: adopt it as our own vote

vote = new Vote(receivedVote.getServerId(),

receivedVote.getZxid(),

newLogicalClock);

// Rebroadcast the updated vote

networkManager.broadcastVote(vote);

logger.info("Updated vote to server: {}", vote.getServerId());

}

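// Record the received vote.
// NOTE: Vote carries a single serverId that serves as both sender and candidate here,
// so this map holds at most one entry per candidate. A complete implementation would
// key the map by the sender's id and store the proposed leader separately.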

receivedVotes.put(receivedVote.getServerId(), receivedVote);

MDC.remove("candidateId");

// Tally the votes

voteCounter.clear();

for (Vote v : receivedVotes.values()) {

String candidate = v.getServerId();

voteCounter.put(candidate, voteCounter.getOrDefault(candidate, 0) + 1);

// Check whether any candidate has reached a quorum

if (voteCounter.get(candidate) >= quorumSize) {

currentLeader = candidate;

logger.info("Elected leader: {} with {} votes of {} required",

candidate, voteCounter.get(candidate), quorumSize);

break;

}

}

if (currentLeader != null) {

break; // a leader has been elected

}

}

}

if (currentLeader == null) {

// Election failed: back off exponentially to avoid livelock

handleElectionFailure();

logger.warn("Failed to elect a leader, retrying...");

return lookForLeader(); // retry

}

electionAttempts.set(0); // reset the attempt counter

return currentLeader;

} catch (Exception e) {

logger.error("Error during leader election", e);

// Increment the attempt counter and back off

handleElectionFailure();

throw new LeaderElectionException("Leader election failed", e);

} finally {

MDC.remove("component");

MDC.remove("serverId");

}

}

// Handle a failed election: back off exponentially to avoid livelock

private void handleElectionFailure() {

int attempts = electionAttempts.incrementAndGet();

// Exponential backoff, capped at 30 seconds

int backoffMs = Math.min(1000 * (1 << Math.min(attempts, 10)), 30000);

// Add random jitter so that servers do not retry in lockstep

backoffMs += ThreadLocalRandom.current().nextInt(backoffMs / 2);

logger.info("Election attempt {} failed, backing off for {}ms", attempts, backoffMs);

try {

Thread.sleep(backoffMs);

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

logger.warn("Interrupted during election backoff");

}

}

// Compare two votes: negative means v2 is better, 0 means equal, positive means v1 is better

private int compareVotes(Vote v1, Vote v2) {

// Compare zxid first: the larger zxid has higher priority

long zxidDiff = ZxidUtils.compareZxid(v1.getZxid(), v2.getZxid());

if (zxidDiff != 0) {

return Long.signum(zxidDiff); // integer sign, no float conversion

}

// Equal zxid: fall back to comparing serverId

return v1.getServerId().compareTo(v2.getServerId());

}

// Inner classes and utility methods...

static class Vote {

private final String serverId;

private final long zxid;

private final long logicalClock;

public Vote(String serverId, long zxid, long logicalClock) {

this.serverId = serverId;

this.zxid = zxid;

this.logicalClock = logicalClock;

}

public String getServerId() {

return serverId;

}

public long getZxid() {

return zxid;

}

public long getLogicalClock() {

return logicalClock;

}

@Override

public String toString() {

return "Vote{serverId='" + serverId + "', zxid=" + Long.toHexString(zxid) +

", logicalClock=" + logicalClock + '}';

}

}

// Custom exception class

public static class LeaderElectionException extends RuntimeException {

public LeaderElectionException(String message, Throwable cause) {

super(message, cause);

}

}

}

Network client implementation example
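The client listing that follows matches each response to its request through an id-to-future map. One detail worth sketching separately is cleanup: an entry should leave the map whether the request ends with a response, an error, or a timeout. The class below is a minimal sketch of that idea using only the JDK; `PendingRequests` is a hypothetical name and is not the article's `RequestRegistry`.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical registry that pairs request ids with pending response futures. */
final class PendingRequests<T> {
    private final ConcurrentMap<Long, CompletableFuture<T>> pending = new ConcurrentHashMap<>();
    private final AtomicLong ids = new AtomicLong();

    long nextId() {
        return ids.incrementAndGet();
    }

    /** Registers a pending request that fails with TimeoutException after the deadline. */
    CompletableFuture<T> register(long id, long timeout, TimeUnit unit) {
        CompletableFuture<T> future = new CompletableFuture<>();
        pending.put(id, future);
        future.orTimeout(timeout, unit); // CompletableFuture.orTimeout, available since Java 9
        // Remove the entry however the future ends: response, error, or timeout.
        future.whenComplete((value, error) -> pending.remove(id, future));
        return future;
    }

    /** Called by the network handler when a response arrives; false if nobody is waiting. */
    boolean complete(long id, T response) {
        CompletableFuture<T> future = pending.get(id);
        return future != null && future.complete(response);
    }

    int size() {
        return pending.size();
    }
}
```

With this shape the caller only waits on the returned future; it never has to remember to unregister the id on a failure path.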

public class NettyNetworkClient implements NetworkClient {

private final EventLoopGroup workerGroup;

private final Bootstrap bootstrap;

private final ConcurrentMap<String, Channel> channels = new ConcurrentHashMap<>();

private final int connectionTimeoutMs;

private final Logger logger = LoggerFactory.getLogger(NettyNetworkClient.class);

public NettyNetworkClient(int connectionTimeoutMs) {

this.connectionTimeoutMs = connectionTimeoutMs;

this.workerGroup = new NioEventLoopGroup();

this.bootstrap = new Bootstrap()

.group(workerGroup)

.channel(NioSocketChannel.class)

.option(ChannelOption.SO_KEEPALIVE, true)

.option(ChannelOption.TCP_NODELAY, true)

.option(ChannelOption.CONNECT_TIMEOUT_MILLIS, connectionTimeoutMs)

.handler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel ch) {

ch.pipeline()

.addLast(new LengthFieldBasedFrameDecoder(1048576, 0, 4, 0, 4))

.addLast(new LengthFieldPrepender(4))

.addLast(new PacketEncoder())

.addLast(new PacketDecoder())

.addLast(new ClientHandler());

}

});

}

@Override

public void connect(String serverId, String address, int port) throws IOException {

try {

ChannelFuture future = bootstrap.connect(address, port);

boolean connected = future.await(connectionTimeoutMs, TimeUnit.MILLISECONDS);

if (!connected || !future.isSuccess()) {

throw new IOException("Failed to connect to " + serverId + " at " +

address + ":" + port);

}

channels.put(serverId, future.channel());

logger.info("Connected to server: {} at {}:{}", serverId, address, port);

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

throw new IOException("Interrupted while connecting to " + serverId, e);

} catch (Exception e) {

throw new IOException("Failed to connect to " + serverId, e);

}

}

@Override

public void disconnect(String serverId) {

Channel channel = channels.remove(serverId);

if (channel != null) {

channel.close();

logger.info("Disconnected from server: {}", serverId);

}

}

@Override

public ACK sendProposal(String serverId, ProposalPacket proposal) throws IOException {

MDC.put("targetServerId", serverId);

try {

Channel channel = getChannel(serverId);

RequestFuture<ACK> future = new RequestFuture<>();

// Register the request-to-response mapping

Long requestId = generateRequestId();

RequestRegistry.register(requestId, future);

// Wrap the request

Request request = new Request(requestId, RequestType.PROPOSAL, proposal);

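// Send the request and wait for the response

ACK ack;

try {

channel.writeAndFlush(request).sync();

ack = future.get(5, TimeUnit.SECONDS);

} finally {

// Drop the pending entry if nothing completed it (no-op after a normal response),
// so that timed-out or failed requests do not accumulate in RequestRegistry

RequestRegistry.completeExceptionally(requestId,

new IOException("Request " + requestId + " abandoned"));

}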

if (ack == null) {

throw new IOException("Received null ACK from " + serverId);

}

return ack;

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

throw new IOException("Interrupted while sending proposal to " + serverId, e);

} catch (TimeoutException e) {

throw new IOException("Timed out waiting for ACK from " + serverId, e);

} catch (ExecutionException e) {

throw new IOException("Error sending proposal to " + serverId, e.getCause());

} finally {

MDC.remove("targetServerId");

}

}

@Override

public void sendCommit(String serverId, CommitPacket commit) throws IOException {

MDC.put("targetServerId", serverId);

try {

Channel channel = getChannel(serverId);

// Wrap the request

Request request = new Request(generateRequestId(), RequestType.COMMIT, commit);

// Send the request without waiting for a response

channel.writeAndFlush(request);

} catch (Exception e) {

throw new IOException("Error sending commit to " + serverId, e);

} finally {

MDC.remove("targetServerId");

}

}

@Override

public LastZxidResponse sendEpochRequest(String serverId, EpochPacket epochPkt)

throws IOException {

MDC.put("targetServerId", serverId);

try {

Channel channel = getChannel(serverId);

RequestFuture<LastZxidResponse> future = new RequestFuture<>();

// Register the request-to-response mapping

Long requestId = generateRequestId();

RequestRegistry.register(requestId, future);

// Wrap the request

Request request = new Request(requestId, RequestType.EPOCH, epochPkt);

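// Send the request and wait for the response

LastZxidResponse response;

try {

channel.writeAndFlush(request).sync();

response = future.get(5, TimeUnit.SECONDS);

} finally {

// Drop the pending entry if nothing completed it (no-op after a normal response),
// so that timed-out or failed requests do not accumulate in RequestRegistry

RequestRegistry.completeExceptionally(requestId,

new IOException("Request " + requestId + " abandoned"));

}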

if (response == null) {

throw new IOException("Received null LastZxidResponse from " + serverId);

}

return response;

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

throw new IOException("Interrupted while sending epoch request to " + serverId, e);

} catch (TimeoutException e) {

throw new IOException("Timed out waiting for LastZxidResponse from " + serverId, e);

} catch (ExecutionException e) {

throw new IOException("Error sending epoch request to " + serverId, e.getCause());

} finally {

MDC.remove("targetServerId");

}

}

// Remaining interface methods omitted...

@Override

public void sendSnapshot(String serverId, byte[] snapshot, long zxid) throws IOException {

MDC.put("targetServerId", serverId);

try {

Channel channel = getChannel(serverId);

// Snapshots can be large, so send them in chunks

int chunkSize = 1024 * 1024; // 1 MB chunks

int totalChunks = (snapshot.length + chunkSize - 1) / chunkSize;

logger.info("Sending snapshot to {}, size: {} bytes, chunks: {}",

serverId, snapshot.length, totalChunks);

// Send the snapshot metadata

SnapshotMetadata metadata = new SnapshotMetadata(zxid, snapshot.length, totalChunks);

Request metadataRequest = new Request(generateRequestId(),

RequestType.SNAPSHOT_META, metadata);

channel.writeAndFlush(metadataRequest).sync();

// Send the snapshot data chunk by chunk

for (int i = 0; i < totalChunks; i++) {

int offset = i * chunkSize;

int length = Math.min(chunkSize, snapshot.length - offset);

byte[] chunk = new byte[length];

System.arraycopy(snapshot, offset, chunk, 0, length);

SnapshotChunk snapshotChunk = new SnapshotChunk(i, totalChunks, chunk);

Request chunkRequest = new Request(generateRequestId(),

RequestType.SNAPSHOT_CHUNK, snapshotChunk);

channel.writeAndFlush(chunkRequest).sync();

if (i % 10 == 0 || i == totalChunks - 1) {

logger.debug("Sent snapshot chunk {}/{} to {}",

i + 1, totalChunks, serverId);

}

}

logger.info("Snapshot sent successfully to {}", serverId);

} catch (Exception e) {

throw new IOException("Error sending snapshot to " + serverId, e);

} finally {

MDC.remove("targetServerId");

}

}

// Look up the channel connected to the given server

private Channel getChannel(String serverId) throws IOException {

Channel channel = channels.get(serverId);

if (channel == null || !channel.isActive()) {

throw new IOException("No active connection to server: " + serverId);

}

return channel;

}

// Generate a unique request ID

private static final AtomicLong requestIdGenerator = new AtomicLong(0);

private static Long generateRequestId() {

return requestIdGenerator.incrementAndGet();

}

// Shut down the client

public void shutdown() {

// Close all connections

for (Channel channel : channels.values()) {

channel.close();

}

channels.clear();

// Shut down the event loop group

workerGroup.shutdownGracefully();

}

// Request types

enum RequestType {

PROPOSAL, COMMIT, EPOCH, TRUNCATE, TRANSACTION, NEWLEADER, HEARTBEAT,

SNAPSHOT_META, SNAPSHOT_CHUNK

}

// Request object

static class Request {

private final Long id;

private final RequestType type;

private final Object payload;

public Request(Long id, RequestType type, Object payload) {

this.id = id;

this.type = type;

this.payload = payload;

}

public Long getId() {

return id;

}

public RequestType getType() {

return type;

}

public Object getPayload() {

return payload;

}

}

// Snapshot metadata

static class SnapshotMetadata {

private final long zxid;

private final int totalSize;

private final int totalChunks;

public SnapshotMetadata(long zxid, int totalSize, int totalChunks) {

this.zxid = zxid;

this.totalSize = totalSize;

this.totalChunks = totalChunks;

}

public long getZxid() {

return zxid;

}

public int getTotalSize() {

return totalSize;

}

public int getTotalChunks() {

return totalChunks;

}

}

// Snapshot data chunk

static class SnapshotChunk {

private final int chunkIndex;

private final int totalChunks;

private final byte[] data;

public SnapshotChunk(int chunkIndex, int totalChunks, byte[] data) {

this.chunkIndex = chunkIndex;

this.totalChunks = totalChunks;

this.data = data.clone(); // defensive copy

}

public int getChunkIndex() {

return chunkIndex;

}

public int getTotalChunks() {

return totalChunks;

}

public byte[] getData() {

return data.clone(); // defensive copy

}

}

// Registry mapping request IDs to pending response futures

static class RequestRegistry {

private static final ConcurrentMap<Long, RequestFuture<?>> futures = new ConcurrentHashMap<>();

public static <T> void register(Long requestId, RequestFuture<T> future) {

futures.put(requestId, future);

}

@SuppressWarnings("unchecked")

public static <T> void complete(Long requestId, T response) {

RequestFuture<T> future = (RequestFuture<T>) futures.remove(requestId);

if (future != null) {

future.complete(response);

}

}

public static void completeExceptionally(Long requestId, Throwable exception) {

RequestFuture<?> future = futures.remove(requestId);

if (future != null) {

future.completeExceptionally(exception);

}

}

}

// Request future

static class RequestFuture<T> extends CompletableFuture<T> {

// Extends CompletableFuture; no additional implementation needed

}

// Client-side inbound handler

private class ClientHandler extends SimpleChannelInboundHandler<Response> {

@Override

protected void channelRead0(ChannelHandlerContext ctx, Response response) {

Long requestId = response.getRequestId();

if (response.isSuccess()) {

RequestRegistry.complete(requestId, response.getPayload());

} else {

RequestRegistry.completeExceptionally(requestId,

new IOException("Request failed: " + response.getErrorMessage()));

}

}

@Override

public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {

logger.error("Network client exception", cause);

ctx.close();

}

}

// Response object

static class Response {

private final Long requestId;

private final boolean success;

private final Object payload;

private final String errorMessage;

public Response(Long requestId, boolean success, Object payload, String errorMessage) {

this.requestId = requestId;

this.success = success;

this.payload = payload;

this.errorMessage = errorMessage;

}

public Long getRequestId() {

return requestId;

}

public boolean isSuccess() {

return success;

}

public Object getPayload() {

return payload;

}

public String getErrorMessage() {

return errorMessage;

}

}

// Encoder

static class PacketEncoder extends MessageToByteEncoder<Request> {

@Override

protected void encode(ChannelHandlerContext ctx, Request msg, ByteBuf out) throws Exception {

// A real implementation would use Protocol Buffers or a custom serializer;

// this is simplified for the example

byte[] bytes = serializeRequest(msg);

out.writeBytes(bytes);

}

private byte[] serializeRequest(Request request) {

// A real implementation should use a proper serialization mechanism;

// this placeholder returns an empty payload

return new byte[0];

}

}

// Decoder

static class PacketDecoder extends ByteToMessageDecoder {

@Override

protected void decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out) throws Exception {

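// A real implementation would use Protocol Buffers or a custom deserializer;

// this is simplified for the example.

// The LengthFieldBasedFrameDecoder earlier in the pipeline has already stripped the

// 4-byte length prefix, so the buffer holds one frame body and no length is read here.

byte[] data = new byte[in.readableBytes()];

in.readBytes(data);

Response response = deserializeResponse(data);

if (response != null) { // never add null to the decoder's output list

out.add(response);

}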

}

private Response deserializeResponse(byte[] data) {

// A real implementation should use a proper deserialization mechanism;

// this placeholder returns null

return null;

}

}

}

3. Paxos Algorithm Implementation

Core interface definitions

// Role interfaces

public interface Proposer {

CompletableFuture<Boolean> prepare(int ballot);

CompletableFuture<Boolean> propose(int ballot, Object value);

}
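Both `Proposer` methods take a ballot number, and Paxos depends on ballots being unique across nodes and increasing over time. A common numbering scheme, sketched here under stated assumptions, interleaves a local round counter with the node id. `BallotNumbers` is a hypothetical helper, it assumes node ids run from 0 to `totalNodes - 1`, and it uses `long` where the interfaces above use `int` simply to push overflow further away.

```java
/** Hypothetical generator of unique, increasing ballot numbers for one node. */
final class BallotNumbers {
    private final int nodeId;     // assumed to satisfy 0 <= nodeId < totalNodes
    private final int totalNodes;
    private long round = 0;       // local round counter

    BallotNumbers(int nodeId, int totalNodes) {
        if (totalNodes <= 0 || nodeId < 0 || nodeId >= totalNodes) {
            throw new IllegalArgumentException("nodeId must be in [0, totalNodes)");
        }
        this.nodeId = nodeId;
        this.totalNodes = totalNodes;
    }

    /** Next ballot owned by this node; greater than every ballot issued or observed so far. */
    synchronized long next() {
        round++;
        return round * totalNodes + nodeId;
    }

    /** Advances the round counter past a ballot seen from another node. */
    synchronized void observe(long seenBallot) {
        round = Math.max(round, seenBallot / totalNodes);
    }
}
```

Because a ballot is `round * totalNodes + nodeId`, two nodes can never produce the same number, and calling `observe` on a rejected prepare lets the next attempt outbid the competing proposer.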

public interface Acceptor {

CompletableFuture<Promise> handlePrepare(int ballot);

CompletableFuture<Accepted> handleAccept(int ballot, Object value);

}
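The `Acceptor` contract hides two distinct pieces of state: the highest ballot the acceptor has promised, and the ballot at which it last accepted a value. The sketch below keeps them apart for a single Paxos instance. It is a simplified, synchronous illustration with hypothetical names (`SingleDecreeAcceptor`, `PrepareReply`), not the article's implementation, and it leaves out persistence, which a real acceptor needs before replying.

```java
/** Hypothetical single-instance acceptor that tracks promised and accepted ballots separately. */
final class SingleDecreeAcceptor {

    /** Reply to a prepare request, carrying any previously accepted proposal. */
    static final class PrepareReply {
        final boolean ok;
        final long acceptedBallot;   // 0 if nothing has been accepted yet
        final Object acceptedValue;  // null if nothing has been accepted yet

        PrepareReply(boolean ok, long acceptedBallot, Object acceptedValue) {
            this.ok = ok;
            this.acceptedBallot = acceptedBallot;
            this.acceptedValue = acceptedValue;
        }
    }

    private long promisedBallot = 0;  // highest ballot promised so far
    private long acceptedBallot = 0;  // ballot of the accepted value, 0 if none
    private Object acceptedValue = null;

    /** Phase 1: promise to ignore lower ballots and report what was accepted before. */
    synchronized PrepareReply onPrepare(long ballot) {
        if (ballot > promisedBallot) {
            promisedBallot = ballot;
            return new PrepareReply(true, acceptedBallot, acceptedValue);
        }
        return new PrepareReply(false, acceptedBallot, acceptedValue);
    }

    /** Phase 2: accept unless a higher ballot has been promised in the meantime. */
    synchronized boolean onAccept(long ballot, Object value) {
        if (ballot >= promisedBallot) {
            promisedBallot = ballot;
            acceptedBallot = ballot;
            acceptedValue = value;
            return true;
        }
        return false;
    }
}
```

Keeping `acceptedBallot` separate is what lets a later proposer tell which of several reported values is the most recent.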

public interface Learner {

void learn(long instanceId, int ballot, Object value);

}

public interface NetworkClient {

CompletableFuture<Promise> sendPrepare(int nodeId, int ballot);

CompletableFuture<Accepted> sendAccept(int nodeId, int ballot, Object value);

void sendLearn(int nodeId, long instanceId, int ballot, Object value);

CompletableFuture<Map<Long, PrepareResponse>> sendPrepareAllInstances(int nodeId, int ballot);

CompletableFuture<Void> sendSnapshot(int nodeId, byte[] snapshot, long lastInstanceId);

}

public interface StateMachine {

CompletableFuture<Void> apply(long instanceId, byte[] command);

long getLastApplied();

CompletableFuture<byte[]> takeSnapshot();

CompletableFuture<Void> restoreSnapshot(byte[] snapshot, long instanceId);

}

Basic Paxos implementation
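The step that makes Basic Paxos safe is the proposer's choice of value after the prepare phase: if any acceptor in the quorum reports an accepted value, the proposer must propose the one with the highest accepted ballot, and may use its own value only when none is reported. A minimal sketch of that rule follows; `PromiseInfo` and `ValueSelection` are hypothetical names, not types from the listing below.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

/** Hypothetical summary of one acceptor's reply to a prepare request. */
final class PromiseInfo {
    final boolean ok;
    final long acceptedBallot;   // ballot of the previously accepted value, 0 if none
    final Object acceptedValue;  // previously accepted value, null if none

    PromiseInfo(boolean ok, long acceptedBallot, Object acceptedValue) {
        this.ok = ok;
        this.acceptedBallot = acceptedBallot;
        this.acceptedValue = acceptedValue;
    }
}

final class ValueSelection {
    private ValueSelection() {
    }

    /**
     * Returns the value to propose in the accept phase, or empty if the
     * prepare phase did not gather promises from a majority of nodes.
     */
    static Optional<Object> chooseValue(List<PromiseInfo> replies, int totalNodes, Object ownValue) {
        int quorum = totalNodes / 2 + 1;
        long promises = replies.stream().filter(r -> r.ok).count();
        if (promises < quorum) {
            return Optional.empty();
        }
        Object chosen = replies.stream()
                .filter(r -> r.ok && r.acceptedValue != null)
                .max(Comparator.comparingLong((PromiseInfo r) -> r.acceptedBallot))
                .map(r -> r.acceptedValue)
                .orElse(ownValue);  // nothing accepted anywhere: free to use our own value
        return Optional.of(chosen);
    }
}
```

For example, with three nodes and promises reporting values accepted at ballots 4 and 7, the proposer must re-propose the value from ballot 7 regardless of what the client asked for.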

public class BasicPaxosNode implements Proposer, Acceptor, Learner, AutoCloseable {

private final int nodeId;

private final AtomicInteger ballot = new AtomicInteger(0);

private volatile Object proposalValue = null;

private final ReadWriteLock rwLock = new ReentrantReadWriteLock();

private volatile int acceptedBallot = 0;

private volatile Object acceptedValue = null;

private final int totalNodes;

private final NetworkClient networkClient;

private final Logger logger = LoggerFactory.getLogger(BasicPaxosNode.class);

private final RetryStrategy retryStrategy;

private final MetricsCollector metrics;

public BasicPaxosNode(int nodeId, int totalNodes, NetworkClient networkClient) {

this.nodeId = nodeId;

this.totalNodes = totalNodes;

this.networkClient = networkClient;

this.retryStrategy = new ExponentialBackoffRetry(100, 5000, 3);

this.metrics = new MetricsCollector("paxos_basic", nodeId);

}

// Proposer: prepare phase

@Override

public CompletableFuture<Boolean> prepare(int suggestedBallot) {

final int newBallot = suggestedBallot > 0 ? suggestedBallot : generateNewBallot();

final Stopwatch stopwatch = Stopwatch.createStarted();

MDC.put("component", "paxos-proposer");

MDC.put("nodeId", String.valueOf(nodeId));

MDC.put("ballot", String.valueOf(newBallot));

logger.info("Starting prepare phase with ballot {}", newBallot);

CompletableFuture<Boolean> result = new CompletableFuture<>();

CompletableFuture.runAsync(() -> {

try {

// Send a Prepare request to every Acceptor

List<CompletableFuture<Promise>> futures = sendPrepare(newBallot);

// Collect the results

List<Promise> promises = new ArrayList<>();

for (CompletableFuture<Promise> future : futures) {

try {

Promise promise = future.get(3, TimeUnit.SECONDS);

if (promise != null) {

promises.add(promise);

}

} catch (Exception e) {

logger.warn("Error getting prepare response", e);

}

}

// Proceed only if a majority promised

int quorum = getQuorum();

int okCount = (int) promises.stream().filter(Promise::isOk).count();

if (okCount >= quorum) {

// Update the ballot

ballot.updateAndGet(current -> Math.max(current, newBallot));

// Pick the value of the highest-numbered proposal already accepted

Promise highestPromise = selectHighestBallotPromise(promises);

rwLock.writeLock().lock();

try {

if (highestPromise != null && highestPromise.getAcceptedValue() != null) {

proposalValue = highestPromise.getAcceptedValue();

logger.info("Using previously accepted value: {}", proposalValue);

}

} finally {

rwLock.writeLock().unlock();

}

metrics.recordPrepareSuccess(stopwatch.elapsed(TimeUnit.MILLISECONDS));

result.complete(true);

} else {

logger.info("Failed to get quorum in prepare phase: {} of {} responses ok",

okCount, promises.size());

metrics.recordPrepareFailed();

result.complete(false);

}

} catch (Exception e) {

logger.error("Error in prepare phase", e);

metrics.recordPrepareFailed();

result.completeExceptionally(e);

} finally {

MDC.remove("component");

MDC.remove("nodeId");

MDC.remove("ballot");

}

});

return result;

}

// Proposer: accept phase

@Override

public CompletableFuture<Boolean> propose(int ballot, Object value) {

final Stopwatch stopwatch = Stopwatch.createStarted();

MDC.put("component", "paxos-proposer");

MDC.put("nodeId", String.valueOf(nodeId));

MDC.put("ballot", String.valueOf(ballot));

return prepare(ballot).thenCompose(prepared -> {

if (!prepared) {

logger.info("Prepare phase failed, cannot proceed to propose");

metrics.recordProposeFailed();

return CompletableFuture.completedFuture(false);

}

// Determine the value to propose

final Object valueToPropose;

rwLock.readLock().lock();

try {

// If the prepare phase found no accepted value, use the proposer's own value

valueToPropose = proposalValue != null ? proposalValue : value;

logger.info("Starting accept phase with ballot {} and value {}",

ballot, valueToPropose);

} finally {

rwLock.readLock().unlock();

}

return CompletableFuture.supplyAsync(() -> {

try {

// Send an Accept request to every Acceptor

List<CompletableFuture<Accepted>> futures = sendAccept(ballot, valueToPropose);

// Collect the results

List<Accepted> responses = new ArrayList<>();

for (CompletableFuture<Accepted> future : futures) {

try {

Accepted accepted = future.get(3, TimeUnit.SECONDS);

if (accepted != null) {

responses.add(accepted);

}

} catch (Exception e) {

logger.warn("Error getting accept response", e);

}

}

// The value is chosen only if a majority accepted it

int quorum = getQuorum();

int accepted = (int) responses.stream().filter(Accepted::isOk).count();

boolean success = accepted >= quorum;

if (success) {

logger.info("Value {} has been accepted by the majority ({} of {})",

valueToPropose, accepted, responses.size());

// Notify all Learners (Basic Paxos decides a single instance, so the instance ID is fixed at 1)

broadcastToLearners(1, ballot, valueToPropose);

metrics.recordProposeSuccess(stopwatch.elapsed(TimeUnit.MILLISECONDS));

} else {

logger.info("Failed to get quorum in accept phase: {} of {} responses ok",

accepted, responses.size());

metrics.recordProposeFailed();

}

return success;

} catch (Exception e) {

logger.error("Error in propose phase", e);

metrics.recordProposeFailed();

throw new CompletionException(e);

} finally {

MDC.remove("component");

MDC.remove("nodeId");

MDC.remove("ballot");

}

});

}).exceptionally(e -> {

logger.error("Failed to propose value", e);

metrics.recordProposeFailed();

return false;

});

}

// Acceptor: handle a Prepare request

@Override

public CompletableFuture<Promise> handlePrepare(int proposalBallot) {

MDC.put("component", "paxos-acceptor");

MDC.put("nodeId", String.valueOf(nodeId));

MDC.put("ballot", String.valueOf(proposalBallot));

return CompletableFuture.supplyAsync(() -> {

Promise promise = new Promise();

rwLock.writeLock().lock();

try {

if (proposalBallot > acceptedBallot) {

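// Promise to reject proposals numbered below proposalBallot.
// NOTE: acceptedBallot doubles as the promised ballot in this listing, so the ballot
// reported in the Promise is the one just promised, not the ballot at which
// acceptedValue was accepted. A complete acceptor tracks the two separately.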

acceptedBallot = proposalBallot;

promise.setOk(true);

promise.setAcceptedBallot(this.acceptedBallot);

promise.setAcceptedValue(this.acceptedValue);

logger.info("Acceptor {} promised ballot {}", nodeId, proposalBallot);

metrics.recordPromiseMade();

} else {

promise.setOk(false);

logger.info("Acceptor {} rejected ballot {}, current ballot: {}",

nodeId, proposalBallot, acceptedBallot);

metrics.recordPromiseRejected();

}

return promise;

} finally {

rwLock.writeLock().unlock();

MDC.remove("component");

MDC.remove("nodeId");

MDC.remove("ballot");

}

});

}

// Acceptor: handle an Accept request

@Override

public CompletableFuture<Accepted> handleAccept(int proposalBallot, Object proposalValue) {

MDC.put("component", "paxos-acceptor");

MDC.put("nodeId", String.valueOf(nodeId));

MDC.put("ballot", String.valueOf(proposalBallot));

return CompletableFuture.supplyAsync(() -> {

Accepted accepted = new Accepted();

rwLock.writeLock().lock();

try {

if (proposalBallot >= acceptedBallot) {

acceptedBallot = proposalBallot;

acceptedValue = proposalValue;

accepted.setOk(true);

logger.info("Acceptor {} accepted ballot {} with value {}",

nodeId, proposalBallot, proposalValue);

metrics.recordAcceptMade();

} else {

accepted.setOk(false);

logger.info("Acceptor {} rejected accept for ballot {}, current ballot: {}",

nodeId, proposalBallot, acceptedBallot);

metrics.recordAcceptRejected();

}

return accepted;

} finally {

rwLock.writeLock().unlock();

MDC.remove("component");

MDC.remove("nodeId");

MDC.remove("ballot");

}

});

}

// Learner: learn a chosen value

@Override

public void learn(long instanceId, int ballot, Object value) {

MDC.put("component", "paxos-learner");

MDC.put("nodeId", String.valueOf(nodeId));

MDC.put("instanceId", String.valueOf(instanceId));

MDC.put("ballot", String.valueOf(ballot));

try {

logger.info("Learner {} learned value {} for instance {} with ballot {}",

nodeId, value, instanceId, ballot);

metrics.recordLearnReceived();

// A real implementation would apply the learned value to the state machine here

// applyToStateMachine(instanceId, value);

} finally {

MDC.remove("component");

MDC.remove("nodeId");

MDC.remove("instanceId");

MDC.remove("ballot");

}

}

// Send a Prepare request to every Acceptor

private List<CompletableFuture<Promise>> sendPrepare(int newBallot) {

List<CompletableFuture<Promise>> futures = new ArrayList<>();

for (int i = 0; i < totalNodes; i++) {

final int targetNodeId = i;

if (targetNodeId == this.nodeId) {

// Handle the request locally

futures.add(handlePrepare(newBallot));

} else {

// Send the request to a remote node

futures.add(networkClient.sendPrepare(targetNodeId, newBallot)

.exceptionally(e -> {

logger.error("Failed to send prepare to node {}", targetNodeId, e);

return null;

}));

}

}

return futures;

}

// Send an Accept request to every Acceptor

private List<CompletableFuture<Accepted>> sendAccept(int ballot, Object value) {

List<CompletableFuture<Accepted>> futures = new ArrayList<>();

for (int i = 0; i < totalNodes; i++) {

final int targetNodeId = i;

if (targetNodeId == this.nodeId) {

// Handle the request locally

futures.add(handleAccept(ballot, value));

} else {

// Send the request to a remote node

futures.add(networkClient.sendAccept(targetNodeId, ballot, value)

.exceptionally(e -> {

logger.error("Failed to send accept to node {}", targetNodeId, e);

return null;

}));

}

}

return futures;

}

// Notify every Learner of the chosen value

private void broadcastToLearners(long instanceId, int ballot, Object value) {

for (int i = 0; i < totalNodes; i++) {

final int targetNodeId = i;

if (targetNodeId == this.nodeId) {

// Learn locally

learn(instanceId, ballot, value);

} else {

// Notify the other Learners asynchronously

CompletableFuture.runAsync(() -> {

try {

networkClient.sendLearn(targetNodeId, instanceId, ballot, value);

} catch (Exception e) {

logger.error("Failed to notify learner {}", targetNodeId, e);

}

});

}

}

}

// Select the Promise with the highest accepted ballot

private Promise selectHighestBallotPromise(List<Promise> promises) {

return promises.stream()

.filter(p -> p.isOk() && p.getAcceptedValue() != null)

.max(Comparator.comparingInt(Promise::getAcceptedBallot))

.orElse(null);

}

// Generate a proposal number larger than the current one (the node ID keeps it unique)

private int generateNewBallot() {

// The new ballot exceeds earlier ones and is unique across nodes

return ballot.incrementAndGet() * totalNodes + nodeId;

}

// Majority (quorum) size

private int getQuorum() {

return totalNodes / 2 + 1;

}

@Override

public void close() {

// Release resources

metrics.close();

}

// Promise (reply to a Prepare request)

public static class Promise {

private boolean ok;

private int acceptedBallot;

private Object acceptedValue;

public boolean isOk() {

return ok;

}

public void setOk(boolean ok) {

this.ok = ok;

}

public int getAcceptedBallot() {

return acceptedBallot;

}

public void setAcceptedBallot(int acceptedBallot) {

this.acceptedBallot = acceptedBallot;

}

public Object getAcceptedValue() {

return acceptedValue;

}

public void setAcceptedValue(Object acceptedValue) {

this.acceptedValue = acceptedValue;

}

}

// Accepted (reply to an Accept request)

public static class Accepted {

private boolean ok;

public boolean isOk() {

return ok;

}

public void setOk(boolean ok) {

this.ok = ok;

}

}

// Metrics collector

private static class MetricsCollector implements AutoCloseable {

// Metric definitions...

public MetricsCollector(String prefix, int nodeId) {

// Initialize metrics...

}

public void recordPrepareSuccess(long latencyMs) {

// Record a successful prepare phase

}

public void recordPrepareFailed() {

// Record a failed prepare phase

}

public void recordProposeSuccess(long latencyMs) {

// Record a successful propose phase

}

public void recordProposeFailed() {

// Record a failed propose phase

}

public void recordPromiseMade() {

// Count promises made

}

public void recordPromiseRejected() {

// Count promises rejected

}

public void recordAcceptMade() {

// Count accepts made

}

public void recordAcceptRejected() {

// Count accepts rejected

}

public void recordLearnReceived() {

// Count learn messages received

}

@Override

public void close() {

// Clean up resources

}

}

// Exception handling and retry strategy

interface RetryStrategy {

<T> CompletableFuture<T> retry(Supplier<CompletableFuture<T>> action);

}

// Exponential backoff retry strategy

static class ExponentialBackoffRetry implements RetryStrategy {

private final long initialBackoffMs;

private final long maxBackoffMs;

private final int maxRetries;

private final Logger logger = LoggerFactory.getLogger(ExponentialBackoffRetry.class);

public ExponentialBackoffRetry(long initialBackoffMs, long maxBackoffMs, int maxRetries) {

this.initialBackoffMs = initialBackoffMs;

this.maxBackoffMs = maxBackoffMs;

this.maxRetries = maxRetries;

}

@Override

public <T> CompletableFuture<T> retry(Supplier<CompletableFuture<T>> action) {

return retryInternal(action, 0);

}

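private <T> CompletableFuture<T> retryInternal(Supplier<CompletableFuture<T>> action,

int attempt) {

CompletableFuture<T> result = new CompletableFuture<>();

action.get().whenComplete((value, e) -> {

if (e == null) {

result.complete(value);

return;

}

if (attempt >= maxRetries) {

result.completeExceptionally(

new RetryExhaustedException("Max retries exceeded", e));

return;

}

// Exponential backoff capped at maxBackoffMs, plus up to 20% random jitter

long backoff = Math.min(initialBackoffMs * (long)Math.pow(2, attempt), maxBackoffMs);

// The "+ 1" keeps the bound positive even when backoff is below 5 ms

backoff += ThreadLocalRandom.current().nextLong(backoff / 5 + 1);

logger.info("Retry attempt {} after {}ms due to: {}",

attempt + 1, backoff, e.getMessage());

// Schedule the next attempt on a delayed executor instead of blocking this thread

CompletableFuture.delayedExecutor(backoff, TimeUnit.MILLISECONDS)

.execute(() -> retryInternal(action, attempt + 1).whenComplete((v, ex) -> {

if (ex == null) result.complete(v);

else result.completeExceptionally(ex);

}));

});

return result;

}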

}

// Custom exception

public static class RetryExhaustedException extends RuntimeException {

public RetryExhaustedException(String message, Throwable cause) {

super(message, cause);

}

}

}

Multi-Paxos Implementation
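Before turning to the Multi-Paxos components, a brief aside on the basic Paxos class that closes just above. Its `generateNewBallot()` computes `counter * totalNodes + nodeId`, and `getQuorum()` computes `totalNodes / 2 + 1`. The standalone sketch below is a rough illustration, not part of the original class: `BallotDemo`, `nextBallot`, and `quorum` are hypothetical names, and it assumes node IDs run from 0 to `totalNodes - 1`, as the send loops above suggest. It checks that ballots grow on each node and never collide across nodes.

```java
import java.util.HashSet;
import java.util.Set;
import java.util.concurrent.atomic.AtomicInteger;

public class BallotDemo {

    // Same formula as generateNewBallot(): round counter scaled by cluster size, plus node ID.
    public static int nextBallot(AtomicInteger counter, int totalNodes, int nodeId) {
        return counter.incrementAndGet() * totalNodes + nodeId;
    }

    // Same formula as getQuorum(): the smallest strict majority.
    public static int quorum(int totalNodes) {
        return totalNodes / 2 + 1;
    }

    public static void main(String[] args) {
        int totalNodes = 3;
        Set<Integer> seen = new HashSet<>();

        for (int nodeId = 0; nodeId < totalNodes; nodeId++) {
            AtomicInteger counter = new AtomicInteger(0); // each node keeps its own counter
            int previous = -1;
            for (int round = 0; round < 4; round++) {
                int ballot = nextBallot(counter, totalNodes, nodeId);
                if (ballot <= previous) {
                    throw new AssertionError("ballot did not increase on node " + nodeId);
                }
                if (!seen.add(ballot)) {
                    throw new AssertionError("ballot " + ballot + " issued twice");
                }
                // ballot % totalNodes recovers the issuing node, which is why collisions cannot occur
                if (ballot % totalNodes != nodeId) {
                    throw new AssertionError("ballot does not encode its node ID");
                }
                previous = ballot;
            }
        }

        System.out.println("distinct ballots issued: " + seen.size());
        System.out.println("quorum(3)=" + quorum(3) + ", quorum(4)=" + quorum(4)
                + ", quorum(5)=" + quorum(5));
    }
}
```

Note that the scheme only orders ballots issued by the same node. A node that sees a higher ballot from a peer would presumably need to advance its counter past it before retrying. Because the ballot is an `int`, the multiplication also overflows once the counter exceeds `Integer.MAX_VALUE / totalNodes`; a `long` would postpone that.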

The following implementation structures Multi-Paxos as a set of components, separating concerns to make the code easier to maintain:

public class MultiPaxosSystem {

private final int nodeId;

private final Configuration config;

private final MultiPaxosLog log;

private final MultiPaxosStateMachine stateMachine;

private final MultiPaxosNetworking networking;

private final RoleManager roleManager;

private final ScheduledExecutorService scheduler;

private final Logger logger = LoggerFactory.getLogger(MultiPaxosSystem.class);

public MultiPaxosSystem(int nodeId, Configuration config) {

this.nodeId = nodeId;

this.config = config;

this.log = new MultiPaxosLog();

this.stateMachine = new MultiPaxosStateMachine();

this.networking = new MultiPaxosNetworking(nodeId, config.getNodes());

this.roleManager = new RoleManager(this);

this.scheduler = Executors.newScheduledThreadPool(2, r -> {

Thread t = new Thread(r, "multi-paxos-scheduler-" + nodeId);

t.setDaemon(true);

return t;

});

// Periodically apply committed log entries

scheduler.scheduleWithFixedDelay(this::applyCommittedLogs, 100, 100, TimeUnit.MILLISECONDS);

// Periodically check the Leader lease

scheduler.scheduleWithFixedDelay(this::checkLeaderLease, 1000, 1000, TimeUnit.MILLISECONDS);

}

// Client API

// Append a new log entry (write operation)

public CompletableFuture<Boolean> appendLog(byte[] command) {

if (!roleManager.isLeader()) {

return CompletableFuture.failedFuture(

new NotLeaderException("Not the leader", roleManager.getLeaderHint()));

}

return roleManager.getLeaderRole().appendLog(command);

}

// Read operation