01- What is KEDA?

KEDA (Kubernetes Event-driven Autoscaling) is a lightweight, open-source component that brings event-driven autoscaling to Kubernetes.

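The project was accepted by the CNCF in March 2020, entered incubation in August 2021, and graduated in August 2023. It is now mature and can be used in production with confidence.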

02- How KEDA works

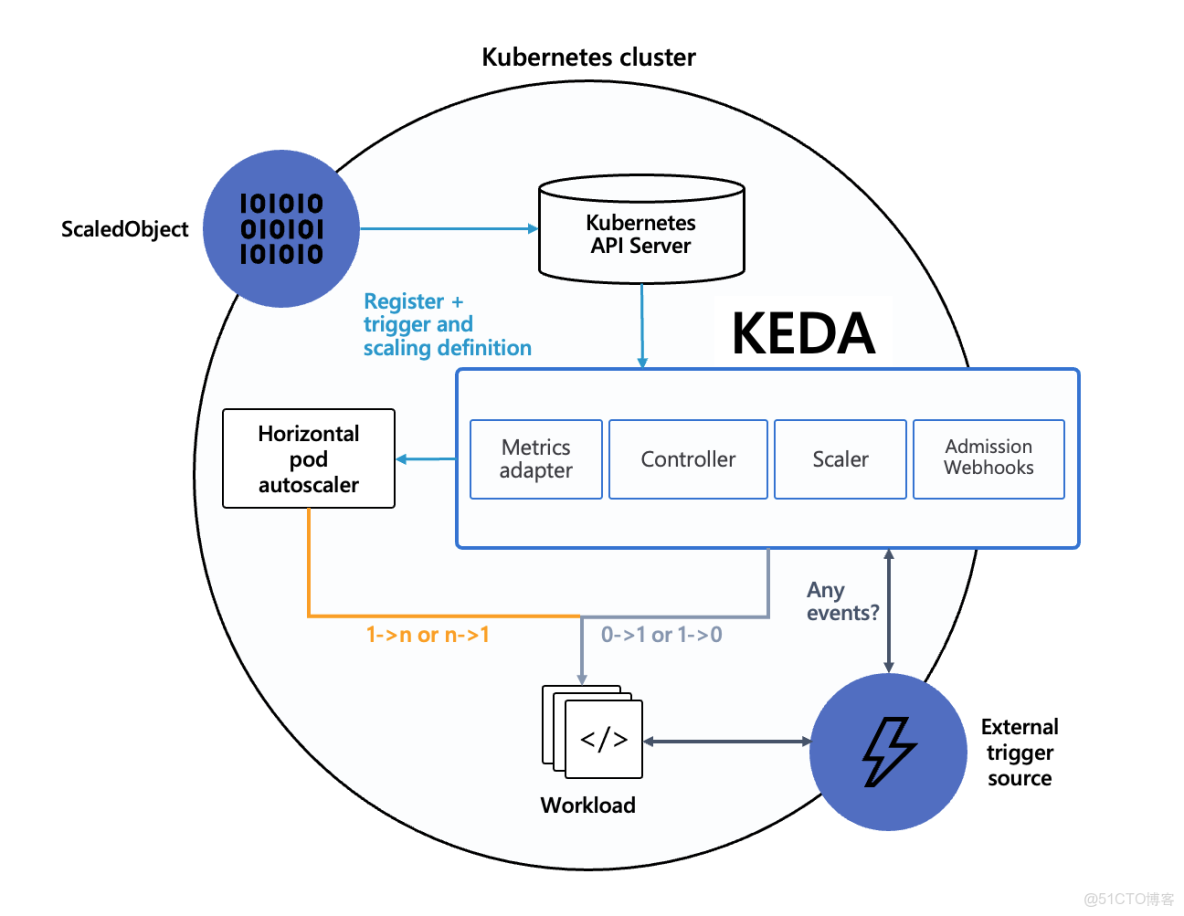

KEDA 並不是要替代 HPA,而是作為 HPA 的補充或者增強,事實上很多時候 KEDA 是配合 HPA 一起工作的,這是 KEDA 官方的架構圖:

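Out of the box, the native Kubernetes HPA scales mainly on CPU and memory utilization. Scaling on other metrics requires installing a separate metrics adapter.

The problem: many applications, especially message consumers, don't need to scale because CPU is high. They need to scale because a backlog of pending messages has built up.

KEDA's solution: KEDA lets Kubernetes adjust the number of Pods based on external events, such as Kafka consumer lag, Redis queue length, or Prometheus metrics.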

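03- Which scenarios suit KEDA?

Scenario 1: Message queues

Scale out when messages pile up in the queue, and scale back in once they have been processed. This applies to Kafka, RabbitMQ, RocketMQ, and similar systems.

For example, consider an order-processing service that normally runs a single Pod. During a big sales event, 100,000 messages pile up in the Kafka topic for new orders. KEDA detects the high consumer lag and immediately scales the service out to 50 Pods to speed up consumption.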
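The following is a minimal sketch of this scenario using KEDA's Kafka scaler. The Deployment `order-consumer`, the broker address, the consumer group `order-service` and the topic `new-orders` are hypothetical placeholders. `lagThreshold` is the target lag per replica, so a total lag of 100,000 with a threshold of 2,000 asks the HPA for 100,000 / 2,000 = 50 replicas, capped by `maxReplicaCount`.

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: order-consumer-scaler        # hypothetical name
spec:
  scaleTargetRef:
    name: order-consumer             # hypothetical Deployment (kind defaults to Deployment)
  minReplicaCount: 1                 # one Pod in normal times
  maxReplicaCount: 50                # upper bound during the sales peak
  triggers:
    - type: kafka
      metadata:
        bootstrapServers: kafka.kafka.svc:9092   # hypothetical broker address
        consumerGroup: order-service             # hypothetical consumer group
        topic: new-orders                        # hypothetical topic
        lagThreshold: "2000"                     # target lag per replica
```

By default the Kafka scaler does not scale beyond the topic's partition count, because extra consumers in the same group would sit idle. The topic would therefore need at least 50 partitions for 50 Pods to help.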

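Scenario 2: Custom business metrics

Low CPU does not mean low load. Sometimes you need to scale on business-level metrics, for example custom metrics collected by Prometheus.

For example, a database-writer service might scale out when SQL query latency exceeds 500 ms or when connection-pool utilization exceeds 80%.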
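Here is a sketch of this scenario with KEDA's Prometheus scaler. It makes a few assumptions:

- The service exports a latency histogram `db_write_duration_seconds`.
- It also exports connection-pool gauges `db_pool_active_connections` and `db_pool_max_connections`. All three metric names are hypothetical.
- Prometheus is reachable at the address shown, which is also hypothetical.

Two triggers express the "or" condition: the HPA computes a replica count for each metric and uses the largest. `metricType: Value` makes the HPA compare the query result directly with `threshold`, instead of dividing it by the replica count as the default `AverageValue` does. That suits ratios such as latency or pool utilization.

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: db-writer-scaler             # hypothetical name
spec:
  scaleTargetRef:
    name: db-writer                  # hypothetical Deployment
  minReplicaCount: 1
  maxReplicaCount: 10
  triggers:
    # Trigger 1: p99 write latency above 0.5 s (hypothetical histogram metric)
    - type: prometheus
      metricType: Value
      metadata:
        serverAddress: http://prometheus-server.monitoring.svc:9090   # hypothetical address
        query: |
          histogram_quantile(0.99,
            sum(rate(db_write_duration_seconds_bucket{app="db-writer"}[2m])) by (le))
        threshold: "0.5"
    # Trigger 2: connection-pool usage above 80% (hypothetical gauge metrics)
    - type: prometheus
      metricType: Value
      metadata:
        serverAddress: http://prometheus-server.monitoring.svc:9090
        query: |
          sum(db_pool_active_connections{app="db-writer"})
            / sum(db_pool_max_connections{app="db-writer"})
        threshold: "0.8"
```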

04- Deploy KEDA with Helm

```shell
helm repo add kedacore https://kedacore.github.io/charts
helm repo update

# Install
helm install keda kedacore/keda --namespace keda --create-namespace

# Verify
kubectl get pods -n keda
```

05- A quick hands-on with KEDA

The following "Hello KEDA" exercise is designed to:

- Understand how KEDA works

External event source → KEDA Scaler → Metrics API → HPA → Deployment → Pods

5.1 Prepare the manifest files

Prepare a working directory:

```shell
mkdir KEDA && cd KEDA
```

- Create the namespace

```shell
kubectl create ns keda-demo --dry-run=client -o yaml > keda-demo.yml
kubectl apply -f keda-demo.yml
```

http-app.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: http-app
  namespace: keda-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: http-app
  template:
    metadata:
      labels:
        app: http-app
    spec:
      containers:
        - name: http-app
          image: hashicorp/http-echo:1.0   # pin a version for reproducibility
          args:
            - "-text=Hello, KEDA!"
          ports:
            - containerPort: 5678
          resources:
            requests:
              cpu: 100m      # required: CPU utilization is computed relative to this request
              memory: 128Mi
            limits:
              cpu: 200m
              memory: 256Mi
---
apiVersion: v1
kind: Service
metadata:
  name: http-app-service
  namespace: keda-demo
spec:
  selector:
    app: http-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 5678
  type: ClusterIP
```

Deploy

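```shell
# Apply the manifests
kubectl apply -f http-app.yaml

# Verify the deployment
kubectl get pods -n keda-demo -l app=http-app
```

Test

```shell
kubectl run -it --rm debug --image=busybox:1.28 -n keda-demo -- sh

# Inside the debug container
wget -q -O- http://http-app-service
```

Expected output:

```text
Hello, KEDA!
```

Create the ScaledObject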

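Save the following as keda-scaledobject.yaml. The cpu trigger reads Pod CPU usage from the Kubernetes Metrics Server rather than from an external event source, so Metrics Server must be installed in the cluster.

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: http-app-scaler
  namespace: keda-demo
spec:
  # Workload to scale
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: http-app
  # Minimum and maximum replica counts
  minReplicaCount: 1
  maxReplicaCount: 5
  # Seconds to wait before scaling to 0; only used when minReplicaCount is 0,
  # so it has no effect here (N -> 1 scale-down is handled by the HPA)
  cooldownPeriod: 30
  pollingInterval: 5      # how often KEDA checks the triggers (seconds)
  # Trigger configuration
  triggers:
    - type: cpu
      metricType: Utilization   # scale on CPU utilization (% of the CPU request)
      metadata:
        value: "30"             # scale out when average CPU > 30%
```

```shell
kubectl apply -f keda-scaledobject.yaml

# Verify it was created
kubectl get scaledobject -n keda-demo
```

KEDA automatically creates a corresponding HorizontalPodAutoscaler.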
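KEDA translates the ScaledObject into an ordinary HPA. For this cpu trigger, the HPA should be roughly equivalent to the abridged sketch below. The real object carries extra labels and ownership metadata, so inspect it with `kubectl get hpa keda-hpa-http-app-scaler -n keda-demo -o yaml` rather than relying on this sketch. For event-source triggers such as Kafka, the metric entry would instead be of type `External`, served by KEDA's metrics API server.

```yaml
# Abridged sketch of the HPA generated for http-app-scaler (not the verbatim object)
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: keda-hpa-http-app-scaler   # keda-hpa-<ScaledObject name>
  namespace: keda-demo
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: http-app
  minReplicas: 1                   # from minReplicaCount
  maxReplicas: 5                   # from maxReplicaCount
  metrics:
    - type: Resource               # the cpu trigger maps to a standard resource metric
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 30   # from the trigger's value
```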

```shell
kubectl get hpa -n keda-demo
```

Monitor the process

```shell
# Watch the Pod count change in real time
kubectl get pods -n keda-demo -l app=http-app -w
```

```text
NAME                        READY   STATUS              RESTARTS   AGE
http-app-6d867c4494-zs8nn   1/1     Running             0          4m17s
http-app-6d867c4494-sdddz   0/1     Pending             0          0s
http-app-6d867c4494-sdddz   0/1     Pending             0          0s
http-app-6d867c4494-ws8tm   0/1     Pending             0          0s
http-app-6d867c4494-b2frp   0/1     Pending             0          0s
http-app-6d867c4494-ws8tm   0/1     Pending             0          0s
http-app-6d867c4494-b2frp   0/1     Pending             0          0s
http-app-6d867c4494-sdddz   0/1     ContainerCreating   0          0s
http-app-6d867c4494-ws8tm   0/1     ContainerCreating   0          0s
http-app-6d867c4494-b2frp   0/1     ContainerCreating   0          0s
http-app-6d867c4494-sdddz   1/1     Running             0          2s
http-app-6d867c4494-ws8tm   1/1     Running             0          2s
http-app-6d867c4494-b2frp   1/1     Running             0          2s
```
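The jump from one to four Pods is consistent with the HPA scaling rule in the Kubernetes documentation. As a worked check, take the load-test reading reported by `kubectl get hpa` (`cpu: 111%/30%` with one replica). The sample the HPA actually acted on may differ slightly.

$$
\text{desiredReplicas}
= \left\lceil \text{currentReplicas} \times \frac{\text{currentMetricValue}}{\text{desiredMetricValue}} \right\rceil
= \left\lceil 1 \times \frac{111}{30} \right\rceil
= \lceil 3.7 \rceil
= 4
$$

Four is within `maxReplicaCount: 5`, so no clamping applies. Had the ratio called for more than five replicas, the HPA would have stopped at five.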

```shell
# In another terminal, follow the KEDA operator logs
kubectl logs -n keda deployment/keda-operator -f
```

Generate load

```shell
kubectl run -i --tty load-generator --image=busybox:1.28 -n keda-demo -- sh

# Inside the container: send requests in a tight loop to drive up CPU usage
while true; do
  wget -q -O- http://http-app-service
done
```

In another terminal, check the HPA:

```text
[root@k8s-master ~]# kubectl get hpa -n keda-demo
NAME                       REFERENCE             TARGETS         MINPODS   MAXPODS   REPLICAS   AGE
keda-hpa-http-app-scaler   Deployment/http-app   cpu: 111%/30%   1         5         1          99s
```

Stop load generation

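Press Ctrl+C to stop the loop, then run:

```shell
# Exit the load-generator container
exit

# Delete the load-generator Pod
kubectl delete pod load-generator -n keda-demo
```

Wait about five minutes. Scaling from N back to 1 is handled by the HPA, whose default scale-down stabilization window is 300 seconds. The ScaledObject's `cooldownPeriod` only applies when scaling to zero, so it has no effect here.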

```text
NAME                        READY   STATUS        RESTARTS   AGE
http-app-6d867c4494-sdddz   1/1     Terminating   0          5m15s
http-app-6d867c4494-sdddz   0/1     Terminating   0          5m16s
http-app-6d867c4494-sdddz   0/1     Terminating   0          5m17s
http-app-6d867c4494-sdddz   0/1     Terminating   0          5m17s
http-app-6d867c4494-sdddz   0/1     Terminating   0          5m17s
http-app-6d867c4494-ws8tm   1/1     Terminating   0          5m31s
http-app-6d867c4494-b2frp   1/1     Terminating   0          5m31s
http-app-6d867c4494-ws8tm   0/1     Terminating   0          5m31s
http-app-6d867c4494-b2frp   0/1     Terminating   0          5m31s
http-app-6d867c4494-ws8tm   0/1     Terminating   0          5m32s
http-app-6d867c4494-ws8tm   0/1     Terminating   0          5m32s
http-app-6d867c4494-ws8tm   0/1     Terminating   0          5m32s
http-app-6d867c4494-b2frp   0/1     Terminating   0          5m32s
http-app-6d867c4494-b2frp   0/1     Terminating   0          5m32s
http-app-6d867c4494-b2frp   0/1     Terminating   0          5m32s
```
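The extra Pods were removed a little over five minutes after the scale-out. That timing is consistent with the HPA's default 300-second scale-down stabilization window, not with the 30-second `cooldownPeriod`, which only governs scaling to and from zero.

For a demo, you could shorten the window through KEDA's `advanced.horizontalPodAutoscalerConfig` field. The 60-second value below is an arbitrary illustration. In production, a short window makes the replica count flap more when load fluctuates.

```yaml
# Fragment to merge into keda-scaledobject.yaml under spec (other fields unchanged)
spec:
  advanced:
    horizontalPodAutoscalerConfig:
      behavior:
        scaleDown:
          stabilizationWindowSeconds: 60   # HPA default is 300 seconds
```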