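1. Deploy the KubeDNS add-on

The official manifest uses the following images:

kube-dns ---- watches Services, Pods and other resources and dynamically updates DNS records

sidecar ---- handles monitoring and health checks

dnsmasq ---- caches DNS responses; DNS metrics can also be collected from it

Project repository:

https://github.com/kubernetes/dns

Official YAML directory: kubernetes/cluster/addons/dns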

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dns

Predefined system ClusterRoleBinding

The predefined ClusterRoleBinding system:kube-dns binds the kube-dns ServiceAccount in the kube-system namespace to the system:kube-dns ClusterRole, which grants access to the DNS-related kube-apiserver APIs.

# kubectl get clusterrolebindings system:kube-dns -o yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  creationTimestamp: 2017-10-31T10:30:29Z
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-dns
  resourceVersion: "77"
  selfLink: /apis/rbac.authorization.k8s.io/v1/clusterrolebindings/system%3Akube-dns
  uid: 8483eb4f-be26-11e7-853b-000c297aff5d
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-dns
subjects:
- kind: ServiceAccount
  name: kube-dns
  namespace: kube-system

Download the kube-dns YAML file

# mkdir dns && cd dns

# curl -O https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/dns/kube-dns.yaml.base

Rename the file (drop the .base suffix)

# cp kube-dns.yaml.base kube-dns.yaml

Replace all image references and fill in the template placeholders

# sed -i 's/gcr.io\/google_containers/192.168.100.100\/k8s/g' kube-dns.yaml

# sed -i "s/__PILLAR__DNS__SERVER__/10.254.0.2/g" kube-dns.yaml

# sed -i "s/__PILLAR__DNS__DOMAIN__/cluster.local/g" kube-dns.yaml

# diff kube-dns.yaml kube-dns.yaml.base

33c33

< clusterIP: 10.254.0.2

---

> clusterIP: __PILLAR__DNS__SERVER__

97c97

< image: 192.168.100.100/k8s/k8s-dns-kube-dns-amd64:1.14.5

---

> image: gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.7

127,128c127

< - --domain=cluster.local.

< - --kube-master-url=http://192.168.100.102:8080

---

> - --domain=__PILLAR__DNS__DOMAIN__.

149c148

< image: 192.168.100.100/k8s/k8s-dns-dnsmasq-nanny-amd64:1.14.5

---

> image: gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.7

169c168

< - --server=/cluster.local/127.0.0.1#10053

---

> - --server=/__PILLAR__DNS__DOMAIN__/127.0.0.1#10053

188c187

< image: 192.168.100.100/k8s/k8s-dns-sidecar-amd64:1.14.5

---

> image: gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.7

201,202c200,201

< - --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local,5,A

< - --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local,5,A

---

> - --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.__PILLAR__DNS__DOMAIN__,5,SRV

> - --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.__PILLAR__DNS__DOMAIN__,5,SRV

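Notes:

I replaced the images with ones from my own private registry. To deploy a private registry, see the Harbor registry deployment guide.

The images can also be downloaded from: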

hub.c.163.com/zhijiansd/k8s-dns-kube-dns-amd64:1.14.7

hub.c.163.com/zhijiansd/k8s-dns-sidecar-amd64:1.14.7

hub.c.163.com/zhijiansd/k8s-dns-dnsmasq-nanny-amd64:1.14.7

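When I deployed kube-dns I used version 1.14.5, for which the probe record type has to be changed from SRV to A (no change is needed with 1.14.7). The diff above also shows two manual edits that the sed commands do not make: the image tags were set to 1.14.5, and --kube-master-url was added.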
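The placeholder substitution can be wrapped in a small function so that the DNS service IP and the cluster domain are passed as parameters. This is only a minimal sketch: the function name `render_kube_dns` is hypothetical, and the two-line sample file is a stand-in for kube-dns.yaml.base, used here just to show the substitution.

```shell
#!/bin/sh
# render_kube_dns TEMPLATE DNS_SERVER_IP DNS_DOMAIN
# Prints the template with the two __PILLAR__ placeholders filled in.
# (Hypothetical helper; it mirrors the sed commands used in this section.)
render_kube_dns() {
    sed -e "s/__PILLAR__DNS__SERVER__/$2/g" \
        -e "s/__PILLAR__DNS__DOMAIN__/$3/g" "$1"
}

# Tiny stand-in for kube-dns.yaml.base, for demonstration only.
sample="$(mktemp)"
printf '%s\n' \
    'clusterIP: __PILLAR__DNS__SERVER__' \
    '- --domain=__PILLAR__DNS__DOMAIN__.' > "$sample"

rendered="$(render_kube_dns "$sample" 10.254.0.2 cluster.local)"
printf '%s\n' "$rendered"
rm -f "$sample"
```

Against the real template, the call would look like `render_kube_dns kube-dns.yaml.base 10.254.0.2 cluster.local > kube-dns.yaml`; writing to a new file keeps the .base template untouched for later diffs.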

Apply the file

# kubectl create -f kube-dns.yaml

service "kube-dns" created

serviceaccount "kube-dns" created

configmap "kube-dns" created

deployment "kube-dns" created

Check the KubeDNS service

# kubectl get pods -n kube-system ### list pods in kube-system

NAME READY STATUS RESTARTS AGE

kube-dns-7c7674cf68-lcgvc 3/3 Running 0 5m

# kubectl get all --namespace=kube-system

# kubectl get all -n kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-dns 1 1 1 1 7m

NAME DESIRED CURRENT READY AGE

rs/kube-dns-7c7674cf68 1 1 1 7m

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-dns 1 1 1 1 7m

NAME DESIRED CURRENT READY AGE

rs/kube-dns-7c7674cf68 1 1 1 7m

NAME READY STATUS RESTARTS AGE

po/kube-dns-7c7674cf68-lcgvc 3/3 Running 0 7m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kube-dns ClusterIP 10.254.0.2 <none> 53/UDP,53/TCP 7m

# kubectl cluster-info ### show cluster info

Kubernetes master is running at https://192.168.100.102:6443

KubeDNS is running at https://192.168.100.102:6443/api/v1/namespaces/kube-system/services/kube-dns/proxy

# kubectl get services --all-namespaces |grep dns ### list cluster services

kube-system kube-dns ClusterIP 10.254.0.2 <none> 53/UDP,53/TCP 8m

# kubectl get services -n kube-system |grep dns

kube-dns ClusterIP 10.254.0.2 <none> 53/UDP,53/TCP 9m

View the KubeDNS container logs (use these commands to troubleshoot if a kube-dns pod fails to start or reports errors)

# kubectl logs --namespace=kube-system $(kubectl get pods --namespace=kube-system -l k8s-app=kube-dns -o name) -c kubedns

# kubectl logs --namespace=kube-system $(kubectl get pods --namespace=kube-system -l k8s-app=kube-dns -o name) -c dnsmasq

# kubectl logs --namespace=kube-system $(kubectl get pods --namespace=kube-system -l k8s-app=kube-dns -o name) -c sidecar
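The three log commands differ only in the container name, so they can be folded into a loop. The sketch below is one way to do that, under a few assumptions: the function name `kube_dns_logs` and the `KUBECTL` override variable are inventions of this example (not kubectl features), `--tail=20` is an arbitrary choice, and pods are selected with `-l` directly on `kubectl logs` instead of the nested `kubectl get pods`.

```shell
#!/bin/sh
# kube_dns_logs: print recent logs of every container in the kube-dns pod(s).
# Set KUBECTL=echo for a dry run that only prints the kubectl arguments
# (the KUBECTL variable is an assumption of this sketch).
kube_dns_logs() {
    kubectl_bin="${KUBECTL:-kubectl}"
    for container in kubedns dnsmasq sidecar; do
        echo "=== $container ==="
        "$kubectl_bin" logs --namespace=kube-system \
            -l k8s-app=kube-dns -c "$container" --tail=20
    done
}
```

Run `kube_dns_logs` against the cluster, or `KUBECTL=echo kube_dns_logs` to preview the commands without contacting the API server.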

Verify that kube-dns works

a. Write the YAML file

# vim my-nginx.yaml

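apiVersion: extensions/v1beta1 ### API version
kind: Deployment ### kind of resource to create
metadata: ### resource metadata
  name: my-nginx ### resource name; must be unique within a namespace
spec: ### detailed specification of the resource
  replicas: 1 ### number of pod replicas
  template: ### pod template; every pod of the Deployment is created from it
    metadata: ### pod metadata
      labels: ### labels applied to the pods
        run: my-nginx ### labels are key/value pairs
    spec:
      containers: ### containers in the pod
      - name: my-nginx ### container name
        image: 192.168.100.100/library/nginx:1.13.0 ### container image
        ports: ### list of container ports
        - containerPort: 80 ### port the container exposes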

b. Apply the file and check the pod

# kubectl create -f my-nginx.yaml

deployment "my-nginx" created

# kubectl get pod

NAME READY STATUS RESTARTS AGE

my-nginx-7bd7b4dbf-kkbrb 1/1 Running 0 21s

c. Create the Service

# kubectl expose deployment my-nginx --type=NodePort --name=my-nginx

service "my-nginx" exposed

# kubectl describe svc my-nginx |grep NodePort

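d. Test the kube-dns service (what matters is that each name resolves to its ClusterIP; the ping replies themselves are lost because a ClusterIP is a virtual address that typically does not answer ICMP)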

# kubectl exec -it my-nginx-7bd7b4dbf-kkbrb -- /bin/bash

root@my-nginx-7bd7b4dbf-kkbrb:/# cat /etc/resolv.conf

nameserver 10.254.0.2

search default.svc.cluster.local. svc.cluster.local. cluster.local. localdomain

options ndots:5

root@my-nginx-7bd7b4dbf-kkbrb:/# ping -c 1 my-nginx

PING my-nginx.default.svc.cluster.local (10.254.35.229): 56 data bytes

--- my-nginx.default.svc.cluster.local ping statistics ---

1 packets transmitted, 0 packets received, 100% packet loss

root@my-nginx-7bd7b4dbf-kkbrb:/# ping -c 1 kube-dns.kube-system.svc.cluster.local

PING kube-dns.kube-system.svc.cluster.local (10.254.0.2): 56 data bytes

--- kube-dns.kube-system.svc.cluster.local ping statistics ---

1 packets transmitted, 0 packets received, 100% packet loss

root@my-nginx-7bd7b4dbf-kkbrb:/# ping -c 1 kubernetes

PING kubernetes.default.svc.cluster.local (10.254.0.1): 56 data bytes

--- kubernetes.default.svc.cluster.local ping statistics ---

1 packets transmitted, 0 packets received, 100% packet loss
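A lookup that does not depend on ICMP can make the result easier to read. The sketch below resolves names from inside a pod with `getent hosts`. It assumes the container image ships `getent` (glibc-based images usually do); the function name `resolve_in_pod` and the `KUBECTL` override variable are hypothetical and exist only in this example.

```shell
#!/bin/sh
# resolve_in_pod POD NAME...: resolve each NAME from inside POD using getent.
# Returns non-zero as soon as one name fails to resolve.
# KUBECTL=echo gives a dry run (an assumption of this sketch, not a kubectl feature).
resolve_in_pod() {
    pod="$1"; shift
    for name in "$@"; do
        "${KUBECTL:-kubectl}" exec "$pod" -- getent hosts "$name" || return 1
    done
}
```

For the pod used above, the call would be `resolve_in_pod my-nginx-7bd7b4dbf-kkbrb my-nginx kubernetes kube-dns.kube-system.svc.cluster.local`.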

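2. Deploy Heapster

Heapster is a container cluster monitoring and performance analysis tool with native support for Kubernetes and CoreOS.

Every Kubernetes node runs cAdvisor, the Kubernetes monitoring agent, which collects metrics for the host and its containers (CPU, memory, filesystem, network, uptime).

cAdvisor web UI: http://<Node-IP>:4194

Heapster is an aggregator: it gathers the cAdvisor data from every node and exports it to a third-party backend such as InfluxDB.

The stack is heapster + influxdb + grafana: heapster collects the metrics, influxdb stores them, and grafana displays them.

The official manifests use the following images: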

heapster

heapster-grafana

heapster-influxdb

Official manifests:

https://github.com/kubernetes/heapster/tree/master/deploy/kube-config/

Download Heapster

# wget https://codeload.github.com/kubernetes/heapster/tar.gz/v1.5.0-beta.0 -O heapster-1.5.0-beta.tar.gz

# tar -zxvf heapster-1.5.0-beta.tar.gz

# cd heapster-1.5.0-beta.0/deploy/kube-config

# cp rbac/heapster-rbac.yaml influxdb/

# cd influxdb/

# ls

grafana.yaml heapster-rbac.yaml heapster.yaml influxdb.yaml

Replace the image registry and apply the files

# sed -i 's/gcr.io\/google_containers/192.168.100.100\/k8s/g' *.yaml

# kubectl create -f .

deployment "monitoring-grafana" created

service "monitoring-grafana" created

clusterrolebinding "heapster" created

serviceaccount "heapster" created

deployment "heapster" created

service "heapster" created

deployment "monitoring-influxdb" created

service "monitoring-influxdb" created

The images needed for Heapster can be downloaded from:

hub.c.163.com/zhijiansd/heapster-amd64:v1.4.0

hub.c.163.com/zhijiansd/heapster-grafana-amd64:v4.4.3

hub.c.163.com/zhijiansd/heapster-influxdb-amd64:v1.3.3

Check the result

# kubectl get deployments -n kube-system | grep -E 'heapster|monitoring'

heapster 1 1 1 1 1m

monitoring-grafana 1 1 1 1 1m

monitoring-influxdb 1 1 1 1 1m

Check the pods

# kubectl get pods -n kube-system | grep -E 'heapster|monitoring' ### list pods

heapster-d7f5dc5bf-k2c5v 1/1 Running 0 2m

monitoring-grafana-98d44cd67-nfmmt 1/1 Running 0 2m

monitoring-influxdb-6b6d749d9c-6q99p 1/1 Running 0 2m

# kubectl get svc -n kube-system | grep -E 'heapster|monitoring' ### list services

heapster ClusterIP 10.254.198.254 <none> 80/TCP 2m

monitoring-grafana ClusterIP 10.254.73.182 <none> 80/TCP 2m

monitoring-influxdb ClusterIP 10.254.143.75 <none> 8086/TCP 2m

# kubectl cluster-info ### show cluster info

Kubernetes master is running at https://192.168.100.102:6443

Heapster is running at https://192.168.100.102:6443/api/v1/namespaces/kube-system/services/heapster/proxy

KubeDNS is running at https://192.168.100.102:6443/api/v1/namespaces/kube-system/services/kube-dns/proxy

monitoring-grafana is running at https://192.168.100.102:6443/api/v1/namespaces/kube-system/services/monitoring-grafana/proxy

monitoring-influxdb is running at https://192.168.100.102:6443/api/v1/namespaces/kube-system/services/monitoring-influxdb/proxy

Open Grafana in a browser:

https://master:6443/api/v1/namespaces/kube-system/services/monitoring-grafana/proxy

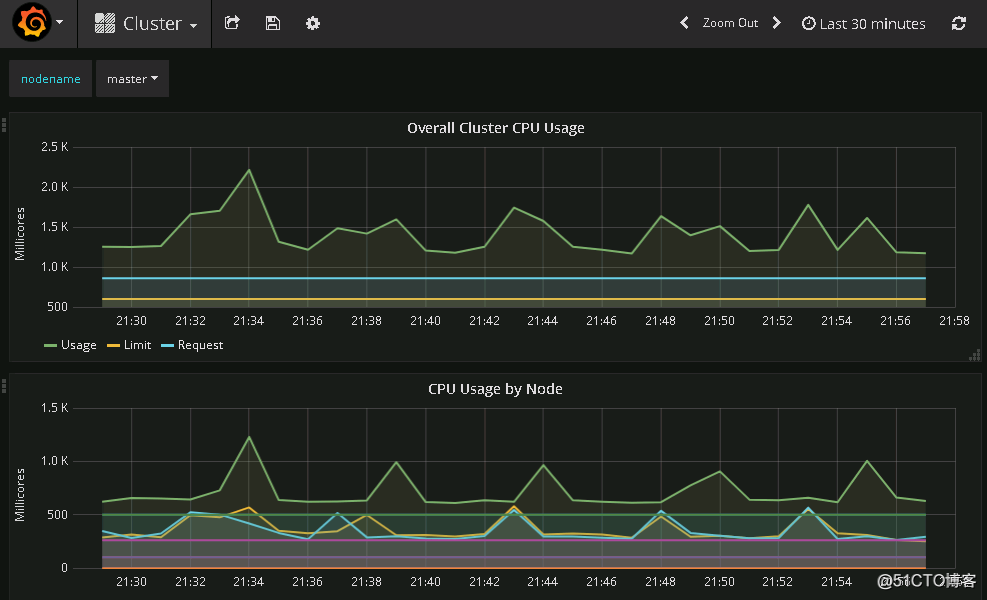

Monitoring data for the cluster nodes ====>

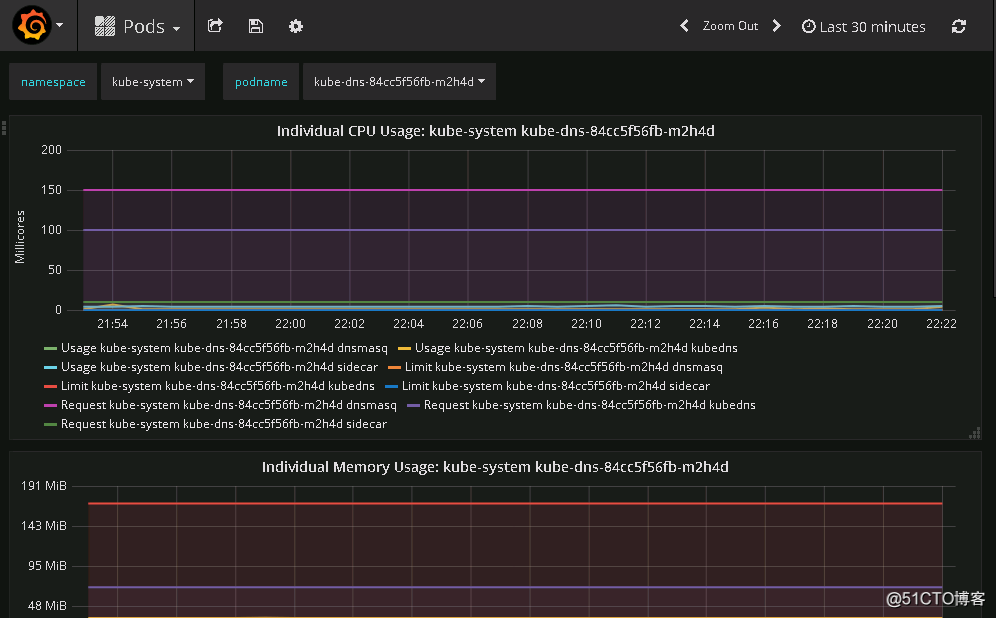

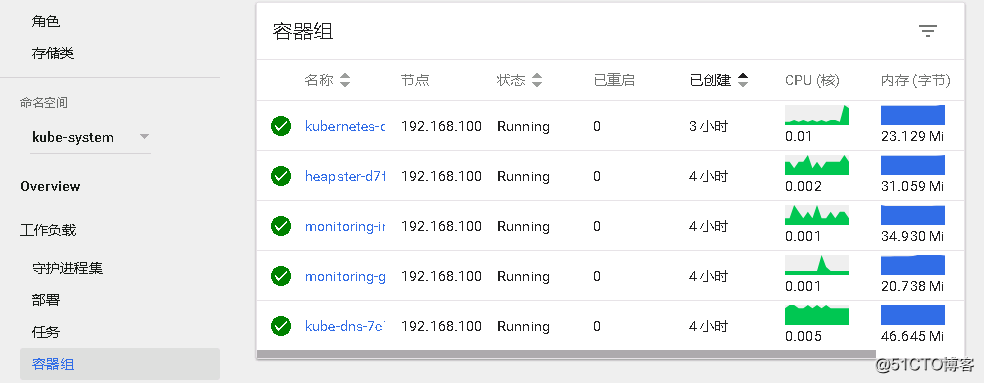

Monitoring data for pods ===>

Deploy Kubernetes Dashboard

Official manifests:

https://github.com/kubernetes/dashboard/tree/master/src/deploy/

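The explanation of access control below is my own translation; if anything is unclear, refer to the wiki page linked below.

Starting with kubernetes-dashboard 1.7.0, only minimal privileges are granted by default.

Authorization is handled by the Kubernetes API server. Dashboard only acts as a proxy and passes all auth information to it. If access is forbidden, a corresponding warning is shown in Dashboard.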

WiKi: https://github.com/kubernetes/dashboard/wiki

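Default Dashboard privileges:

1. create permission on secrets in the kube-system namespace, needed to create the kubernetes-dashboard-key-holder secret

2. get, update and delete permissions on the secrets named kubernetes-dashboard-key-holder and kubernetes-dashboard-certs in the kube-system namespace

3. get and update permissions on the ConfigMap named kubernetes-dashboard-settings in the kube-system namespace

4. proxy permission, to allow fetching data from heapster

Authentication

Dashboard supports login based on: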

1.Authorization: Bearer <token>

2.Bearer Token

3.Username/password

4.Kubeconfig

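Login

The login view was introduced in release 1.7. For it to appear, Dashboard must be enabled and accessed over HTTPS. To enable HTTPS, pass the --tls-cert-file and --tls-key-file flags to Dashboard. The HTTPS endpoint is exposed on port 8443 of the Dashboard container; this can be changed with --port.

Using the Skip option makes Dashboard use the privileges of its Service Account.

Authorization

a. Authorization header: this is the only way to make Dashboard act as a user when it is accessed over HTTP

To make Dashboard use the authorization header, simply pass Authorization: Bearer <token> in every request to Dashboard. This can be done by placing a reverse proxy in front of Dashboard. The proxy handles authentication with the identity provider and passes the generated token to Dashboard in the request header. Note that the Kubernetes API server must be configured correctly to accept these tokens.

Note: the authorization header does not work if Dashboard is accessed through the API server proxy. Neither the kubectl proxy nor the API server access methods described in the Accessing Dashboard guide will work, because all extra headers are dropped once the request reaches the API server.

List the tokens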

# kubectl -n kube-system get secret

NAME TYPE DATA AGE

default-token-qgzzx kubernetes.io/service-account-token 3 6h

heapster-token-kh678 kubernetes.io/service-account-token 3 5h

kube-dns-token-jkwbf kubernetes.io/service-account-token 3 5h

kubernetes-dashboard-certs Opaque 2 6h

kubernetes-dashboard-key-holder Opaque 2 6h

kubernetes-dashboard-token-x76k5 kubernetes.io/service-account-token 3 6h
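The secrets listed above store the token base64-encoded under the `token` key. To log in with a bearer token, it has to be decoded first. A minimal sketch, assuming GNU `base64` (which uses `-d` to decode); the function name `secret_token` and the `KUBECTL` override variable are hypothetical and only serve this example.

```shell
#!/bin/sh
# secret_token NAME: print the decoded bearer token stored in a
# service-account secret in the kube-system namespace.
# KUBECTL can point at a stand-in command for testing (an assumption of
# this sketch, not a kubectl feature).
secret_token() {
    "${KUBECTL:-kubectl}" -n kube-system get secret "$1" \
        -o jsonpath='{.data.token}' | base64 -d
}
```

With the listing above, `secret_token kubernetes-dashboard-token-x76k5` would print the token of the Dashboard service account. The random suffix differs on every cluster, so take the name from `kubectl -n kube-system get secret`.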

b. Bearer Token

See the Kubernetes authentication documentation:

https://kubernetes.io/docs/admin/authentication/

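c. Basic

Basic authentication is disabled by default, because the Kubernetes API server must be configured with authorization mode ABAC and the --basic-auth-file flag. Without that, the API server automatically falls back to the anonymous user, and there is no way to check whether the provided credentials are valid.

To enable basic auth in Dashboard, the --authentication-mode=basic flag must be set. By default it is --authentication-mode=token.

d. Kubeconfig

This login method is provided for convenience. Only the authentication options specified by the --authentication-mode flag are supported in the kubeconfig file. If the file is configured to use any other method, Dashboard shows an error. External identity providers and certificate-based authentication are not supported at this time.

e. Admin privileges

Granting admin privileges to Dashboard's Service Account can be a security risk.

You can grant full admin privileges to Dashboard's Service Account by creating the ClusterRoleBinding below. Copy the YAML for your chosen installation method and save it as, for example, dashboard-admin.yaml. Deploy it with kubectl create -f dashboard-admin.yaml. Afterwards, you can use the Skip option on the login page to access Dashboard.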

# vim dashboard-admin.yaml

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

The official manifests use the following images:

kubernetes-dashboard-init (note: this image only appears in version 1.7)

kubernetes-dashboard

A. Officially recommended setup, with stricter access control

# curl -O https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

B. Alternative setup, which keeps the behavior of earlier versions

# curl -O https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/alternative/kubernetes-dashboard.yaml

Replace the image registry and apply the file

# sed -i 's/gcr.io\/google_containers/192.168.100.100\/k8s/g' kubernetes-dashboard.yaml

# kubectl create -f kubernetes-dashboard.yaml

secret "kubernetes-dashboard-certs" created

serviceaccount "kubernetes-dashboard" created

role "kubernetes-dashboard-minimal" created

rolebinding "kubernetes-dashboard-minimal" created

deployment "kubernetes-dashboard" created

service "kubernetes-dashboard" created

The image used for kubernetes-dashboard can be downloaded from:

hub.c.163.com/zhijiansd/kubernetes-dashboard-amd64:v1.8.0

Check the deployment

# kubectl get pods -n kube-system | grep dash ### list the pod

kubernetes-dashboard-7cc94ffffd-n55lf 1/1 Running 0 33s

# kubectl get pod -o wide --all-namespaces ### show pod status and node placement

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE

default my-nginx-7bd7b4dbf-b6m6q 1/1 Running 1 12h 10.254.95.2 node2

kube-system heapster-d7f5dc5bf-8v452 1/1 Running 1 12h 10.254.59.2 node1

kube-system kube-dns-84cc5f56fb-m2h4d 3/3 Running 3 12h 10.254.80.2 master

kube-system kubernetes-dashboard-78b55c9d4d-bdbp6 1/1 Running 1 12h 10.254.95.3 node2

kube-system monitoring-grafana-98d44cd67-vlc4s 1/1 Running 1 12h 10.254.80.3 master

kube-system monitoring-influxdb-6b6d749d9c-lh9ln 1/1 Running 1 12h 10.254.59.3 node1

# kubectl top node -n kube-system ### show CPU, memory, and storage usage (requires heapster)

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

master 377m 4% 587Mi 7%

node1 185m 2% 371Mi 4%

node2 167m 2% 326Mi 4%

# kubectl top pod -n kube-system

NAME CPU(cores) MEMORY(bytes)

monitoring-influxdb-6b6d749d9c-lh9ln 3m 45Mi

heapster-d7f5dc5bf-8v452 5m 34Mi

kube-dns-84cc5f56fb-m2h4d 5m 36Mi

kubernetes-dashboard-78b55c9d4d-bdbp6 2m 22Mi

monitoring-grafana-98d44cd67-vlc4s 1m 19Mi

Open in a browser:

https://master:6443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/

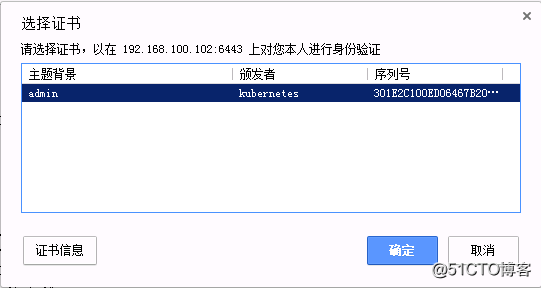

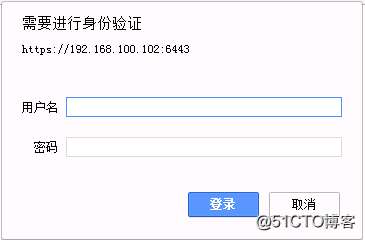

The browser prompts for a certificate ====>

After clicking OK and connecting, you are prompted to authenticate ====>

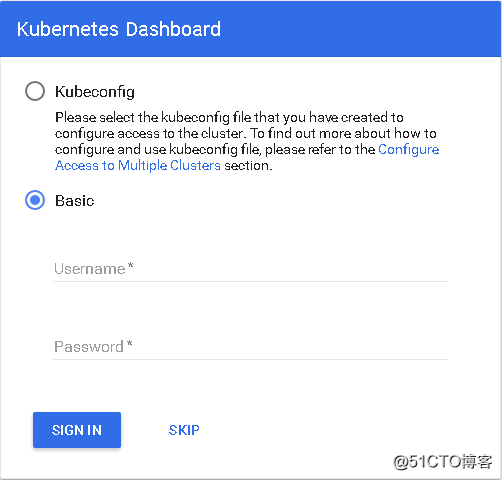

After entering the username and password, a dialog appears; choose Basic here and enter the username and password again ====>

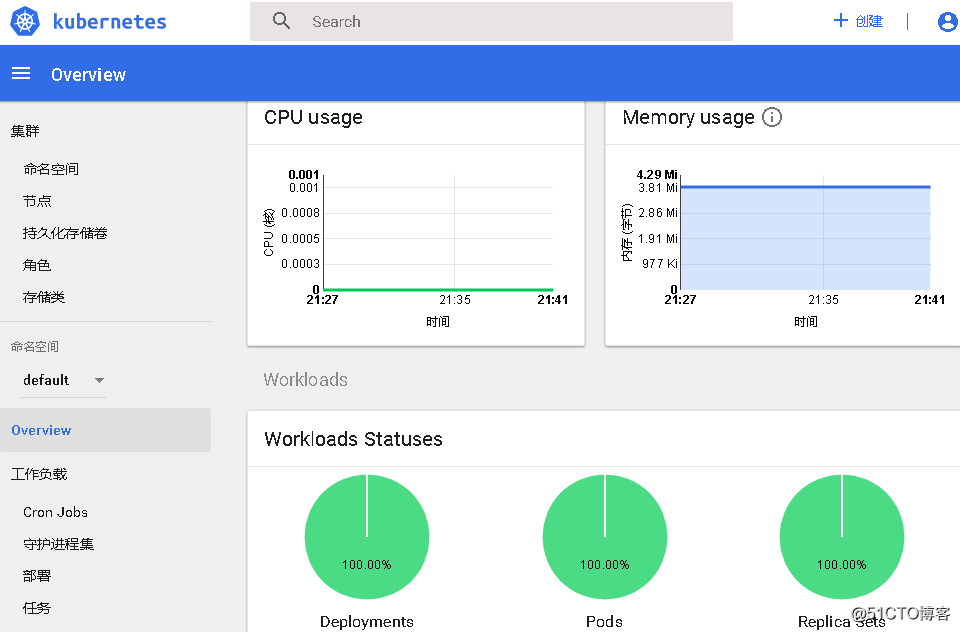

The default view ====>

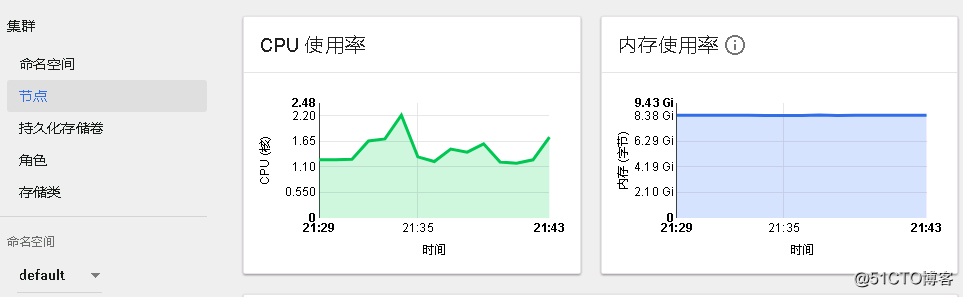

Node usage ====>

Pod status ====>

Scheduling

a. Check the current replica count

# kubectl get rs

NAME DESIRED CURRENT READY AGE

my-nginx-7bd7b4dbf 1 1 1 12h

b. Scale up to two replicas

# kubectl scale --replicas=2 -f my-nginx.yaml

deployment "my-nginx" scaled

c. Check the replica count after scaling up

# kubectl get rs

NAME DESIRED CURRENT READY AGE

my-nginx-7bd7b4dbf 2 2 2 12h

# kubectl get pod

NAME READY STATUS RESTARTS AGE

my-nginx-7bd7b4dbf-b6m6q 1/1 Running 1 12h

my-nginx-7bd7b4dbf-qk6qp 1/1 Running 0 1m

d. Scale down to one replica

# kubectl scale --replicas=1 -f my-nginx.yaml

deployment "my-nginx" scaled

e. Check the replica count after scaling down

# kubectl get rs

NAME DESIRED CURRENT READY AGE

my-nginx-7bd7b4dbf 1 1 1 12h

# kubectl get pod

NAME READY STATUS RESTARTS AGE

my-nginx-7bd7b4dbf-b6m6q 1/1 Running 1 12h

f. Mark a node as unschedulable (newly created pods will not be placed on it)

# kubectl cordon node2

node "node2" cordoned

# kubectl get node | grep node2

NAME STATUS ROLES AGE VERSION

node2 Ready,SchedulingDisabled <none> 12h v1.8.2

g. Gracefully move the pods on the node to other nodes

# kubectl get pod -o wide --all-namespaces | grep node2

my-nginx-7bd7b4dbf-b6m6q 1/1 Running 1 12h 10.254.95.2 node2

kubernetes-dashboard-78b55c9d4d-bdbp6 1/1 Running 1 12h 10.254.95.3 node2

# kubectl drain node2

node "node2" already cordoned

error: pods with local storage (use --delete-local-data to override): kubernetes-dashboard-78b55c9d4d-bdbp6

Note: the error says the pod uses local storage; moving it to another node wipes that data.

# kubectl drain node2 --delete-local-data

node "node2" already cordoned

WARNING: Deleting pods with local storage: kubernetes-dashboard-78b55c9d4d-bdbp6

pod "my-nginx-7bd7b4dbf-b6m6q" evicted

pod "kubernetes-dashboard-78b55c9d4d-bdbp6" evicted

node "node2" drained

Check whether any pods remain on the node

# kubectl get pod -o wide --all-namespaces | grep node2

Check whether the pods have moved to other nodes

# kubectl get pod -o wide --all-namespaces

Uncordon the node (make it schedulable again)

# kubectl uncordon node2

node "node2" uncordoned

# kubectl get node | grep node2

node2 Ready <none> 12h v1.8.2
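The cordon, drain, and uncordon steps can be collected into two small helpers for routine node maintenance. This is a sketch under stated assumptions: the function names and the `KUBECTL` override variable are hypothetical, and `--ignore-daemonsets` is an addition of this example that the walkthrough above did not need.

```shell
#!/bin/sh
# drain_node NODE: cordon the node, then evict its pods.
# --delete-local-data discards pod-local (emptyDir) data, as in the walkthrough;
# --ignore-daemonsets is an addition of this sketch, so that DaemonSet-managed
# pods do not block the drain.
# KUBECTL=echo gives a dry run (an assumption of this sketch).
drain_node() {
    kubectl_bin="${KUBECTL:-kubectl}"
    "$kubectl_bin" cordon "$1" &&
    "$kubectl_bin" drain "$1" --delete-local-data --ignore-daemonsets
}

# restore_node NODE: make the node schedulable again after maintenance.
restore_node() {
    "${KUBECTL:-kubectl}" uncordon "$1"
}
```

Typical use is `drain_node node2`, then the maintenance work, then `restore_node node2`. Running with `KUBECTL=echo` first shows exactly which commands would be issued.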

Note: many things can go wrong during deployment. If a pod is not Running, use kubectl logs <pod-name> -n <namespace> to investigate; if a pod is Running but cannot be reached, the cause may be a proxy problem.

Reposted from: https://blog.51cto.com/wangzhijian/2047357