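This series covers design patterns that make modern agentic systems more reliable. It introduces each concept intuitively, breaks down its purpose, then implements a simple working version to show how it fits into real-world agentic systems. The series has 14 articles; this is the 6th. Original: Building the 14 Key Pillars of Agentic AI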

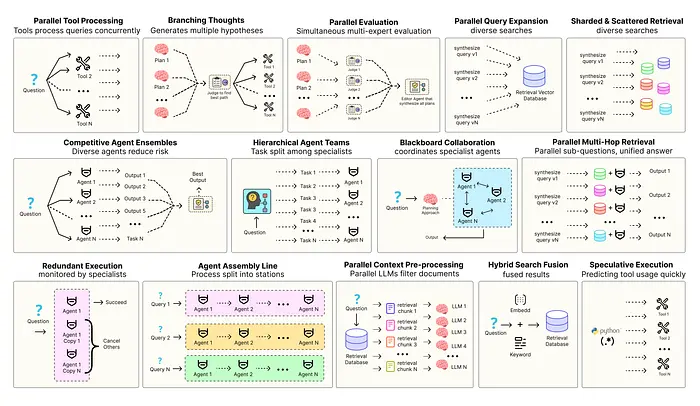

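Optimizing an agentic solution takes software engineering: components must coordinate, run in parallel, and interact with the system efficiently. Speculative execution, for example, tries to process predictable queries ahead of time to reduce latency, while redundant execution runs the same agent several times to guard against a single point of failure. Other patterns that improve the reliability of modern agentic systems include:

- Parallel tool use: the agent executes independent API calls at the same time to hide I/O latency.
- Hierarchical agents: a manager splits the task into small steps handled by worker agents.
- Competitive agent ensembles: several agents propose answers and the system picks the best one.
- Redundant execution: two or more agents solve the same task to detect errors and improve reliability.
- Parallel and hybrid retrieval: multiple retrieval strategies run together to improve context quality.
- Multi-hop retrieval: the agent gathers deeper, more relevant information through iterative retrieval steps.

There are many other patterns as well.

This series implements the foundational concepts behind the most commonly used agentic patterns, one pattern at a time.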

All the theory and code are in the GitHub repository: 🤖 Agentic Parallelism: A Practical Guide 🚀

The codebase is organized as follows:

    agentic-parallelism/
    ├── 01_parallel_tool_use.ipynb
    ├── 02_parallel_hypothesis.ipynb
    ...
    ├── 06_competitive_agent_ensembles.ipynb
    ├── 07_agent_assembly_line.ipynb
    ├── 08_decentralized_blackboard.ipynb
    ...
    ├── 13_parallel_context_preprocessing.ipynb
    └── 14_parallel_multi_hop_retrieval.ipynb

Competitive Agent Ensembles

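In an agentic solution, every AI agent has its own biases, strengths, and weaknesses.

Using a diverse ensemble of agents reduces the risk that any single agent produces a suboptimal or flawed output.

By combining multiple models or prompting strategies, the system becomes more resilient, avoids a single point of failure, and is more likely to produce high-quality output.

The pattern is the AI equivalent of seeking a "second opinion" or running a competitive design process.

We will build an ensemble of three copywriting agents with clearly different styles, each writing a product description, and then see how a judge reasons over their outputs and picks the best one. The judge step is what turns the ensemble into a quality-control process.

First, an ensemble's strength comes from the diversity of its members. We will use two different LLM families to create three distinct copywriting "personas" and so maximize diversity.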

    from langchain_huggingface import HuggingFacePipeline
    from transformers import AutoTokenizer, AutoModelForCausalLM, pipeline
    from langchain_google_vertexai import ChatVertexAI
    import torch

    # LLM 1: Llama 3 8B Instruct (open source, run locally via Hugging Face)
    # Powers two of the three personas
    model_id = "meta-llama/Meta-Llama-3-8B-Instruct"
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    hf_model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.bfloat16,
        device_map="auto",
        load_in_4bit=True
    )
    pipe = pipeline("text-generation", model=hf_model, tokenizer=tokenizer, max_new_tokens=1024, do_sample=True, temperature=0.7, top_p=0.9)
    llama3_llm = HuggingFacePipeline(pipeline=pipe)

    # LLM 2: Claude 3.5 Sonnet on Vertex AI (proprietary, cloud-hosted)
    # A model from a different vendor, trained differently, adds real diversity
    # Note: the client class and model ID are kept as in the original; check both
    # against your Vertex AI setup and library version before running.
    claude_sonnet_llm = ChatVertexAI(model_name="claude-3-5-sonnet@001", temperature=0.7)

    print("LLMs Initialized: Llama 3 and Claude 3.5 Sonnet are ready to compete.")

Using two entirely different models, the open-source Llama 3 and Anthropic's Claude 3.5 Sonnet (served here through Google's Vertex AI), gives the ensemble genuine diversity. The agents differ in writing style, knowledge cutoff, and built-in biases, which is exactly what a strong competitive process needs.

Next, define Pydantic models to structure the output of the copywriting agents and of the final judge.

    from langchain_core.pydantic_v1 import BaseModel, Field
    from typing import List

    class ProductDescription(BaseModel):
        """A structured product description with a headline and a body; the output of each copywriting agent."""
        headline: str = Field(description="A catchy, attention-grabbing headline for the product.")
        body: str = Field(description="A short paragraph (2-3 sentences) detailing the product's benefits and features.")

    class FinalEvaluation(BaseModel):
        """The judge agent's structured output: the best description plus a detailed critique."""
        best_description: ProductDescription = Field(description="The winning product description chosen by the judge.")
        critique: str = Field(description="A detailed, point-by-point critique explaining why the winner was chosen over the other options, referencing the evaluation criteria.")
        winning_agent: str = Field(description="The name of the agent that produced the winning description (e.g., 'Claude_Sonnet_Creative', 'Llama3_Direct', 'Llama3_Luxury').")

These data models are the communication protocol.

The ProductDescription schema ensures that all three competitors submit their output in the same, consistent format. The FinalEvaluation schema matters even more: it forces the judge not only to name a winning_agent but also to provide a detailed critique, which makes the decision transparent and auditable.
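Because the judge's verdict is structured, it can be checked mechanically before anyone relies on it. The following is a minimal, stdlib-only sketch of such a check. `Description`, `Verdict`, and `audit_verdict` are hypothetical stand-ins invented for this illustration; they are not part of the original code or of Pydantic.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Description:
    """Hypothetical stand-in for ProductDescription."""
    headline: str
    body: str


@dataclass(frozen=True)
class Verdict:
    """Hypothetical stand-in for the judge's structured output."""
    winning_agent: str
    critique: str


def audit_verdict(verdict: Verdict, submissions: Dict[str, Description]) -> List[str]:
    """Return a list of problems found in the verdict (empty if none)."""
    problems = []
    # The winner must be one of the agents that actually submitted something.
    if verdict.winning_agent not in submissions:
        problems.append(f"unknown winner: {verdict.winning_agent!r}")
    # A verdict without a justification cannot be audited.
    if not verdict.critique.strip():
        problems.append("critique is empty")
    return problems


submissions = {
    "agent_a": Description("Headline A", "Body A."),
    "agent_b": Description("Headline B", "Body B."),
}
good = Verdict(winning_agent="agent_a", critique="Clearer benefits than agent_b.")
bad = Verdict(winning_agent="agent_c", critique="   ")

print(audit_verdict(good, submissions))
print(audit_verdict(bad, submissions))
```

A check like this could run right after the judge step, so that a verdict naming a non-existent agent is rejected rather than silently accepted.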

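Now define the GraphState and the agent nodes. The nodes are created through a helper function to cut down on repeated code.

    from typing import TypedDict, Annotated, Dict
    import operator
    import time

    def merge_results(left: dict, right: dict) -> dict:
        # Reducer that merges the partial dicts returned by the parallel competitors.
        # (The operator module has no `update` function, so a small helper is used.)
        return {**left, **right}

    class GraphState(TypedDict):
        product_name: str
        product_category: str
        features: str
        # Dict holding the results from the competing agents, merged by merge_results
        competitor_results: Annotated[Dict[str, ProductDescription], merge_results]
        final_evaluation: FinalEvaluation
        performance_log: Annotated[List[str], operator.add]

    # Helper "factory" function that creates a competitor node
    def create_competitor_node(agent_name: str, llm, prompt):
        # Each competitor is a chain: prompt -> LLM -> Pydantic structured output
        chain = prompt | llm.with_structured_output(ProductDescription)

        def competitor_node(state: GraphState):
            print(f"--- [COMPETITOR: {agent_name}] Starting generation... ---")
            start_time = time.time()
            result = chain.invoke({
                "product_name": state['product_name'],
                "product_category": state['product_category'],
                "features": state['features']
            })
            execution_time = time.time() - start_time
            log = f"[{agent_name}] Completed in {execution_time:.2f}s."
            print(log)
            # The output key matches the agent name, which makes aggregation easy
            return {"competitor_results": {agent_name: result}, "performance_log": [log]}

        return competitor_node

create_competitor_node is the simplest way to build a diverse ensemble: give it a name, an LLM, and a prompt, and it returns a complete LangGraph node with structured output. That lets us define three competitors easily, each with its own model and persona.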
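The reason the state fields carry reducers is that several nodes write to the same key in the same step, and their partial updates must be combined rather than overwritten. The sketch below illustrates that idea with plain Python. `REDUCERS` and `apply_update` are hypothetical names made up for this example, and the code is only an approximation of the concept, not LangGraph's actual implementation.

```python
import operator
from typing import Any, Callable, Dict


def merge_dicts(left: dict, right: dict) -> dict:
    """Combine two dicts; keys in `right` win on conflict."""
    return {**left, **right}


# Hypothetical registry: which reducer to use for which state key.
REDUCERS: Dict[str, Callable[[Any, Any], Any]] = {
    "competitor_results": merge_dicts,
    "performance_log": operator.add,  # list concatenation
}


def apply_update(state: Dict[str, Any], update: Dict[str, Any]) -> Dict[str, Any]:
    """Fold one node's partial update into the shared state."""
    new_state = dict(state)
    for key, value in update.items():
        reducer = REDUCERS.get(key)
        if reducer is not None and key in new_state:
            new_state[key] = reducer(new_state[key], value)
        else:
            # Keys without a reducer are simply overwritten.
            new_state[key] = value
    return new_state


state: Dict[str, Any] = {"competitor_results": {}, "performance_log": []}
updates = [
    {"competitor_results": {"agent_a": "draft A"}, "performance_log": ["[agent_a] done"]},
    {"competitor_results": {"agent_b": "draft B"}, "performance_log": ["[agent_b] done"]},
]
for update in updates:
    state = apply_update(state, update)

print(state)
```

Without a reducer on `competitor_results`, the second update would replace the first and one competitor's draft would be lost.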

Now create the three competitor nodes and the final judge node.

    # Use the factory to create three competitor nodes
    # Each has a different model/prompt combination to ensure diversity
    # (the *_prompt templates and judge_prompt are not shown in this article)
    claude_creative_node = create_competitor_node("Claude_Sonnet_Creative", claude_sonnet_llm, claude_creative_prompt)
    llama3_direct_node = create_competitor_node("Llama3_Direct", llama3_llm, llama3_direct_prompt)
    llama3_luxury_node = create_competitor_node("Llama3_Luxury", llama3_llm, llama3_luxury_prompt)

    # Judge node
    def judge_node(state: GraphState):
        """Evaluate all competitors' results and pick a winner."""
        print("--- [JUDGE] Evaluating competing descriptions... ---")
        start_time = time.time()

        # Format the descriptions for the judge prompt
        descriptions_to_evaluate = ""
        for name, desc in state['competitor_results'].items():
            descriptions_to_evaluate += f"--- Option from {name} ---\nHeadline: {desc.headline}\nBody: {desc.body}\n\n"

        # Build the evaluation chain
        # Assumption: the original referenced an undefined `llm` here;
        # claude_sonnet_llm is used as the judge model in its place.
        judge_chain = judge_prompt | claude_sonnet_llm.with_structured_output(FinalEvaluation)
        evaluation = judge_chain.invoke({
            "product_name": state['product_name'],
            "descriptions_to_evaluate": descriptions_to_evaluate
        })

        execution_time = time.time() - start_time
        log = f"[Judge] Completed evaluation in {execution_time:.2f}s."
        print(log)
        return {"final_evaluation": evaluation, "performance_log": [log]}

The ensemble is now in place: three copywriting nodes, each designed for a different style, plus one judge node that performs the final critical evaluation of the collected outputs.
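The prompt templates passed to the competitor and judge nodes are not shown in the article. As a rough illustration of what they might look like, here is a sketch using plain format strings. The wording of every template, the `PERSONA_TEMPLATES` and `JUDGE_TEMPLATE` names, and the sample category and feature values are all assumptions made for this example. In a LangChain setup, strings like these would typically be wrapped in a prompt template object before being piped into a model.

```python
from typing import Dict, Tuple

# Hypothetical persona prompts: same inputs, different voice.
PERSONA_TEMPLATES: Dict[str, str] = {
    "Claude_Sonnet_Creative": (
        "You are a creative copywriter who leads with customer benefits. "
        "Write a headline and a 2-3 sentence body for {product_name}, "
        "a {product_category}. Features: {features}"
    ),
    "Llama3_Direct": (
        "You are a direct, no-nonsense copywriter. State the facts plainly. "
        "Write a headline and a 2-3 sentence body for {product_name}, "
        "a {product_category}. Features: {features}"
    ),
    "Llama3_Luxury": (
        "You are a copywriter for a luxury brand. Use an aspirational tone. "
        "Write a headline and a 2-3 sentence body for {product_name}, "
        "a {product_category}. Features: {features}"
    ),
}

# Hypothetical judge prompt: asks for a winner and a justification.
JUDGE_TEMPLATE = (
    "You are judging product descriptions for {product_name}. "
    "Compare the options on clarity, persuasiveness, and accuracy, "
    "then name the winner and explain why.\n\n{descriptions_to_evaluate}"
)


def render_competitor_prompt(agent_name: str, product_name: str,
                             product_category: str, features: str) -> str:
    """Fill in one persona template with the product details."""
    return PERSONA_TEMPLATES[agent_name].format(
        product_name=product_name,
        product_category=product_category,
        features=features,
    )


def format_options(results: Dict[str, Tuple[str, str]]) -> str:
    """Build one labelled section per competitor, as the loop in judge_node does."""
    return "".join(
        f"--- Option from {name} ---\nHeadline: {headline}\nBody: {body}\n\n"
        for name, (headline, body) in results.items()
    )


prompt = render_competitor_prompt(
    "Llama3_Direct", "Aura Smart Ring", "smart ring", "titanium, 7-day battery"
)
options = format_options({
    "Llama3_Direct": ("Headline 1", "Body 1."),
    "Llama3_Luxury": ("Headline 2", "Body 2."),
})
print(prompt)
print(JUDGE_TEMPLATE.format(product_name="Aura Smart Ring",
                            descriptions_to_evaluate=options))
```

Keeping the input variables identical across personas is what lets a single factory function build every competitor; only the instructions differ.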

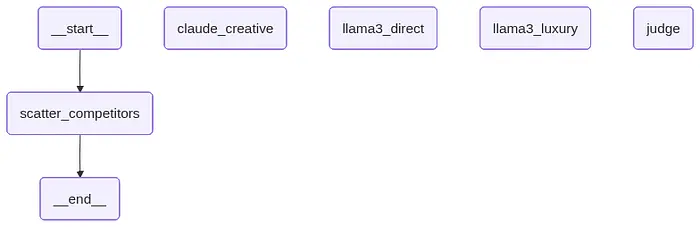

Finally, assemble the graph using a fan-out/fan-in architecture.
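The fan-out/fan-in shape itself can be illustrated without any framework. The sketch below uses a thread pool from the standard library: every competitor is submitted at once (fan-out), and the judge runs only after all results have been collected (fan-in). The competitors and the judge here are toy functions invented for the example. In particular, the "longest draft wins" rule merely stands in for an LLM judge.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple


def make_competitor(name: str, style: str) -> Callable[[str], Tuple[str, str]]:
    """Factory for a toy competitor that tags the product with its style."""
    def run(product: str) -> Tuple[str, str]:
        return name, f"[{style}] {product}"
    return run


def judge(results: Dict[str, str]) -> str:
    """Toy judge: pick the agent with the longest draft."""
    return max(results, key=lambda agent: len(results[agent]))


competitors: List[Callable[[str], Tuple[str, str]]] = [
    make_competitor("agent_a", "creative"),
    make_competitor("agent_b", "direct"),
    make_competitor("agent_c", "luxury"),
]


def run_ensemble(product: str) -> Tuple[Dict[str, str], str]:
    with ThreadPoolExecutor(max_workers=len(competitors)) as pool:
        # Fan-out: start every competitor without waiting for the others.
        futures = [pool.submit(competitor, product) for competitor in competitors]
        # Fan-in: result() blocks, so this line finishes only when all are done.
        results = dict(future.result() for future in futures)
    return results, judge(results)


results, winner = run_ensemble("Aura Smart Ring")
print(results)
print(winner)
```

The LangGraph assembly that follows expresses the same two steps declaratively, as edges into and out of the competitor nodes.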

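    from langgraph.graph import StateGraph, START, END

    workflow = StateGraph(GraphState)

    # Add the three competitor nodes
    workflow.add_node("claude_creative", claude_creative_node)
    workflow.add_node("llama3_direct", llama3_direct_node)
    workflow.add_node("llama3_luxury", llama3_luxury_node)

    # Add the final judge node
    workflow.add_node("judge", judge_node)

    # Fan-out: an edge from START to each competitor lets LangGraph run them in parallel
    # (set_entry_point takes a single node name, so one edge per node is used instead)
    workflow.add_edge(START, "claude_creative")
    workflow.add_edge(START, "llama3_direct")
    workflow.add_edge(START, "llama3_luxury")

    # Fan-in: a list of source nodes means the graph waits for all of them before continuing
    workflow.add_edge(["claude_creative", "llama3_direct", "llama3_luxury"], "judge")

    # The last step is the judge's decision
    workflow.add_edge("judge", END)

    app = workflow.compile()

Now for the final side-by-side analysis: we look at the three competing descriptions and the judge's final decision to see what the ensemble buys us.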

    # The article does not show the invocation. `inputs` is assumed to be a dict
    # with the product_name, product_category and features keys of GraphState.
    final_state = app.invoke(inputs)

    print("="*60)
    print(" THE COMPETING PRODUCT DESCRIPTIONS")
    print("="*60)

    # The results are Pydantic objects, so fields are read as attributes
    for name, desc in final_state['competitor_results'].items():
        print(f"\n--- [{name}] ---")
        print(f"Headline: {desc.headline}")
        print(f"Body: {desc.body}")

    print("\n" + "="*60)
    print(" THE JUDGE'S FINAL VERDICT")
    print("="*60)

    final_eval = final_state['final_evaluation']
    print(f"\nWinning Agent: {final_eval.winning_agent}\n")
    print("Winning Description:")
    print(f" - Headline: {final_eval.best_description.headline}")
    print(f" - Body: {final_eval.best_description.body}\n")
    print("Judge's Critique:")
    print(final_eval.critique)

    print("\n" + "-"*60)
    print(" PERFORMANCE ANALYSIS")
    print("-"*60)

    # Timings in seconds, hard-coded for this run
    competitor_times = [7.33, 6.12, 6.45]
    judge_time = 8.91
    parallel_time = max(competitor_times)    # competitors run concurrently
    sequential_time = sum(competitor_times)  # what a one-by-one run would cost
    total_time = parallel_time + judge_time
    print(f"\nTotal Execution Time: {total_time:.2f} seconds\n")

Output:

    ============================================================
     THE COMPETING PRODUCT DESCRIPTIONS
    ============================================================

    --- [Claude_Sonnet_Creative] ---
    Headline: Your Life, Unlocked. Your Wellness, Understood.
    Body: The Aura Smart Ring is more than a tracker; it's your silent wellness partner. Crafted from durable titanium, it deciphers your body's signals-sleep, activity, and heart rate-translating them into insights that empower your every day. With a 7-day battery, it's always on, always learning, always you.

    --- [Llama3_Direct] ---
    Headline: Track Everything. Wear Nothing.
    Body: Meet the Aura Smart Ring. Get elite sleep and activity tracking, 24/7 heart rate monitoring, and a 7-day battery. Built from tough titanium, it delivers powerful health insights without the bulk of a watch.

    --- [Llama3_Luxury] ---
    Headline: Master Your Narrative.
    Body: For the discerning individual, the Aura Smart Ring is an emblem of effortle...ously engineered from aerospace-grade titanium, it provides a seamless interface to your personal biometrics. Command your well-being with seven days of uninterrupted power and unparalleled insight.

    ============================================================
     THE JUDGE'S FINAL VERDICT
    ============================================================

    Winning Agent: Claude_Sonnet_Creative

    Winning Description:
     - Headline: Your Life, Unlocked. Your Wellness, Understood.
     - Body: The Aura Smart Ring is more than a tracker; it's your silent wellness partner. Crafted from durable titanium, it deciphers your body's signals-sleep, activity, and heart rate-translating them into insights that empower your every day. With a 7-day battery, it's always on, always learning, always you.

    ------------------------------------------------------------
     PERFORMANCE ANALYSIS
    ------------------------------------------------------------

    Total Execution Time: 16.24 seconds

The final analysis highlights two key advantages of the competitive ensemble pattern.

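- High quality through diversity plus a judge: the three agents produced clearly different outputs, with Llama3_Direct punchy, Llama3_Luxury aspirational, and Claude_Sonnet_Creative benefit-driven. That diversity gives the judge a richer set of options to choose from. Its final choice reflects explicit reasoning about trade-offs, which shows that the quality comes from the process (competition plus evaluation) rather than from any single model.

- High performance through parallelism: running all agents in parallel cut the generation stage from 19.90 s (the sum of the three competitor times) to 7.33 s (the slowest competitor), about 63% less. Counting the 8.91 s judge step, the end-to-end time is 16.24 s instead of 28.81 s, roughly 44% less. For about the time cost of the slowest agent, we get a diverse set of outputs to pick from.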
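The timing figures can be checked with a few lines of arithmetic. This sketch reuses the hard-coded timings from the analysis code above and computes the saving for the generation stage alone and for the whole pipeline. The sequential figures are hypothetical: they assume each competitor would take the same time when run one after another.

```python
# Timings in seconds, taken from the analysis code in this article.
competitor_times = [7.33, 6.12, 6.45]
judge_time = 8.91

# Generation stage: parallel cost is the slowest agent, sequential is the sum.
parallel_stage = max(competitor_times)
sequential_stage = sum(competitor_times)
stage_saving = 1 - parallel_stage / sequential_stage

# End to end: the judge runs after the competitors in both cases.
parallel_total = parallel_stage + judge_time
sequential_total = sequential_stage + judge_time
total_saving = 1 - parallel_total / sequential_total

print(f"Generation stage: {parallel_stage:.2f}s vs {sequential_stage:.2f}s "
      f"({stage_saving:.0%} less)")
print(f"End to end:       {parallel_total:.2f}s vs {sequential_total:.2f}s "
      f"({total_saving:.0%} less)")
```

The judge step is inherently sequential, so it caps how much the whole pipeline can gain from parallelizing the competitors.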

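Hi, I'm 俞凡, a technical manager who combines technical depth with a management perspective. I previously worked at Motorola and now work at Mavenir. I have led engineering teams for many years, focusing on backend architecture and cloud native, and I keep a close eye on AI and other frontier areas as well as on people's growth, with a firm belief in the power of continuous learning. Here I share technical practice and reflections. You are welcome to follow the WeChat official account "DeepNoMind" and to visit www.DeepNoMind.com.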
