Disclaimer: I'm using VS2022. If anything below is wrong, corrections are very welcome!

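Assignment requirements:

Expected result:

💡 What we need to do:

Keywords: normal vectors; interpolation; lighting model; bump mapping; displacement mapping

1. Changes in rasterizer.cpp: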

void rst::rasterizer::rasterize_triangle(const Triangle& t, const std::array<Eigen::Vector3f, 3>& view_pos)

{

// TODO: From your HW3,

// TODO: Inside your rasterization loop:

// * v[i].w() is the vertex view space depth value z.

// * Z is interpolated view space depth for the current pixel

// * zp is depth between zNear and zFar, used for z-buffer

// float Z = 1.0 / (alpha / v[0].w() + beta / v[1].w() + gamma / v[2].w());

// float zp = alpha * v[0].z() / v[0].w() + beta * v[1].z() / v[1].w() + gamma * v[2].z() / v[2].w();

// zp *= Z;

// TODO: Interpolate the attributes:

// auto interpolated_color

// auto interpolated_normal

// auto interpolated_texcoords

// auto interpolated_shadingcoords

// Use: fragment_shader_payload payload( interpolated_color, interpolated_normal.normalized(), interpolated_texcoords, texture ? &*texture : nullptr);

// Use: payload.view_pos = interpolated_shadingcoords;

// Use: Instead of passing the triangle's color directly to the frame buffer, pass the color to the shaders first to get the final color;

// Use: auto pixel_color = fragment_shader(payload);

auto v = t.toVector4(); // homogeneous (4D) coordinates of the triangle's three vertices

int min_x = INT_MAX;

int max_x = INT_MIN;

int min_y = INT_MAX;

int max_y = INT_MIN;

for (auto point : v)

{

if (point[0] < min_x)min_x = point[0];

if (point[0] > max_x)max_x = point[0];

if (point[1] < min_y)min_y = point[1];

if (point[1] > max_y)max_y = point[1];

}

for (int y = min_y; y <= max_y; y++)

{

for (int x = min_x; x <= max_x; x++)

{

if (insideTriangle((float)x + 0.5, (float)y + 0.5, t.v))

{

// Barycentric coordinates of the pixel center

auto abg = computeBarycentric2D((float)x + 0.5, (float)y + 0.5, t.v);

float alpha = std::get<0>(abg);

float beta = std::get<1>(abg);

float gamma = std::get<2>(abg);

// Depth (z-buffer) interpolation

float w_reciprocal = 1.0 / (alpha / v[0].w() + beta / v[1].w() + gamma / v[2].w()); // normalization factor: reciprocal of the interpolated 1/w

float z_interpolated = alpha * v[0].z() / v[0].w() + beta * v[1].z() / v[1].w() + gamma * v[2].z() / v[2].w();

z_interpolated *= w_reciprocal; // perspective correction

if (z_interpolated < depth_buf[get_index(x, y)])

{

Eigen::Vector2i p(x, y); // integer pixel coordinates; no float cast is needed for a Vector2i

// Interpolate the color

auto interpolated_color = interpolate(alpha, beta, gamma, t.color[0], t.color[1], t.color[2], 1);

// Interpolate the normal

auto interpolated_normal = interpolate(alpha, beta, gamma, t.normal[0], t.normal[1], t.normal[2], 1);

// Interpolate the texture coordinates

auto interpolated_texcoords = interpolate(alpha, beta, gamma, t.tex_coords[0], t.tex_coords[1], t.tex_coords[2], 1);

// Interpolate the view-space position of the shading point

auto interpolated_shadingcoords = interpolate(alpha, beta, gamma, view_pos[0], view_pos[1], view_pos[2], 1);

fragment_shader_payload payload(interpolated_color, interpolated_normal.normalized(), interpolated_texcoords, texture ? &*texture : nullptr);

payload.view_pos = interpolated_shadingcoords;

auto pixel_color = fragment_shader(payload);

set_pixel(p, pixel_color);

depth_buf[get_index(x, y)] = z_interpolated; // update the stored depth

}

}

}

}

}
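In the function above, only the depth gets the perspective correction; the other attributes are interpolated directly with the screen-space barycentric weights. As a rough sketch, the same correction could be applied to any per-vertex attribute. The helper name `interpolate_perspective` is hypothetical and not part of the assignment framework, and it handles a single scalar attribute for brevity.

```cpp
#include <array>
#include <cassert>

// Hypothetical helper (not from the framework).
// bary: screen-space barycentric weights (alpha, beta, gamma)
// w:    view-space depth of each vertex (v[i].w() in the code above)
// attr: one scalar attribute value per vertex
float interpolate_perspective(const std::array<float, 3>& bary,
                              const std::array<float, 3>& w,
                              const std::array<float, 3>& attr)
{
    float inv_w = 0.0f; // interpolated 1/w
    float sum = 0.0f;   // interpolated attr/w
    for (int i = 0; i < 3; ++i) {
        assert(w[i] != 0.0f);
        inv_w += bary[i] / w[i];
        sum += bary[i] * attr[i] / w[i];
    }
    assert(inv_w != 0.0f);
    return sum / inv_w; // divide out the interpolated 1/w
}
```

When all three vertices share the same depth, this reduces to plain barycentric interpolation, which is a quick sanity check.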

2. Changes in main.cpp:

There are five steps:

1. Function get_projection_matrix(): fill in the projection matrix you implemented in the earlier assignments.

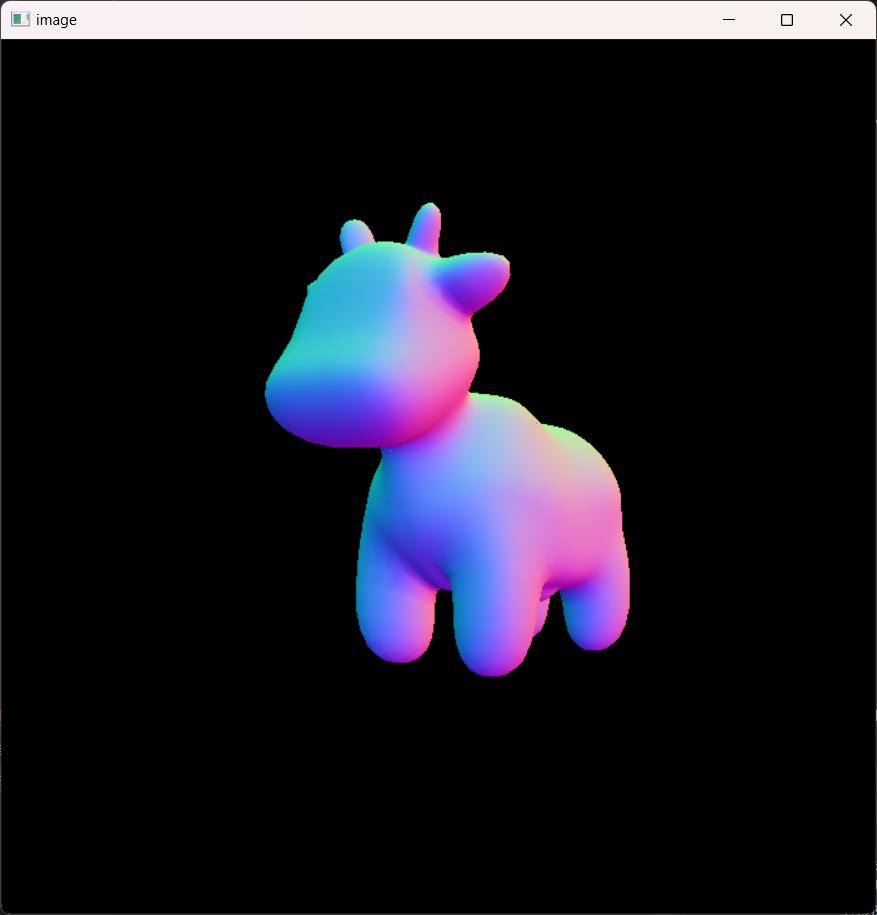

Running the program at this point produces the normal-vector visualization, i.e. the normal shader.

Eigen::Matrix4f get_projection_matrix(float eye_fov, float aspect_ratio, float zNear, float zFar)

{

// TODO: Use the same projection matrix from the previous assignments

Eigen::Matrix4f projection = Eigen::Matrix4f::Identity(); // start from the identity matrix

Eigen::Matrix4f M_trans; // translation matrix

Eigen::Matrix4f M_persp; // perspective-to-orthographic matrix

Eigen::Matrix4f M_ortho; // orthographic projection matrix

M_persp <<

zNear, 0, 0, 0,

0, zNear, 0, 0,

0, 0, zNear + zFar, -zFar * zNear,

0, 0, 1, 0;

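float alpha = 0.5 * eye_fov * MY_PI / 180.0f; // half the vertical field of view, converted from degrees to radians

float yTop = -zNear * std::tan(alpha); // top edge of the near plane (note the negated zNear)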

float yBottom = -yTop;

float xRight = yTop * aspect_ratio;

float xLeft = -xRight;

M_trans <<

1, 0, 0, -(xLeft + xRight) / 2,

0, 1, 0, -(yTop + yBottom) / 2,

0, 0, 1, -(zNear + zFar) / 2,

0, 0, 0, 1;

M_ortho <<

2 / (xRight - xLeft), 0, 0, 0,

0, 2 / (yTop - yBottom), 0, 0,

0, 0, 2 / (zNear - zFar), 0,

0, 0, 0, 1;

M_ortho = M_ortho * M_trans;

projection = M_ortho * M_persp * projection; // matrices are applied from right to left

return projection;

}
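A quick way to sanity-check the third row of M_persp is to see what it does to depth. After the homogeneous divide by w = z, the row [0, 0, n + f, -n f] gives z' = ((n + f) z - n f) / z. The sketch below only illustrates that formula; the function name `squish_depth` is made up for this example.

```cpp
#include <cassert>

// Depth produced by the row [0, 0, n + f, -n * f] of M_persp,
// followed by the homogeneous divide by w = z.
float squish_depth(float z, float n, float f)
{
    assert(z != 0.0f);
    return ((n + f) * z - n * f) / z;
}
```

Points on the near and far planes keep their depth (z = n maps to n and z = f maps to f), while points in between are pushed toward the far plane.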

// Run normal_fragment_shader

r.set_texture(Texture(Utils::PathFromAsset("model/spot/hmap.jpg")));

std::function<Eigen::Vector3f(fragment_shader_payload)> active_shader = normal_fragment_shader;

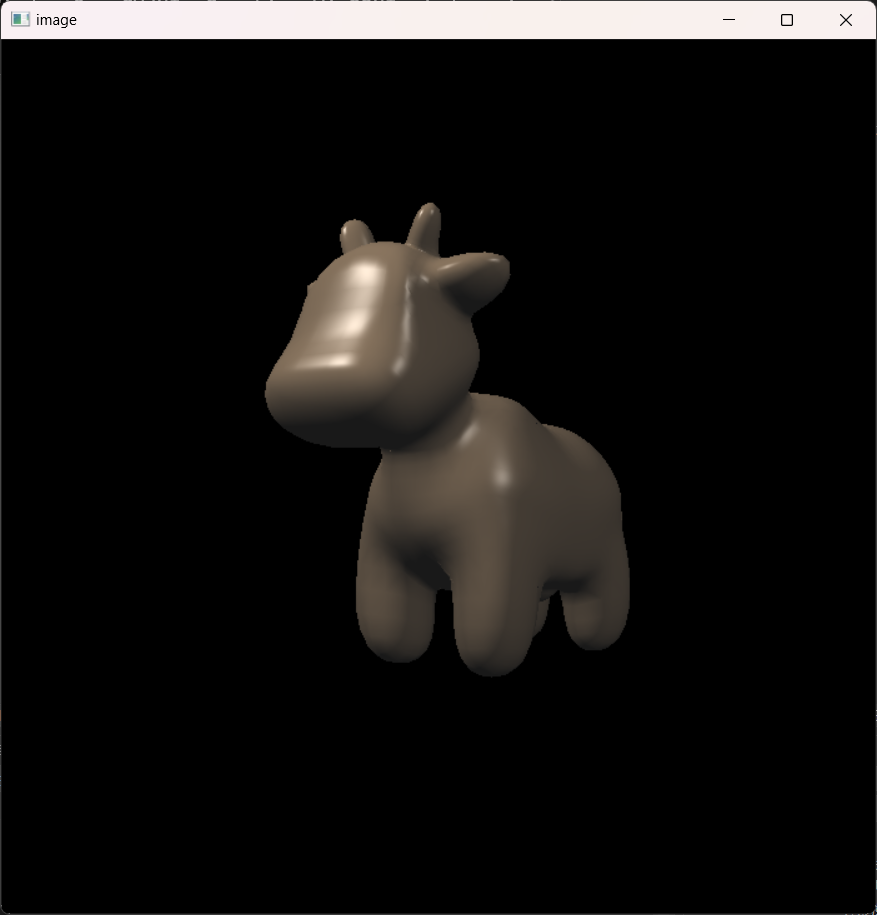

2. Function phong_fragment_shader(): implement the Blinn-Phong model to compute the fragment color.

Eigen::Vector3f phong_fragment_shader(const fragment_shader_payload& payload)

{

Eigen::Vector3f ka = Eigen::Vector3f(0.005, 0.005, 0.005); // ambient reflection coefficient

Eigen::Vector3f kd = payload.color; // diffuse reflection coefficient

Eigen::Vector3f ks = Eigen::Vector3f(0.7937, 0.7937, 0.7937); // specular reflection coefficient

// Define two point lights

auto l1 = light{{20, 20, 20}, {500, 500, 500}};

auto l2 = light{{-20, 20, 0}, {500, 500, 500}};

std::vector<light> lights = {l1, l2};

Eigen::Vector3f amb_light_intensity{10, 10, 10}; // ambient light intensity

Eigen::Vector3f eye_pos{0, 0, 10};

float p = 150; // specular exponent; larger values give a tighter highlight

Eigen::Vector3f color = payload.color;

Eigen::Vector3f point = payload.view_pos;

Eigen::Vector3f normal = payload.normal;

Eigen::Vector3f result_color = {0, 0, 0};

for (auto& light : lights)

{

// TODO: For each light source in the code, calculate what the *ambient*, *diffuse*, and *specular*

// components are. Then, accumulate that result on the *result_color* object.

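auto v = eye_pos - point; // v: direction from the shading point to the eye

auto l = light.position - point; // l: direction from the shading point to the light

auto h = (v.normalized() + l.normalized()).normalized(); // h: half vector, the bisector of the unit vectors v and l

auto r = l.dot(l); // squared distance to the light, used for the falloff

// Ambient term

auto ambient = ka.cwiseProduct(amb_light_intensity);

// Diffuse term

auto diffuse = kd.cwiseProduct(light.intensity / r) * std::max(0.0f, normal.normalized().dot(l.normalized()));

// Specular term

auto specular = ks.cwiseProduct(light.intensity / r) * std::pow(std::max(0.0f, normal.normalized().dot(h)), p);

// Accumulate the lighting contributions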

result_color += (ambient + diffuse + specular);

}

return result_color * 255.f;

}
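To make the three terms easier to check by hand, here is a minimal single-channel, single-light sketch of the same Blinn-Phong formula without Eigen. The `Vec3` and `Material` types and the function name `blinn_phong` are assumptions made for this example only; they are not part of the assignment framework.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Returns the unit vector, or the input unchanged if its length is zero.
Vec3 normalized(Vec3 a)
{
    float len = std::sqrt(dot(a, a));
    return len > 0.0f ? a * (1.0f / len) : a;
}

struct Material { float ka, kd, ks, shininess; };

// Single-channel Blinn-Phong for one point light.
float blinn_phong(Vec3 point, Vec3 normal, Vec3 eye, Vec3 light_pos,
                  float light_intensity, float ambient_intensity, Material m)
{
    Vec3 to_light = light_pos - point;
    float r2 = dot(to_light, to_light); // squared distance for the falloff
    assert(r2 > 0.0f);

    Vec3 n = normalized(normal);
    Vec3 l = normalized(to_light);
    Vec3 v = normalized(eye - point);
    Vec3 h = normalized(l + v); // half vector between unit l and unit v

    float ambient = m.ka * ambient_intensity;
    float diffuse = m.kd * (light_intensity / r2) * std::max(0.0f, dot(n, l));
    float specular = m.ks * (light_intensity / r2) *
                     std::pow(std::max(0.0f, dot(n, h)), m.shininess);
    return ambient + diffuse + specular;
}
```

With the light and the eye both straight above the surface, the diffuse and specular terms each reduce to intensity / r², which gives an easy value to verify.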

// Run phong_fragment_shader

r.set_texture(Texture(Utils::PathFromAsset("model/spot/hmap.jpg")));

std::function<Eigen::Vector3f(fragment_shader_payload)> active_shader = phong_fragment_shader;

3. Function texture_fragment_shader(): building on the Blinn-Phong implementation, treat the texture color as kd in the formula to implement the texture shading fragment shader.

Eigen::Vector3f texture_fragment_shader(const fragment_shader_payload& payload)

{

Eigen::Vector3f return_color = {0, 0, 0};

if (payload.texture)

{

// TODO: Get the texture value at the texture coordinates of the current fragment

// Look up the texture value at the current fragment's texture coordinates

return_color = payload.texture->getColor(payload.tex_coords.x(), payload.tex_coords.y());

}

Eigen::Vector3f texture_color;

texture_color << return_color.x(), return_color.y(), return_color.z();

Eigen::Vector3f ka = Eigen::Vector3f(0.005, 0.005, 0.005);

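// The diffuse coefficient is the texture color, normalized to the [0,1] range

Eigen::Vector3f kd = texture_color / 255.f;

// Specular coefficient; controls the strength and color of the highlight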

Eigen::Vector3f ks = Eigen::Vector3f(0.7937, 0.7937, 0.7937);

auto l1 = light{{20, 20, 20}, {500, 500, 500}};

auto l2 = light{{-20, 20, 0}, {500, 500, 500}};

std::vector<light> lights = {l1, l2};

Eigen::Vector3f amb_light_intensity{10, 10, 10};

Eigen::Vector3f eye_pos{0, 0, 10};

float p = 150;

// Texture color, fragment position, and fragment normal

Eigen::Vector3f color = texture_color;

Eigen::Vector3f point = payload.view_pos;

Eigen::Vector3f normal = payload.normal;

Eigen::Vector3f result_color = {0, 0, 0};

//Blinn-Phong

for (auto& light : lights)

{

// TODO: For each light source in the code, calculate what the *ambient*, *diffuse*, and *specular*

// components are. Then, accumulate that result on the *result_color* object.

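auto v = eye_pos - point; // v: direction from the shading point to the eye

auto l = light.position - point; // l: direction from the shading point to the light

auto h = (v.normalized() + l.normalized()).normalized(); // h: half vector, the bisector of the unit vectors v and l

auto r = l.dot(l); // squared distance to the light, used for the falloff

auto ambient = ka.cwiseProduct(amb_light_intensity);

// Diffuse term, using the texture color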

auto diffuse = kd.cwiseProduct(light.intensity / r) * std::max(0.0f, normal.normalized().dot(l.normalized()));

auto specular = ks.cwiseProduct(light.intensity / r) * std::pow(std::max(0.0f, normal.normalized().dot(h)), p);

result_color += (ambient + diffuse + specular);

}

// Convert the color from the [0,1] range back to [0,255]

return result_color * 255.f;

}

// When running texture_fragment_shader, remember to change the texture file

// Change the texture file from hmap.jpg to spot_texture.png

r.set_texture(Texture(Utils::PathFromAsset("model/spot/spot_texture.png")));

std::function<Eigen::Vector3f(fragment_shader_payload)> active_shader = texture_fragment_shader;

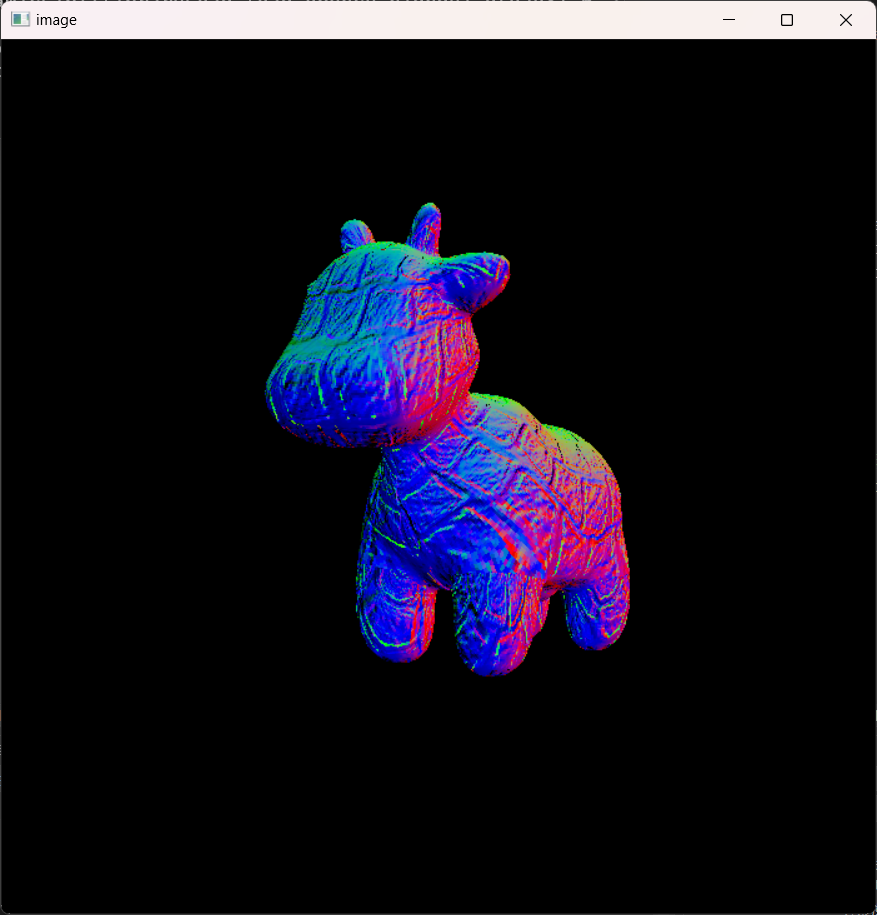

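4. Function bump_fragment_shader(): building on the Blinn-Phong implementation, implement the bump mapping fragment shader.

Core idea: normal perturbation does not change the model's actual geometry. It modifies the surface normal direction to simulate changes in lighting. When light hits these "virtual" bumps, it produces light and dark variation, which tricks the eye into perceiving surface detail.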
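The perturbation step can be isolated as a small Eigen-free sketch. It follows the formulas in the assignment's TODO comment (t, b = n × t, TBN, ln = (-dU, -dV, 1)) and takes the height differences dU and dV as inputs. The `Vec3` type and the name `perturb_normal` are hypothetical and exist only for this illustration.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }

Vec3 cross(Vec3 a, Vec3 b)
{
    return {a.y * b.z - a.z * b.y,
            a.z * b.x - a.x * b.z,
            a.x * b.y - a.y * b.x};
}

Vec3 normalized(Vec3 a)
{
    float len = std::sqrt(a.x * a.x + a.y * a.y + a.z * a.z);
    assert(len > 0.0f);
    return a * (1.0f / len);
}

// Perturb a unit normal n by the height-map slopes dU and dV.
Vec3 perturb_normal(Vec3 n, float dU, float dV)
{
    float s = std::sqrt(n.x * n.x + n.z * n.z);
    assert(s > 0.0f); // the tangent formula is undefined for n = (0, +-1, 0)

    Vec3 t{n.x * n.y / s, s, n.z * n.y / s}; // tangent, as given in the TODO comment
    Vec3 b = cross(n, t);                    // bitangent

    // TBN * (-dU, -dV, 1): the columns of TBN are t, b and n.
    Vec3 perturbed = t * (-dU) + b * (-dV) + n;
    return normalized(perturbed);
}
```

With dU = dV = 0 the function returns the original normal, so a flat height map leaves the shading unchanged.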

Eigen::Vector3f bump_fragment_shader(const fragment_shader_payload& payload)

{

Eigen::Vector3f ka = Eigen::Vector3f(0.005, 0.005, 0.005);

Eigen::Vector3f kd = payload.color;

Eigen::Vector3f ks = Eigen::Vector3f(0.7937, 0.7937, 0.7937);

// Light setup

auto l1 = light{{20, 20, 20}, {500, 500, 500}};

auto l2 = light{{-20, 20, 0}, {500, 500, 500}};

std::vector<light> lights = {l1, l2};

Eigen::Vector3f amb_light_intensity{10, 10, 10};

Eigen::Vector3f eye_pos{0, 0, 10};

float p = 150;

Eigen::Vector3f color = payload.color;

Eigen::Vector3f point = payload.view_pos;

Eigen::Vector3f normal = payload.normal;

// Perturbation strength parameters

// kh: scales the height variation

// kn: scales the normal perturbation

float kh = 0.2, kn = 0.1;

// TODO: Implement bump mapping here

// Let n = normal = (x, y, z)

// Vector t = (x*y/sqrt(x*x+z*z),sqrt(x*x+z*z),z*y/sqrt(x*x+z*z))

// Vector b = n cross product t

// Matrix TBN = [t b n]

// dU = kh * kn * (h(u+1/w,v)-h(u,v))

// dV = kh * kn * (h(u,v+1/h)-h(u,v))

// Vector ln = (-dU, -dV, 1)

// Normal n = normalize(TBN * ln)

auto x = normal.x();

auto y = normal.y();

auto z = normal.z();

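// Tangent vector t

Eigen::Vector3f t(x * y / sqrt(x * x + z * z), sqrt(x * x + z * z), z * y / sqrt(x * x + z * z));

// Bitangent vector b (cross product of the normal and the tangent)

Eigen::Vector3f b = normal.cross(t);

// TBN matrix: maps tangent-space (texture-space) vectors into the space of the normal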

Eigen::Matrix3f TBN;

TBN <<

t.x(), b.x(), normal.x(),

t.y(), b.y(), normal.y(),

t.z(), b.z(), normal.z();

auto u = payload.tex_coords.x();

auto v = payload.tex_coords.y();

auto w = payload.texture->width;

auto h = payload.texture->height;

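// Height difference between neighboring texels (the norm of the texture color is used as the height)

auto dU = kh * kn * (payload.texture->getColor(u + 1.0f / w, v).norm() - payload.texture->getColor(u, v).norm());

auto dV = kh * kn * (payload.texture->getColor(u, v + 1.0f / h).norm() - payload.texture->getColor(u, v).norm());

// Perturbed normal in tangent space (the z component of 1 points along the unperturbed normal)

Eigen::Vector3f ln{ -dU,-dV,1.0f };

// Transform the perturbed normal out of tangent space, into the space the input normal is expressed in

normal = TBN * ln;

Eigen::Vector3f result_color = normal.normalized(); // normalize; the normal itself is output as the color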

return result_color * 255.f;

}

// Run bump_fragment_shader

// Remember to switch the texture file back to hmap.jpg

r.set_texture(Texture(Utils::PathFromAsset("model/spot/hmap.jpg")));

std::function<Eigen::Vector3f(fragment_shader_payload)> active_shader = bump_fragment_shader;

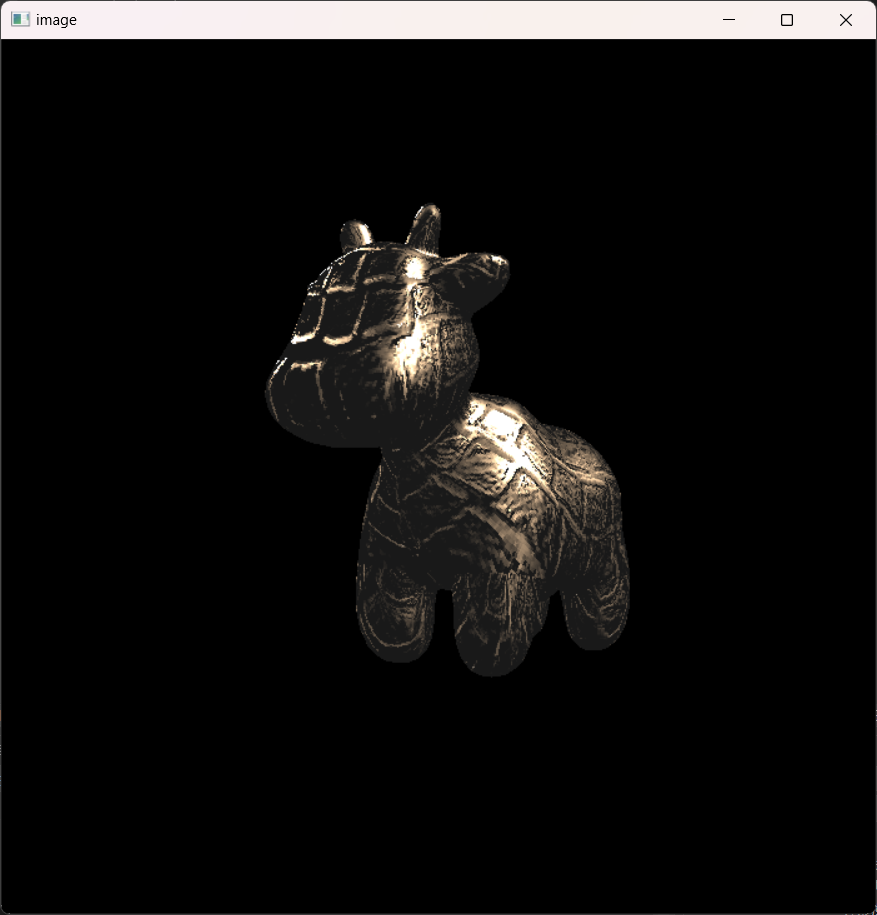

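5. Function displacement_fragment_shader(): building on the Blinn-Phong implementation, implement the displacement mapping fragment shader.

Displacement mapping: unlike normal perturbation, displacement mapping not only changes the surface normal but also actually moves positions, which gives more convincing relief, especially along edges and silhouettes.

Core idea: displacement mapping uses a height map to move positions, so that a flat surface appears to have real geometric relief. In this assignment the offset is applied per fragment in the shader: the shading point is moved along the normal, and the normal is updated to match. The rasterized triangles themselves are not moved here, so only the lighting sees the displaced position.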
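The two ingredients, the finite-difference slopes of the height map and the move along the normal, can be sketched in isolation as follows. This is only an illustration under assumptions: `height_slopes` and `displace` are hypothetical names, the height function is passed in as any callable taking (u, v), and the constants kh and kn play the same role as in the assignment's TODO comment.

```cpp
#include <cassert>

struct Vec3 { float x, y, z; };
struct Slopes { float dU, dV; };

// Forward differences of a height function h(u, v), one texel apart:
//   dU = kh * kn * (h(u + 1/width, v) - h(u, v))
//   dV = kh * kn * (h(u, v + 1/height) - h(u, v))
template <typename HeightFn>
Slopes height_slopes(HeightFn h, float u, float v,
                     int width, int height, float kh, float kn)
{
    assert(width > 0 && height > 0);
    float h0 = h(u, v);
    float dU = kh * kn * (h(u + 1.0f / width, v) - h0);
    float dV = kh * kn * (h(u, v + 1.0f / height) - h0);
    return {dU, dV};
}

// Move the shading point along the normal: p' = p + kn * n * h(u, v).
Vec3 displace(Vec3 p, Vec3 n, float kn, float height_value)
{
    float s = kn * height_value;
    return {p.x + n.x * s, p.y + n.y * s, p.z + n.z * s};
}
```

The slopes feed the same ln = (-dU, -dV, 1) vector used for bump mapping, while `displace` produces the position that the lighting loop then uses in place of the original point.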

Eigen::Vector3f displacement_fragment_shader(const fragment_shader_payload& payload)

{

// Lighting setup

Eigen::Vector3f ka = Eigen::Vector3f(0.005, 0.005, 0.005);

Eigen::Vector3f kd = payload.color;

Eigen::Vector3f ks = Eigen::Vector3f(0.7937, 0.7937, 0.7937);

auto l1 = light{{20, 20, 20}, {500, 500, 500}};

auto l2 = light{{-20, 20, 0}, {500, 500, 500}};

std::vector<light> lights = {l1, l2};

Eigen::Vector3f amb_light_intensity{10, 10, 10};

Eigen::Vector3f eye_pos{0, 0, 10};

float p = 150;

Eigen::Vector3f color = payload.color;

Eigen::Vector3f point = payload.view_pos; // original position of the shading point

Eigen::Vector3f normal = payload.normal; // original normal

// Displacement strength parameters

float kh = 0.2, kn = 0.1;

// TODO: Implement displacement mapping here

// Let n = normal = (x, y, z)

// Vector t = (x*y/sqrt(x*x+z*z),sqrt(x*x+z*z),z*y/sqrt(x*x+z*z))

// Vector b = n cross product t

// Matrix TBN = [t b n]

// dU = kh * kn * (h(u+1/w,v)-h(u,v))

// dV = kh * kn * (h(u,v+1/h)-h(u,v))

// Vector ln = (-dU, -dV, 1)

// Position p = p + kn * n * h(u,v)

// Normal n = normalize(TBN * ln)

// Tangent vector t (along the texture U direction)

auto x = normal.x();

auto y = normal.y();

auto z = normal.z();

Eigen::Vector3f t(x * y / sqrt(x * x + z * z), sqrt(x * x + z * z), z * y / sqrt(x * x + z * z));

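// Bitangent vector b (cross product of the normal and the tangent, along the texture V direction)

Eigen::Vector3f b = normal.cross(t);

// Build the TBN matrix (maps tangent-space vectors into the space of the normal)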

Eigen::Matrix3f TBN;

TBN <<

t.x(), b.x(), normal.x(),

t.y(), b.y(), normal.y(),

t.z(), b.z(), normal.z();

// Texture coordinates and texture size

auto u = payload.tex_coords.x();

auto v = payload.tex_coords.y();

auto w = payload.texture->width;

auto h = payload.texture->height;

// Height difference between neighboring texels (used to compute the normal)

auto dU = kh * kn * (payload.texture->getColor(u + 1.0f / w, v).norm() - payload.texture->getColor(u, v).norm());

auto dV = kh * kn * (payload.texture->getColor(u, v + 1.0f / h).norm() - payload.texture->getColor(u, v).norm());

Eigen::Vector3f ln{ -dU,-dV,1.0f };

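// Move the shading point along the normal to get the "displacement" effect

point += (kn * normal * payload.texture->getColor(u, v).norm());

// Transform the perturbed normal out of tangent space

normal = TBN * ln;

normal.normalize(); // normalize in place; normalized() only returns a copy and leaves normal unchanged

// Blinn-Phong lighting model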

Eigen::Vector3f result_color = { 0, 0, 0 };

for (auto& light : lights)

{

// TODO: For each light source in the code, calculate what the *ambient*, *diffuse*, and *specular*

// components are. Then, accumulate that result on the *result_color* object.

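auto v = eye_pos - point; // v: direction from the shading point to the eye

auto l = light.position - point; // l: direction from the shading point to the light

auto h = (v.normalized() + l.normalized()).normalized(); // h: half vector, the bisector of the unit vectors v and l

auto r = l.dot(l); // squared distance to the light, used for the falloff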

auto ambient = ka.cwiseProduct(amb_light_intensity);

auto diffuse = kd.cwiseProduct(light.intensity / r) * std::max(0.0f, normal.normalized().dot(l.normalized()));

auto specular = ks.cwiseProduct(light.intensity / r) * std::pow(std::max(0.0f, normal.normalized().dot(h)), p);

result_color += (ambient + diffuse + specular);

}

return result_color * 255.f;

}

// Run displacement_fragment_shader

r.set_texture(Texture(Utils::PathFromAsset("model/spot/hmap.jpg")));

std::function<Eigen::Vector3f(fragment_shader_payload)> active_shader = displacement_fragment_shader;

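[Bonus, 3 points]: Try more models: find other usable .obj files, submit the rendered results, and save the models under the /models directory. These models should also contain vertex normal information.

[Bonus, 5 points]: Bilinear texture interpolation: sample the texture with bilinear interpolation. Implement a new method Vector3f getColorBilinear(float u, float v) in the Texture class and call it from the fragment shader. To make the effect of bilinear interpolation more visible, consider using a smaller texture image. Submit both the plain texture sampling result and the bilinear result, and compare them.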
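For the bilinear bonus item, the sampling logic can be sketched on a plain grayscale grid before porting it into the Texture class. Everything here is an assumption for illustration: the `GrayImage` type, the `sample_bilinear` name, the texel-center convention (centers at +0.5), and clamping at the borders. The framework's Texture class stores an OpenCV image and flips v, which this sketch leaves out.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical single-channel image, stored row by row.
struct GrayImage {
    int width;
    int height;
    std::vector<float> data;

    // Texel lookup with coordinates clamped to the image borders.
    float at(int x, int y) const
    {
        x = std::max(0, std::min(x, width - 1));
        y = std::max(0, std::min(y, height - 1));
        return data[static_cast<std::size_t>(y) * width + x];
    }
};

// Bilinear sample at (u, v) in [0, 1] x [0, 1].
float sample_bilinear(const GrayImage& img, float u, float v)
{
    assert(img.width > 0 && img.height > 0);

    // Continuous texel coordinates, with texel centers at integer positions.
    float x = u * img.width - 0.5f;
    float y = v * img.height - 0.5f;

    int x0 = static_cast<int>(std::floor(x));
    int y0 = static_cast<int>(std::floor(y));
    float s = x - x0; // horizontal blend weight
    float t = y - y0; // vertical blend weight

    // Blend horizontally on the two rows, then vertically between them.
    float top = img.at(x0, y0) * (1.0f - s) + img.at(x0 + 1, y0) * s;
    float bottom = img.at(x0, y0 + 1) * (1.0f - s) + img.at(x0 + 1, y0 + 1) * s;
    return top * (1.0f - t) + bottom * t;
}
```

A getColorBilinear method would apply the same blend to each color channel of the four neighboring texels. On a small texture the result is visibly smoother than nearest-texel lookup, which is why the assignment suggests shrinking the texture for the comparison.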